Image Data Workflows

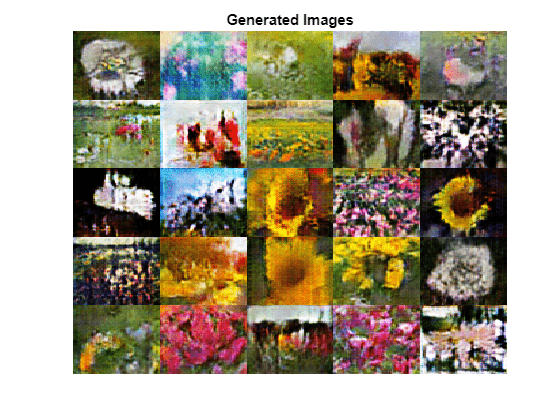

Use transfer learning to take advantage of the knowledge provided by a pretrained network to learn new patterns in new image data. Fine-tuning a pretrained image classification network with transfer learning is typically much faster and easier than training from scratch. Using pretrained deep networks enables you to quickly create models for new tasks without defining and training a new network, having millions of images, or having a powerful GPU. You can also create new deep networks for image classification and regression tasks by defining the network architecture and training the network from scratch.
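As a rough illustration of the transfer learning workflow described above, the following sketch fine-tunes a pretrained network on a new image set. It assumes R2024a or later (for `imagePretrainedNetwork`) and a hypothetical folder named `flowerImages` that contains one subfolder of images per class. The folder name, the choice of SqueezeNet, and the training option values are illustrative assumptions, not recommendations.

```matlab
% Hypothetical data location: one subfolder per class (assumption).
imds = imageDatastore("flowerImages", ...
    IncludeSubfolders=true, LabelSource="foldernames");
numClasses = numel(categories(imds.Labels));

% Load a pretrained network and adapt its classification head
% to the number of classes in the new data.
net = imagePretrainedNetwork("squeezenet", NumClasses=numClasses);

% Resize the images to the input size that the network expects.
inputSize = net.Layers(1).InputSize;
augimds = augmentedImageDatastore(inputSize(1:2), imds);

% Illustrative option values; a small learning rate is a common
% choice when fine-tuning pretrained weights.
options = trainingOptions("adam", ...
    InitialLearnRate=1e-4, ...
    MaxEpochs=5, ...
    Metrics="accuracy", ...
    Plots="training-progress");

% Fine-tune the network using cross-entropy loss.
net = trainnet(augimds, net, "crossentropy", options);
```

In practice you would usually hold out part of the data for validation and pass it through the `ValidationData` training option.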

You can train the network using the trainnet function with the trainingOptions function, or you can specify a custom training loop using dlnetwork objects or dlarray object functions.
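A custom training loop might look like the following minimal sketch. It uses random synthetic data and a deliberately tiny network, both of which are assumptions made only to keep the example self-contained. The helper `modelLoss` is a hypothetical name, not a toolbox function.

```matlab
% Synthetic data (assumption): 64 grayscale 28-by-28 images, 3 classes.
X = dlarray(rand(28, 28, 1, 64, "single"), "SSCB");
labels = categorical(randi(3, 64, 1), 1:3);
T = single(onehotencode(labels, 2))';   % 3-by-64 one-hot targets

% A deliberately small network, defined from scratch.
layers = [
    imageInputLayer([28 28 1], Normalization="none")
    convolution2dLayer(3, 8, Padding="same")
    reluLayer
    globalAveragePooling2dLayer
    fullyConnectedLayer(3)
    softmaxLayer];
net = dlnetwork(layers);

% State for the Adam optimizer.
avgGrad = [];
avgSqGrad = [];

for iteration = 1:20
    % Evaluate the loss and gradients with automatic differentiation.
    [loss, gradients] = dlfeval(@modelLoss, net, X, T);

    % Update the learnable parameters.
    [net, avgGrad, avgSqGrad] = adamupdate(net, gradients, ...
        avgGrad, avgSqGrad, iteration);
end

% Hypothetical helper: forward pass, loss, and gradients.
function [loss, gradients] = modelLoss(net, X, T)
    Y = forward(net, X);
    loss = crossentropy(Y, T);
    gradients = dlgradient(loss, net.Learnables);
end
```

For most standard classification and regression tasks, `trainnet` with `trainingOptions` is the simpler route. A custom loop is mainly useful when you need control that the training options do not provide.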

You can train a neural network on a CPU, a GPU, multiple CPUs or GPUs, or in parallel on a cluster or in the cloud. Training on a GPU or in parallel requires Parallel Computing Toolbox™. Using a GPU requires a supported GPU device (for information on supported devices, see GPU Computing Requirements (Parallel Computing Toolbox)). Specify the execution environment using the trainingOptions function.
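For example, one way to select the hardware explicitly is sketched below. The solver name is an arbitrary choice for illustration. By default, the `ExecutionEnvironment` option is `"auto"`, which uses a GPU when one is available.

```matlab
% Use a GPU when a supported device is available; otherwise use the CPU.
if canUseGPU
    env = "gpu";
else
    env = "cpu";
end

% "sgdm" is an illustrative solver choice (assumption).
options = trainingOptions("sgdm", ExecutionEnvironment=env);
```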

You can monitor training progress using built-in plots of network accuracy and loss, and you can investigate trained networks using visualization techniques such as Grad-CAM, occlusion sensitivity, LIME, and deep dream.
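As one illustration of these visualization techniques, the sketch below computes a Grad-CAM map for a single image. It assumes R2024a or later, uses the pretrained SqueezeNet network and the `peppers.png` sample image that ships with MATLAB, and picks the overlay styling arbitrarily.

```matlab
% Load a pretrained network and its class names.
[net, classNames] = imagePretrainedNetwork("squeezenet");

% Read a sample image and resize it to the network input size.
img = imread("peppers.png");
inputSize = net.Layers(1).InputSize(1:2);
img = imresize(img, inputSize);

% Find the top-scoring class for this image.
scores = predict(net, single(img));
[~, classIdx] = max(scores);
label = classNames(classIdx);

% Compute the Grad-CAM map for that class.
scoreMap = gradCAM(net, img, classIdx);

% Overlay the map on the image (styling is an arbitrary choice).
figure
imshow(img)
hold on
imagesc(scoreMap, AlphaData=0.5)
colormap jet
hold off
title("Grad-CAM for class: " + label)
```

Regions with high values in the map are the parts of the image that contribute most to the score of the selected class.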

When you have a trained network, you can verify its robustness, compute network output bounds, and find adversarial examples. You can also use a trained network in Simulink® models by using blocks from the Deep Neural Networks block library.

Categories

- Data Preprocessing

Manage and preprocess image data for deep learning

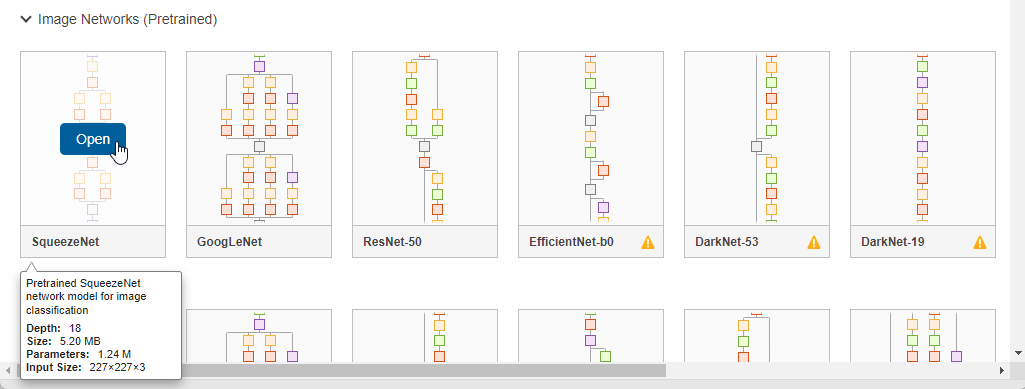

- Pretrained Networks

Use pretrained image networks to quickly learn new tasks

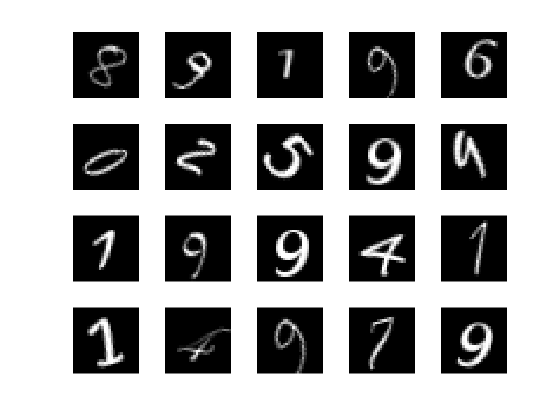

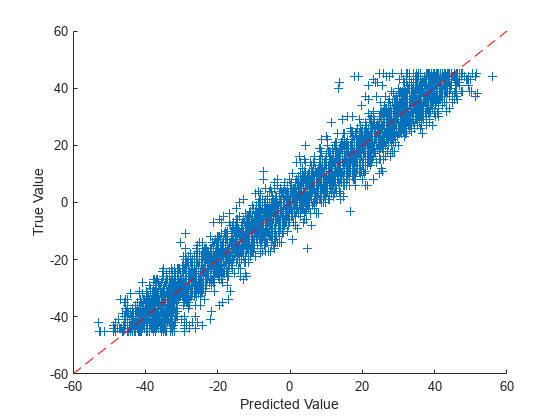

- Build and Train Networks

Create deep neural networks for image data and train from scratch

- Visualization and Verification

Visualize neural network behavior, explain predictions, and verify robustness using image data