Collect and Explore Metric Data by Using Metrics Dashboard

Note

If you currently only use the Metrics Dashboard to analyze your model size, architecture, and complexity, then you can use the Model Maintainability Dashboard instead. For information, see Migrating from Metrics Dashboard to Model Maintainability Dashboard.

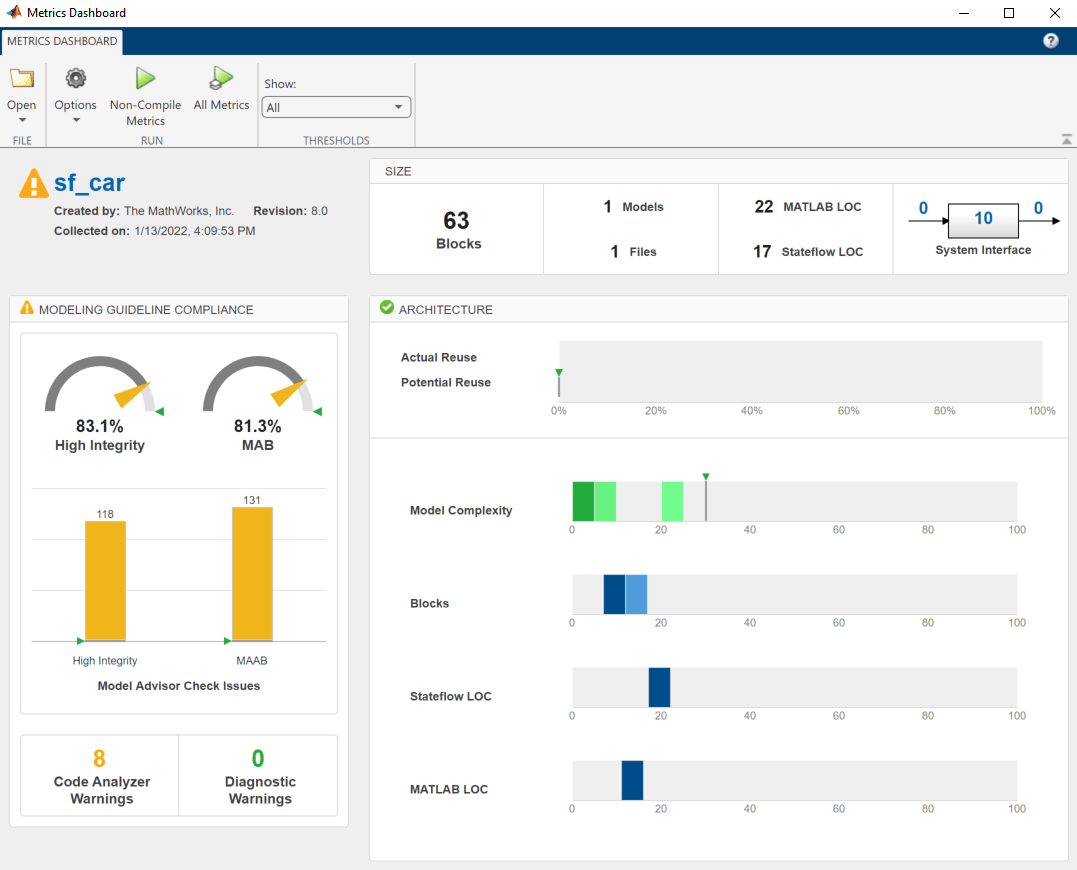

The Metrics Dashboard collects and integrates quality metric data from multiple Model-Based Design tools to provide you with an assessment of your project quality status. To open the dashboard:

In the Apps gallery, click Metrics Dashboard.

At the command line, enter metricsdashboard(system). The argument system can be either a model name or a block path to a subsystem, but it cannot be a Configurable Subsystem block.

You can collect metric data by using the dashboard or programmatically by using the slmetric.Engine API. When you open the dashboard, if you have previously collected metric data for a particular model, the dashboard populates from the existing data in the database.
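As a minimal sketch of programmatic collection, the following MATLAB code collects the Simulink block count metric with slmetric.Engine and prints the value and aggregated value for each component. It assumes Simulink Check is installed and that a model named myModel (a hypothetical name) is on the path. The dashboard is opened only after collection, because collecting metric data programmatically closes a dashboard that is already open for the same model.

```matlab
% Minimal sketch: collect one metric programmatically with slmetric.Engine.
% 'myModel' is a hypothetical model name; replace it with a model on your path.
model = 'myModel';
load_system(model);

metricEngine = slmetric.Engine();
setAnalysisRoot(metricEngine, 'Root', model);

% Collect only the Simulink block count metric
metricID = 'mathworks.metrics.SimulinkBlockCount';
execute(metricEngine, metricID);

% Each result collection holds the per-component results for one metric
resultCollection = getMetrics(metricEngine, metricID);
for n = 1:numel(resultCollection)
    if resultCollection(n).Status == 0
        results = resultCollection(n).Results;
        for m = 1:numel(results)
            fprintf('%s: value %g, aggregated value %g\n', ...
                results(m).ComponentPath, results(m).Value, ...
                results(m).AggregatedValue);
        end
    else
        fprintf('No results for %s\n', resultCollection(n).MetricID);
    end
end

% Open the dashboard after collection so that it populates from the stored data
metricsdashboard(model);
```

Collecting a single metric ID keeps the run short; calling execute(metricEngine) without an ID collects all available metrics instead.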

If you want to use the dashboard to collect (or re-collect) metric data, in the toolbar:

Use the Options menu to specify whether to include model references and libraries in the data collection.

Click All Metrics. If you do not want to collect metrics that require compiling the model, click Non-Compile Metrics.

The Metrics Dashboard provides the system name and a data collection timestamp. If there were issues during data collection, click the alert icon to see warnings.

You can have only one dashboard open per model or subsystem at once. Also, if a dashboard is open for a model or subsystem, and you programmatically collect metric data for that model or subsystem, the dashboard automatically closes.

Metrics Dashboard Widgets

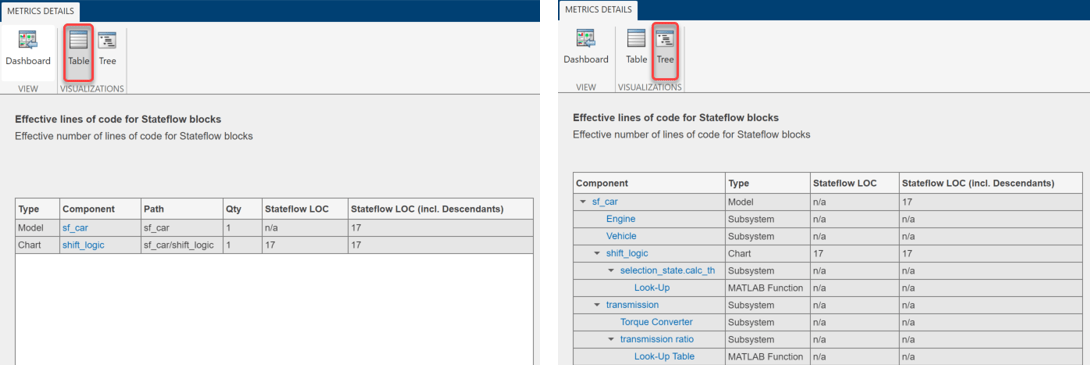

The Metrics Dashboard contains widgets that provide visualization of metric data in these categories: size, modeling guideline compliance, and architecture. To explore the data in more detail, click an individual metric widget. For your selected metric, a table displays the value, aggregated value, and measures (if applicable) at the model component level. From the table, the dashboard provides traceability and hyperlinks to the data source so that you can get detailed results and recommended actions for troubleshooting issues. When exploring drill-in data, note that:

The Metrics Dashboard calculates metric data per component. A component can be a model, subsystem, chart, or MATLAB Function block.

You can view results in either a Table or Tree view. For the High Integrity and MAB compliance widgets, you can also choose a Grid view. To view highlighted results, click a cell in the Grid view.

To sort the results by value or aggregated value, click the corresponding value column header.

For widgets other than the High Integrity and MAB compliance widgets, you can filter results. To filter results in the Table view, open the context menu on the right side of the TYPE, COMPONENT, or PATH column header. From the TYPE menu, select the component types to show. From the COMPONENT and PATH menus, type a component name or path in the search bar. The Metrics Dashboard saves the filters for each widget, so you can view metric details for other widgets and then return to the filtered results.

In the Table and Tree views, a value or aggregated value of n/a indicates that results are not available for that component. If both the value and the aggregated value are n/a, the Table view does not list the component, but the Tree view does.
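The Table view rule can be sketched in plain MATLAB. This is an illustration of the documented behavior, not the dashboard's implementation: it assumes NaN stands in for n/a and uses hypothetical component names and values.

```matlab
% Hypothetical per-component results; NaN stands in for n/a.
names      = {'top', 'top/Sub1', 'top/Sub2'};
values     = [12, NaN, NaN];
aggregated = [20, 8, NaN];

% Table view: omit a component only when BOTH values are n/a
inTable   = ~(isnan(values) & isnan(aggregated));
tableRows = names(inTable);   % {'top', 'top/Sub1'}

% Tree view: every component stays listed, including top/Sub2
treeRows = names;
```

Here top/Sub1 stays in the Table view because only its value is n/a.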

The metric data that is collected quantifies the overall system, including instances of the same model. For aggregated values, the metric engine aggregates data from each instance of a model in the referencing hierarchy. For example, if the same model is referenced twice in the system hierarchy, its block count contributes twice to the overall system block count.
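To make the instance-based aggregation concrete, here is a plain MATLAB sketch (no Simulink Check calls) with a hypothetical hierarchy: model top has 10 blocks of its own and references model child, which has 5 blocks, twice. The traversal visits every instance rather than every unique model, so the aggregated count is 10 + 5 + 5 = 20. The names and counts are illustrative only; this is not the metric engine's implementation.

```matlab
% Hypothetical hierarchy: each model's own block count and the models it references.
ownBlocks = containers.Map({'top', 'child'}, {10, 5});
refs      = containers.Map({'top', 'child'}, {{'child', 'child'}, {}});

% Walk every model instance, so a model referenced twice is counted twice
stack = {'top'};
aggregated = 0;
while ~isempty(stack)
    current = stack{end};
    stack(end) = [];
    aggregated = aggregated + ownBlocks(current);
    stack = [stack, refs(current)];
end
disp(aggregated)   % 20
```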

If a subsystem, chart, or MATLAB Function block uses a parameter or is flagged for an issue, then the parameter count or issue count is increased for the parent component.
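A plain MATLAB sketch of this attribution rule, using a hypothetical component hierarchy and a hypothetical flagged chart, shows the issue being counted against the parent component. It models only each component's own count, not the aggregated count, and is not how the dashboard is implemented.

```matlab
% Hypothetical hierarchy: each component records its parent component.
names   = {'top', 'top/Controller', 'top/Controller/Chart'};
parents = {'',    'top',            'top/Controller'};

% Hypothetical Model Advisor finding on the chart
flagged = {'top/Controller/Chart'};

issueCount = containers.Map(names, num2cell(zeros(1, numel(names))));
for k = 1:numel(flagged)
    % The issue is attributed to the parent of the flagged component
    parent = parents{strcmp(names, flagged{k})};
    issueCount(parent) = issueCount(parent) + 1;
end
```

After the loop, top/Controller has an issue count of 1 and the chart itself has 0.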

The Metrics Dashboard analyzes variants.

For custom metrics, you can specify widgets to add to the dashboard. You can also remove widgets. To learn more about customizing the Metrics Dashboard, see Customize Metrics Dashboard Layout and Functionality.

Size

This table lists the Metrics Dashboard widgets that provide an overall picture of the size of your system, along with the detailed information available when you drill into each widget.

| Widget | Metric | Drill-In Data |
|---|---|---|
| Blocks | Simulink block count (mathworks.metrics.SimulinkBlockCount) | Number of blocks by component |
| Models | Model file count (mathworks.metrics.ModelFileCount) | Number of model files by component |
| Files | File count (mathworks.metrics.FileCount) | Number of model and library files by component |
| MATLAB LOC | Effective lines of MATLAB code (mathworks.metrics.MatlabLOCCount) | Effective lines of code in MATLAB Function blocks and MATLAB functions in Stateflow, by component |
| Stateflow LOC | Effective lines of code for Stateflow blocks (mathworks.metrics.StateflowLOCCount) | Effective lines of code for Stateflow blocks by component |
| System Interface | | |
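The metric IDs in the Size table can also be passed to slmetric.Engine to collect all size metrics in one run. This sketch assumes Simulink Check is installed and a hypothetical model named myModel is on the path. It also assumes that the result whose ComponentPath equals the model name is the analysis root.

```matlab
% Sketch: collect the size metrics from the table in one run.
% 'myModel' is a hypothetical model name.
model = 'myModel';
load_system(model);

sizeMetricIDs = { ...
    'mathworks.metrics.SimulinkBlockCount', ...
    'mathworks.metrics.ModelFileCount', ...
    'mathworks.metrics.FileCount', ...
    'mathworks.metrics.MatlabLOCCount', ...
    'mathworks.metrics.StateflowLOCCount'};

metricEngine = slmetric.Engine();
setAnalysisRoot(metricEngine, 'Root', model);
execute(metricEngine, sizeMetricIDs);

resultCollection = getMetrics(metricEngine, sizeMetricIDs);
for n = 1:numel(resultCollection)
    rc = resultCollection(n);
    if rc.Status == 0 && ~isempty(rc.Results)
        % Assumption: the root result's ComponentPath is the model name
        isRoot = strcmp({rc.Results.ComponentPath}, model);
        if any(isRoot)
            fprintf('%s: aggregated value %g\n', rc.MetricID, ...
                rc.Results(find(isRoot, 1)).AggregatedValue);
        end
    end
end
```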

Modeling Guideline Compliance

For the analyzed system, the modeling guideline compliance widgets indicate the level of compliance with industry standards and guidelines. This table lists these widgets and the detailed information available when you drill into each widget.

| Widget | Metric | Drill-In Data |
|---|---|---|
| High Integrity Compliance | Model Advisor standards check compliance - High Integrity (mathworks.metrics.ModelAdvisorCheckCompliance.hisl_do178) | For each component: Integration with the Model Advisor for more detailed results. |
| MAB Compliance | Model Advisor standards check compliance - MAB (mathworks.metrics.ModelAdvisorCheckCompliance.maab) | For each component: Integration with the Model Advisor for more detailed results. |
| High Integrity Check Issues | Model Advisor standards issues - High Integrity (mathworks.metrics.ModelAdvisorCheckIssues.hisl_do178) | |
| MAB Check Issues | Model Advisor standards issues - MAB (mathworks.metrics.ModelAdvisorCheckIssues.maab) | |
| Code Analyzer Warnings | Warnings from MATLAB Code Analyzer (mathworks.metrics.MatlabCodeAnalyzerWarnings) | Number of Code Analyzer warnings by component |
| Diagnostic Warnings | Simulink diagnostic warning count (mathworks.metrics.DiagnosticWarningsCount) | |

Note

An issue with a compliance check that analyzes configuration parameters adds to the issue count for the model that fails the check.

You can use the Metrics Dashboard to perform compliance and issues checking on your own group of Model Advisor checks. For more information, see Customize Metrics Dashboard Layout and Functionality.
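To check which Model Advisor compliance and issue metric IDs are available in your installation, a short sketch can query slmetric.metric.getAvailableMetrics and keep the IDs that contain ModelAdvisorCheck. This assumes Simulink Check is installed. Whether IDs for your own check groups appear depends on your configuration, so treat the filter as illustrative.

```matlab
% Sketch: list the available Model Advisor compliance and issue metric IDs.
metricIDs = slmetric.metric.getAvailableMetrics;

% Keep only the IDs for Model Advisor check compliance and check issues
isAdvisorMetric  = contains(metricIDs, 'ModelAdvisorCheck');
advisorMetricIDs = metricIDs(isAdvisorMetric);
disp(advisorMetricIDs(:))
```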

Architecture

These widgets provide a view of your system architecture:

The Potential Reuse/Actual Reuse widget shows the percentage of subcomponents that are clones and the percentage of components that are linked library blocks. Orange indicates potential reuse. Blue indicates actual reuse.
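Both reuse figures are simple ratios expressed as percentages. A sketch with hypothetical counts:

```matlab
% Hypothetical counts for one system
numSubcomponents = 40;   % total subcomponents
numClones        = 6;    % subcomponents that are clones
numComponents    = 50;   % total components
numLinked        = 10;   % components that are linked library blocks

potentialReuse = 100 * numClones / numSubcomponents;   % 15 (percent, orange)
actualReuse    = 100 * numLinked / numComponents;      % 20 (percent, blue)
```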

The other system architecture widgets use a value scale. For each value range for a metric, a colored bar indicates the number of components that fall within that range. Darker colors indicate more components.
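The value scale amounts to counting components per value range. This plain MATLAB sketch uses hypothetical cyclomatic complexity values and hypothetical range edges. The dashboard's actual ranges may differ.

```matlab
% Hypothetical cyclomatic complexity values, one per component
complexity = [3 7 12 18 25 31 44];

% Hypothetical value ranges: [0,10), [10,20), [20,30), [30,Inf)
edges  = [0 10 20 30 Inf];
counts = zeros(1, numel(edges) - 1);
for k = 1:numel(counts)
    % Number of components that fall within the k-th range
    counts(k) = sum(complexity >= edges(k) & complexity < edges(k + 1));
end
disp(counts)   % 2 2 1 2
```

A larger count for a range would be drawn as a darker bar.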

This table lists the Metrics Dashboard widgets related to architecture and the detailed information available when you select the widget.

| Widget | Metric | Drill-In Data |
|---|---|---|
| Potential Reuse/Actual Reuse | Potential Reuse | Percentage of subcomponents that are clones; percentage of components that are linked library blocks; integration with the Identify Modeling Clones tool through the Open Conversion Tool button |
| Model Complexity | Cyclomatic complexity (mathworks.metrics.CyclomaticComplexity) | Model complexity by component |
| Blocks | Simulink block count (mathworks.metrics.SimulinkBlockCount) | Number of blocks by component |
| Stateflow LOC | Effective lines of code for Stateflow blocks (mathworks.metrics.StateflowLOCCount) | Effective lines of code for Stateflow blocks by component |
| MATLAB LOC | Effective lines of MATLAB code (mathworks.metrics.MatlabLOCCount) | Effective lines of code in MATLAB Function blocks and MATLAB functions in Stateflow, by component |

Metric Thresholds

For the Model Complexity, Modeling Guideline Compliance, and Reuse widgets, the Metrics Dashboard contains default threshold values. These values indicate whether your data is Compliant or requires review (Warning). Widgets with Compliant data contain green, and widgets with Warning data contain yellow. Widgets that do not have metric threshold values contain blue.

For the Modeling Guideline Compliance metrics, the metric threshold value is zero Model Advisor issues. If your model has issues, the widgets contain yellow. If there are no issues, the widgets contain green.

If your model has warnings, the Code Analyzer Warnings and Diagnostic Warnings widgets contain yellow. If there are no warnings, the widgets contain green.

For the reuse widgets, the metric threshold value is zero. If your model has potential clones, the widget contains yellow. If there are no potential clones, the widget contains green.

For the Model Complexity widget, the metric threshold value is 30. If your model has a cyclomatic complexity greater than 30, the widget contains yellow. If the value is less than or equal to 30, the widget contains green.
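The default rules reduce to two comparisons. complexityStatus and countStatus are hypothetical helpers that mirror the defaults described here. They are not part of the slmetric API, and custom threshold values would replace them.

```matlab
% Hypothetical helpers that mirror the default thresholds;
% they are not part of the slmetric API.
labels = {'Compliant', 'Warning'};

% Model Complexity: Warning only when cyclomatic complexity exceeds 30
complexityStatus = @(c) labels{(c > 30) + 1};

% Compliance issues, Code Analyzer and diagnostic warnings, and potential
% clones: Warning when the count is greater than zero
countStatus = @(n) labels{(n > 0) + 1};

complexityStatus(30)   % 'Compliant' (30 is within the threshold)
complexityStatus(31)   % 'Warning'
countStatus(0)         % 'Compliant'
countStatus(2)         % 'Warning'
```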

You can specify your own metric threshold values for all of the widgets in the Metrics Dashboard. You can also specify values corresponding to a noncompliant range. For more information, see Customize Metrics Dashboard Layout and Functionality.

Dashboard Limitations

When using the Metrics Dashboard, note these considerations:

The analysis root for the Metrics Dashboard cannot be a Configurable Subsystem block.

The Model Advisor, a tool that the Metrics Dashboard uses for data collection, cannot have more than one open session per model. For this reason, when the dashboard collects data, it closes an existing Model Advisor session.

If you use an sl_customization.m file to customize Model Advisor checks, these customizations can change your dashboard results. For example, if you hide Model Advisor checks that the dashboard uses to collect metrics, the dashboard does not collect results for those metrics.

The Metrics Dashboard does not count MAB checks that are not about blocks as issues. Examples include checks that warn about font formatting or file names. As a result, the MAB Check Issues widget might report zero MAB issues while the MAB Compliance widget still reports issues. For more information about these issues, click the MAB Compliance widget.