Electricity Load and Price Forecasting Webinar Case Study

Update: The webinar recording is available at:

http://www.mathworks.com/videos/electricity-load-and-price-forecasting-with-matlab-81765.html

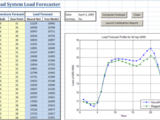

This example demonstrates how to build a short-term electricity load (and price) forecasting system with MATLAB®. Two nonlinear regression models (a neural network and bagged regression trees) are calibrated to forecast hourly day-ahead loads from temperature forecasts, holiday information, and historical loads. The models are trained on hourly data from the NEPOOL region (courtesy of ISO New England) from 2004 to 2007 and tested on out-of-sample data from 2008.
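To illustrate the bagged-regression-tree approach, here is a minimal pure-Python sketch. It is not the case study's code, which is written in MATLAB. It assumes a hypothetical feature set: temperature, hour of day, day of week, a holiday flag, same-hour load one day and one week earlier, and the previous day's average load. It also uses synthetic data instead of the NEPOOL data. The model is evaluated with mean absolute percentage error (MAPE) on a chronological hold-out, mirroring the train-on-earlier, test-on-later split. Tree depth, leaf size, and ensemble size are arbitrary illustrative choices, not the case study's settings.

```python
import math
import random
from statistics import mean

def make_features(load, temp, holidays):
    """One row per hour t >= 168 (needs a week of history). Hypothetical feature set."""
    X, y = [], []
    for t in range(168, len(load)):
        day = t // 24
        X.append([
            temp[t],                               # temperature (forecast) for hour t
            t % 24,                                # hour of day
            day % 7,                               # day of week (0 = first day of series)
            1.0 if day in holidays else 0.0,       # holiday flag
            load[t - 24],                          # same hour, previous day
            load[t - 168],                         # same hour, previous week
            mean(load[(day - 1) * 24:day * 24]),   # average load of the previous day
        ])
        y.append(load[t])
    return X, y

def best_split(X, y, min_leaf):
    """Return (sse, feature, threshold) with the lowest squared error, or None."""
    n = len(y)
    total, total_sq = sum(y), sum(v * v for v in y)
    best = None
    for f in range(len(X[0])):
        order = sorted(range(n), key=lambda i: X[i][f])
        s = sq = 0.0
        for k in range(1, n):                      # left child = first k sorted rows
            v = y[order[k - 1]]
            s, sq = s + v, sq + v * v
            lo, hi = X[order[k - 1]][f], X[order[k]][f]
            if k < min_leaf or n - k < min_leaf or lo == hi:
                continue
            sse = (sq - s * s / k) + ((total_sq - sq) - (total - s) ** 2 / (n - k))
            if best is None or sse < best[0]:
                best = (sse, f, lo)                # rows with x[f] <= lo go left
    return best

def build_tree(X, y, depth, min_leaf):
    split = best_split(X, y, min_leaf) if depth > 0 else None
    if split is None:
        return mean(y)                             # leaf: mean of its targets
    _, f, thr = split
    left = [i for i in range(len(y)) if X[i][f] <= thr]
    right = [i for i in range(len(y)) if X[i][f] > thr]
    return (f, thr,
            build_tree([X[i] for i in left], [y[i] for i in left], depth - 1, min_leaf),
            build_tree([X[i] for i in right], [y[i] for i in right], depth - 1, min_leaf))

def predict_tree(node, x):
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node

def fit_bagged(X, y, n_trees=10, depth=5, min_leaf=5, seed=0):
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(y)) for _ in range(len(y))]  # bootstrap sample
        trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx], depth, min_leaf))
    return trees

def predict_bagged(trees, x):
    return mean(predict_tree(t, x) for t in trees)  # average over the ensemble

def mape(actual, predicted):
    return 100 * mean(abs(a - p) / abs(a) for a, p in zip(actual, predicted))

# Synthetic hourly data for 35 days (NOT the NEPOOL data); days 5 and 6 of each week are weekends.
rng = random.Random(1)
hours = 35 * 24
holidays = {16}
temp = [10 + 8 * math.sin(2 * math.pi * (h % 24 - 9) / 24) + rng.gauss(0, 1)
        for h in range(hours)]
load = [1000 + 300 * math.sin(2 * math.pi * (h % 24 - 6) / 24)
        + 15 * abs(temp[h] - 18)
        - (150 if (h // 24) % 7 in (5, 6) or h // 24 in holidays else 0)
        + rng.gauss(0, 20) for h in range(hours)]

X, y = make_features(load, temp, holidays)
cut = 28 * 24 - 168                  # rows before day 28 train; the last week is held out
X_train, y_train, X_test, y_test = X[:cut], y[:cut], X[cut:], y[cut:]
trees = fit_bagged(X_train, y_train)
preds = [predict_bagged(trees, x) for x in X_test]
err = mape(y_test, preds)
print(f"Out-of-sample MAPE: {err:.2f}%")
```

The split is chronological rather than random, so the test week is never seen during training. Swapping in a neural network, the case study's other model, would change only the fit and predict functions in this sketch. The feature construction and the out-of-sample evaluation would stay the same.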

The application includes an optional Excel front end that lets users call the trained load forecasting models through a DLL deployed from MATLAB.

The document titled "Introduction to Load & Price Forecasting Case Study" will guide you through the different components of the analysis.

If you do not have all of the required toolboxes, you can still view the results of running the analysis by clicking on one of the HTML reports below.

NOTE: The Access database shown in the webinar is not included in this archive because of size restrictions. Equivalent data sets are provided as MAT-files in the Load\Data and Price\Data folders for the load and price forecasting studies, respectively. The raw data files can be obtained directly from ISO New England (www.iso-ne.com).

MORE ON LOAD AND PRICE FORECASTS:

Accurate load forecasts are critical for effective operations and planning at utilities. The load forecast influences a number of decisions, including which generators to commit for a given period, and strongly affects wholesale electricity market prices. Load and price forecasting algorithms also typically feature prominently in reduced-form hybrid models of electricity prices, which are among the most accurate models for simulating markets and valuing energy derivatives. Electricity price forecasts are also widely used by market participants in many trading and risk management applications.

Cite As

Ameya Deoras (2024). Electricity Load and Price Forecasting Webinar Case Study (https://www.mathworks.com/matlabcentral/fileexchange/28684-electricity-load-and-price-forecasting-webinar-case-study), MATLAB Central File Exchange. Retrieved .

Platform Compatibility

Windows, macOS, Linux

Categories

- AI, Data Science, and Statistics > Statistics and Machine Learning Toolbox

- AI, Data Science, and Statistics > Deep Learning Toolbox

- Industries > Energy Production > Energy Trading

- Computational Finance > Financial Instruments Toolbox > Price Instruments Using Functions > Energy Derivatives

Acknowledgements

Inspired by: Intelligent Dynamic Date Ticks

Inspired: Commodities Trading with MATLAB

Electricity Load & Price Forecasting/

Electricity Load & Price Forecasting/Load/

Electricity Load & Price Forecasting/Price/

Electricity Load & Price Forecasting/Util/

Electricity Load & Price Forecasting/Load/html/

Electricity Load & Price Forecasting/Price/html/

| Version | Release Notes |
|---|---|
| 1.7.0.1 | Updated license |
| 1.7.0.0 | Minor bug fix, added more error checking |
| 1.4.0.0 | Updated readme document and description. No code changes. |
| 1.2.0.0 | Added link to recorded webinar. No change to files. |
| 1.1.0.0 | Changed intro document to PDF |
| 1.0.0.0 | |