What Is Computer Vision?

3 things you need to know

Computer vision is a set of techniques for extracting information from images, videos, or point clouds. Computer vision includes image recognition, object detection, activity recognition, 3D pose estimation, video tracking, and motion estimation. Real-world applications include face recognition for logging into smartphones, pedestrian and vehicle avoidance in self-driving vehicles, and tumor detection in medical MRIs. Software tools such as MATLAB® and Simulink® are used to develop computer vision techniques.

Most computer vision techniques are developed using an extensive set of real-world data and a workflow of data exploration, model training, and algorithm development. Computer vision engineers often modify an existing set of techniques to fit the specific problem of interest. The main types of approaches used in computer vision systems are described below.

Deep Learning-Based Techniques

Deep learning approaches to computer vision are useful for object detection, object recognition, image deblurring, and scene segmentation. Deep learning approaches involve training convolutional neural networks (CNNs), which learn directly from data using patterns at different scales. CNN training requires a large set of labeled training images or point clouds. Transfer learning starts from a pretrained network, which shortens training and reduces the amount of labeled data required.
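To make the convolution step concrete, here is a minimal sketch in plain Python. Python is used only for illustration; this is not MATLAB code and not how any toolbox implements CNNs. The `conv2d_valid` and `relu` helpers and the hand-picked edge kernel are assumptions for this example. In a trained CNN, the kernel values are learned from the labeled data rather than chosen by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of rows) without padding.

    As in most deep learning frameworks, the kernel is not flipped,
    so this is technically a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy 4x6 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge kernel (an assumption; a CNN would learn this).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = relu(conv2d_valid(image, kernel))
for row in response:
    print(row)
```

The response is nonzero only in the columns where the window straddles the dark-to-bright boundary, which is the sense in which a convolutional filter "detects" a pattern.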

Feature-Based Techniques

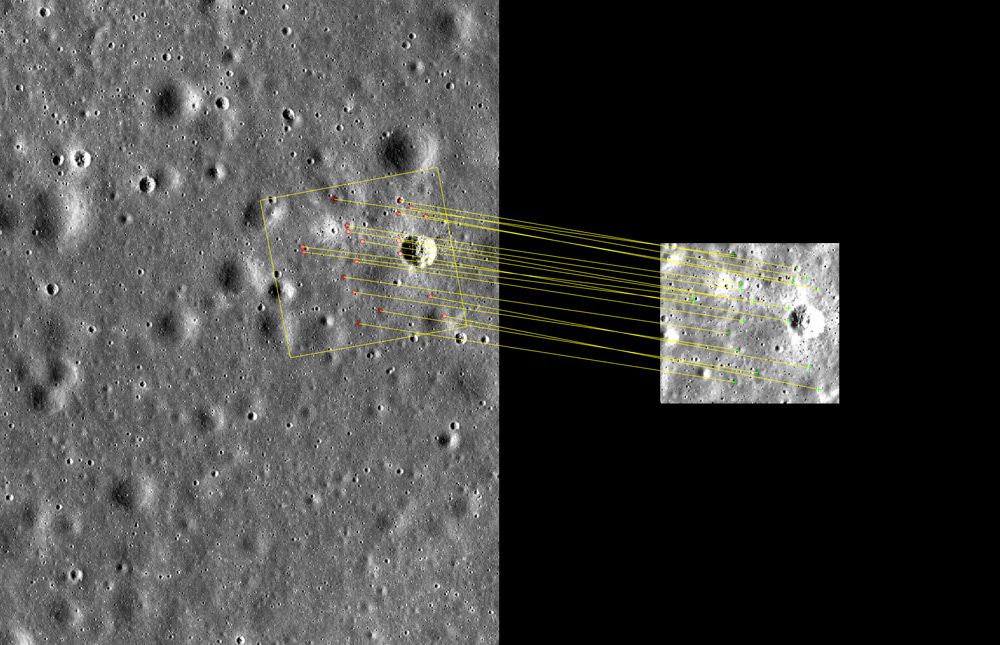

Feature detection and extraction techniques are computer vision algorithms that identify patterns or structures in images and point clouds for image alignment, video stabilization, object detection, and more. In images, useful feature types include edges, corners, or regions with uniform density, and you can identify these features with detectors such as BRISK, SURF, or ORB. In point clouds, you can use eigenvalue-based feature extractors or fast point feature histogram (FPFH) extractors.

Using feature matching to compare an image from a moving spacecraft (right image) to a reference image (left image). (Image courtesy of NASA)
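Detectors such as BRISK and ORB describe each feature with a binary string, and two features are usually compared by counting the bits that differ (the Hamming distance). The sketch below, in plain Python for illustration only, matches toy 8-bit descriptors between a reference image and a current image. The descriptor values, the `match_descriptors` helper, and the `max_distance` threshold are hypothetical; real descriptors are much longer (ORB uses 256 bits), and practical matchers add further checks.

```python
def hamming(a, b):
    """Number of differing bits between two integer-encoded descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(desc_a, desc_b, max_distance=1):
    """For each descriptor in desc_a, find its nearest neighbor in desc_b.

    A pair is kept only if the Hamming distance is at most max_distance.
    Returns a list of (index_a, index_b, distance) tuples.
    """
    matches = []
    for i, da in enumerate(desc_a):
        j, dist = min(
            ((j, hamming(da, db)) for j, db in enumerate(desc_b)),
            key=lambda pair: pair[1],
        )
        if dist <= max_distance:
            matches.append((i, j, dist))
    return matches


# Toy 8-bit descriptors (made-up values for illustration).
reference = [0b10110010, 0b01101100, 0b11110000]
current = [0b01101101, 0b00001111, 0b10110010]

matches = match_descriptors(reference, current)
print(matches)
```

The third reference descriptor has no close counterpart in the current image, so it is left unmatched instead of being forced onto its nearest neighbor.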

Image Processing

Image processing techniques are often applied as a preprocessing step in the computer vision workflow. The type of preprocessing depends on the task. Relevant image processing techniques include:

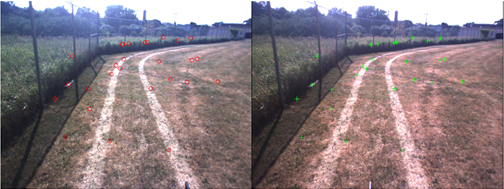

Red cone detection using rgb2hsv color conversion in MATLAB.
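The color-based detection in the figure relies on the fact that hue is easier to threshold in HSV space than in RGB space. As a rough sketch of the same idea in plain Python (not the MATLAB workflow itself), the example below converts pixels with the standard-library `colorsys.rgb_to_hsv` function and flags those whose hue is close to red. The `is_red` and `red_mask` helpers and all threshold values are assumptions that would need tuning for real images.

```python
import colorsys


def is_red(rgb, min_saturation=0.5, min_value=0.3, hue_tolerance=0.05):
    """Return True if an (R, G, B) pixel with 0-255 channels looks red.

    Hue is in [0, 1) and red sits at both ends of that range, so the
    test wraps around 0.
    """
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    near_red = h <= hue_tolerance or h >= 1.0 - hue_tolerance
    return near_red and s >= min_saturation and v >= min_value


def red_mask(image):
    """Build a binary mask (list of rows of booleans) of red pixels."""
    return [[is_red(pixel) for pixel in row] for row in image]


# Tiny 2x2 test image: red, gray, green, and a pinkish red.
image = [
    [(200, 30, 20), (90, 90, 90)],
    [(30, 160, 40), (230, 20, 60)],
]

mask = red_mask(image)
print(mask)
```

The saturation and value checks reject gray and very dark pixels, whose hue is unreliable.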

Point Cloud Processing

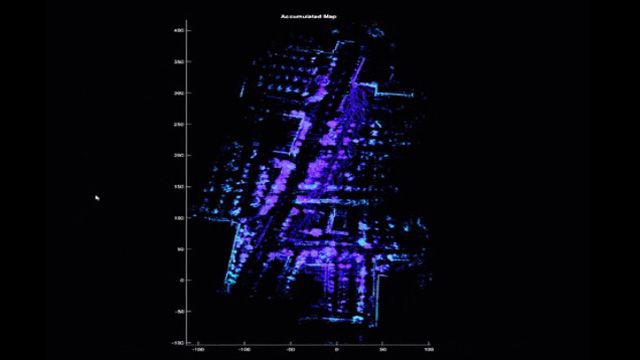

A point cloud is a set of data points in 3D space that together represent a 3D shape or object. Point cloud processing is typically done to preprocess the data in preparation for the computer vision algorithms that analyze it. Point cloud processing typically involves:

3D Point cloud registration and stitching using iterative closest point (ICP) in MATLAB.

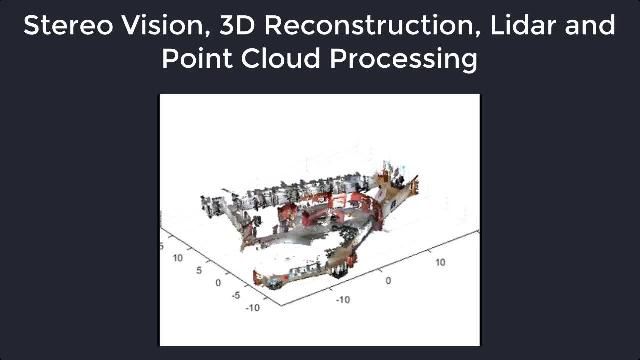
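The registration shown in the figure uses iterative closest point (ICP), which alternates between pairing each point with its nearest neighbor in the other cloud and updating the alignment that best fits those pairs. The sketch below is a deliberately reduced version in plain Python that estimates translation only; full ICP also estimates rotation. The `icp_translation` function and the sample clouds are hypothetical, and the brute-force nearest-neighbor search is suitable only for tiny inputs.

```python
import math


def nearest(point, cloud):
    """Brute-force nearest neighbor of `point` in `cloud`."""
    return min(cloud, key=lambda q: math.dist(point, q))


def icp_translation(moving, fixed, iterations=10, tolerance=1e-9):
    """Estimate the translation that aligns `moving` to `fixed`.

    Translation-only simplification of ICP: for fixed correspondences,
    the least-squares translation is the mean of the residuals.
    """
    t = (0.0, 0.0, 0.0)
    for _ in range(iterations):
        shifted = [tuple(p[k] + t[k] for k in range(3)) for p in moving]
        pairs = [(p, nearest(p, fixed)) for p in shifted]
        step = tuple(
            sum(q[k] - p[k] for p, q in pairs) / len(pairs) for k in range(3)
        )
        t = tuple(t[k] + step[k] for k in range(3))
        if math.sqrt(sum(s * s for s in step)) < tolerance:
            break  # correspondences no longer change the estimate
    return t


# Reference cloud and a copy offset by a small, known translation.
fixed = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
moving = [(x - 0.2, y + 0.1, z + 0.05) for x, y, z in fixed]

estimated = icp_translation(moving, fixed)
print(estimated)
```

This converges here because the offset is small compared with the point spacing, so the nearest neighbors are the true correspondences. With a poor initial alignment, ICP can settle on a wrong solution.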

3D Vision Processing

3D vision processing techniques estimate the 3D structure of a scene using multiple images taken with a calibrated camera. These images are typically generated from a monocular camera or stereo camera pair. 3D vision processing techniques include:

Computer vision is essential in a wide range of real-world applications. Some of the most common of these are discussed below.

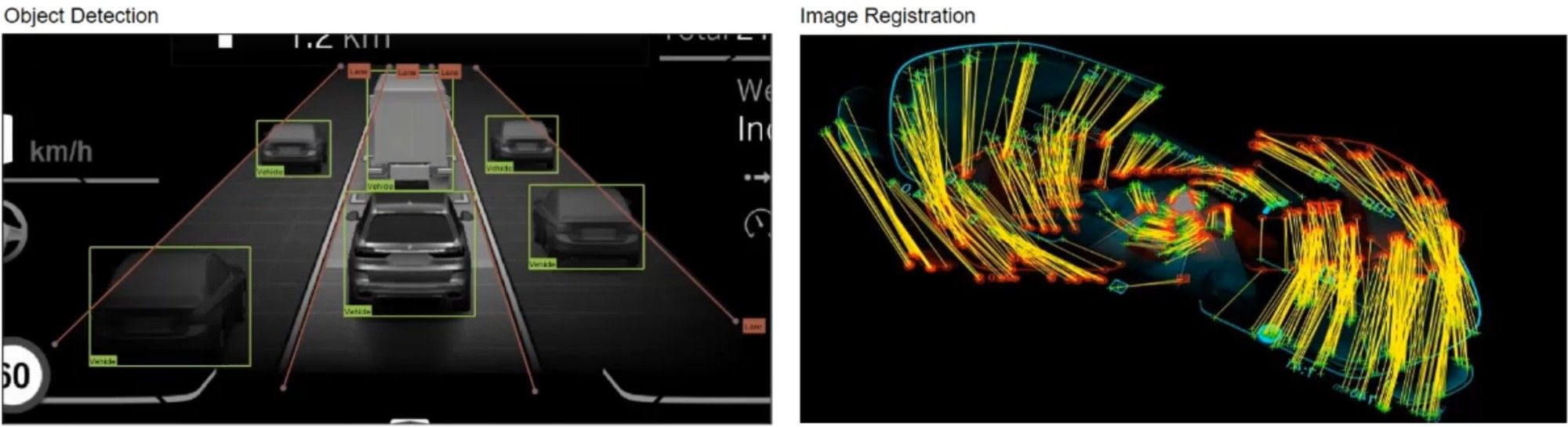

Autonomous Systems

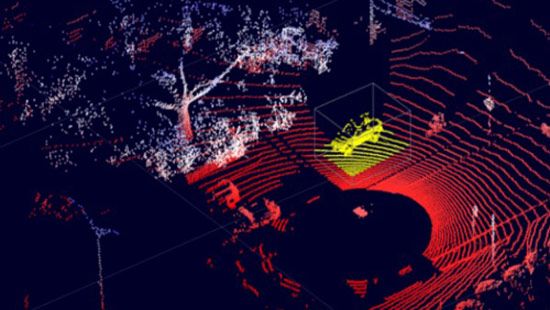

Aerial or ground autonomous systems use various sensors that collect visual or point cloud data from their environments. The systems use this data with computer vision capabilities, such as simultaneous localization and mapping (SLAM) and tracking, to map the environment. Autonomous systems can use these maps to segment roads, footpaths, or buildings and to detect and track humans and vehicles. For example, BMW uses computer vision capabilities in the Assisted Driving View (ADV) to depict surrounding vehicles and identify their types.

MATLAB supports end-to-end testing of BMW’s Assisted Driving View using real-world data.

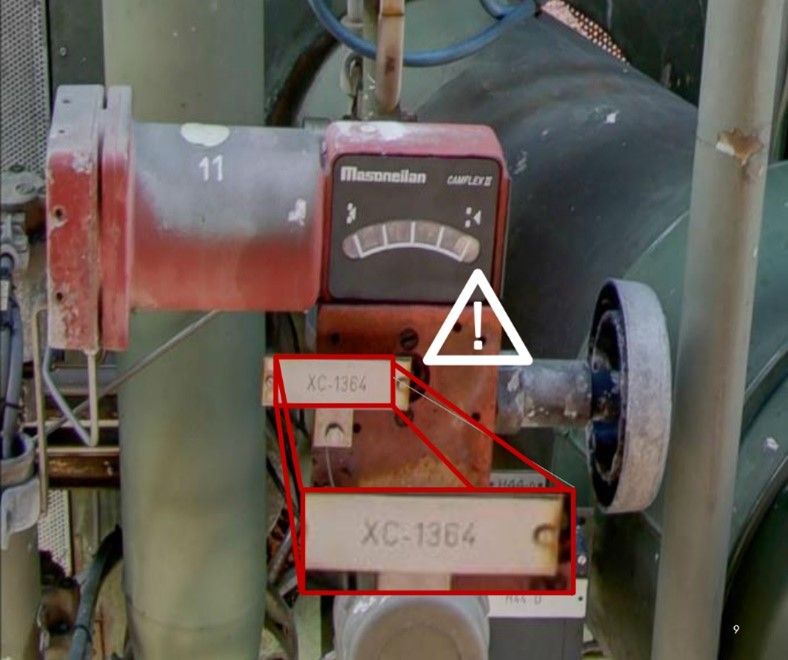

Industrial Applications

Computer vision is used in manufacturing applications such as part quality monitoring and infrastructure maintenance. For example, Shell used trained region-based convolutional neural networks (R-CNNs) to identify tags on machinery. TimkenSteel used the same capabilities for quality control, identifying inferior or defective parts during manufacturing.

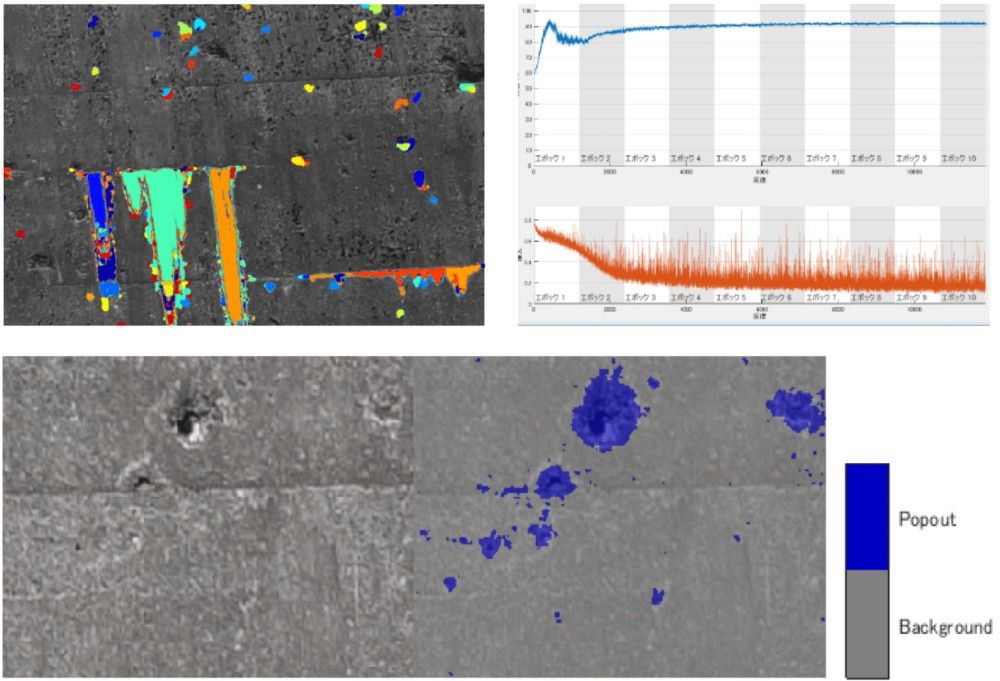

Construction and Agriculture

Computer vision is used in construction and agriculture to extract information from aerially captured infrastructure or terrain data. Computer vision capabilities such as spectral signature mapping, object detection, and segmentation are applied to analyze images, point clouds, or hyperspectral data from aerial platforms. Yachiyo Engineering in Japan uses these capabilities to detect damage to dams and bridges with semantic segmentation. Farmers also analyze crop health using drones that acquire hyperspectral images of their farms.

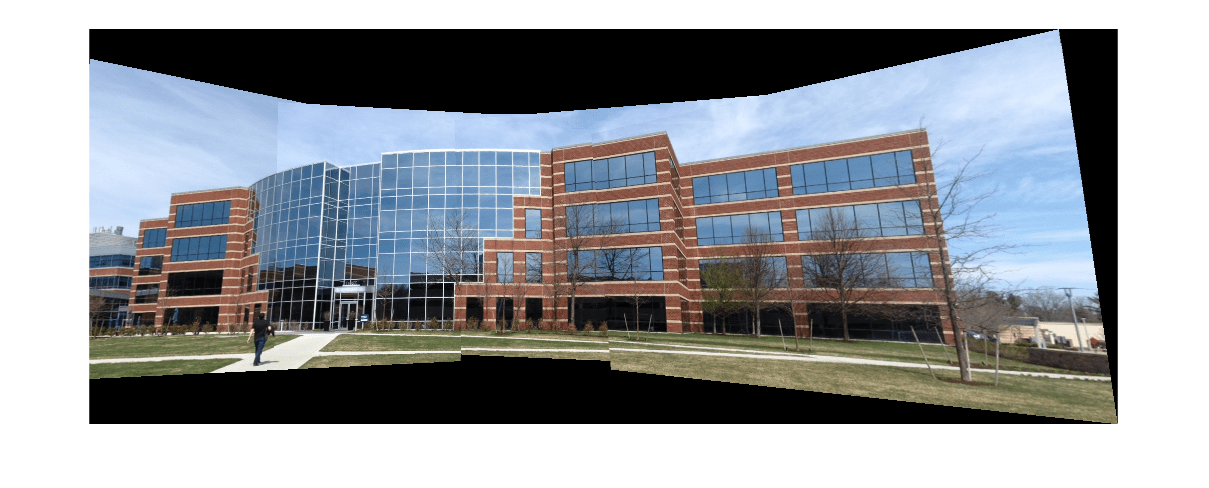

Photography

The use of computer vision in cameras and smartphones has grown substantially over the last decade. These devices use face detection and tracking to focus on faces and stitching algorithms to create panoramas. The devices also integrate optical character recognition (OCR) and barcode or QR code scanners to access stored information.

Creating a panoramic image with feature-based image registration techniques in MATLAB.

Image Processing Toolbox™, Computer Vision Toolbox™, and Lidar Toolbox™ in MATLAB provide apps, algorithms, and trained networks that you can use to build your computer vision capabilities. You can import image or point cloud data, preprocess it, and use built-in algorithms and deep learning networks to analyze the data. The toolboxes provide you with examples to get started.

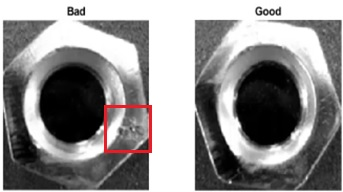

Defect Detection Using MATLAB

You can use Computer Vision Toolbox to detect anomalies and defects in objects such as machine parts and electronic circuits. You can increase the chances of detecting the right features by starting with image preprocessing algorithms in Image Processing Toolbox, such as correcting alignment, segmenting by color, and adjusting image intensity.

The defect detection step is often achieved using deep learning. To provide training data for deep learning, you can use the MATLAB image, video, or lidar labeler apps, which help you label data by creating semantic segmentation or instance segmentation masks. You can then train a deep learning network, either from scratch or by using transfer learning, and use the trained network or one of several pretrained networks to classify the objects based on anomalies or defects.

Bad vs. good nut detection using deep learning networks trained in MATLAB.

Object Detection and Tracking Using MATLAB

Object detection and tracking is one of the best-known uses of computer vision, with applications such as detecting vehicles or people, reading barcodes, and detecting objects in scenes. You can use the Deep Network Designer to build deep learning networks in MATLAB for applications such as detecting cars using a YOLO v3 network. You load labeled training data, preprocess the data, define and train the YOLO v3 network, and evaluate its precision and miss rate against ground truth data. You can then use the network to detect cars and display bounding boxes around them.

Detecting cars using YOLO v3 generated using the Deep Network Designer in MATLAB.

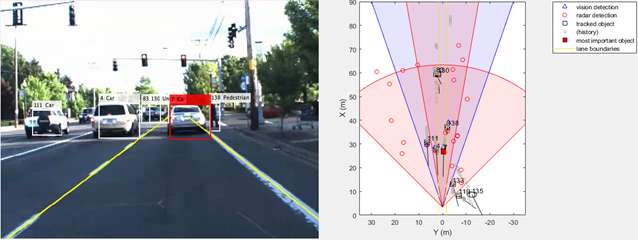
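Evaluating a detector against ground truth usually starts from the intersection over union (IoU) of predicted and labeled bounding boxes. The plain-Python sketch below shows one simple way to derive precision and miss rate at a single IoU threshold. The `iou` and `evaluate` helpers, the greedy matching, the box values, and the 0.5 threshold are assumptions for illustration. The sketch ignores detection confidence scores, so it does not reproduce the averaged metrics that toolbox evaluation functions report.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def evaluate(detections, ground_truth, iou_threshold=0.5):
    """Return (precision, miss_rate) using greedy one-to-one matching."""
    unmatched = list(ground_truth)
    true_positives = 0
    for det in detections:
        best = max(unmatched, key=lambda gt: iou(det, gt), default=None)
        if best is not None and iou(det, best) >= iou_threshold:
            unmatched.remove(best)  # each labeled box can match only once
            true_positives += 1
    precision = true_positives / len(detections) if detections else 0.0
    miss_rate = len(unmatched) / len(ground_truth) if ground_truth else 0.0
    return precision, miss_rate


# Two labeled cars; the detector finds one and reports one false alarm.
ground_truth = [(10, 10, 50, 50), (60, 60, 100, 100)]
detections = [(12, 12, 50, 50), (200, 200, 240, 240)]

precision, miss_rate = evaluate(detections, ground_truth)
print(precision, miss_rate)
```

Precision is the fraction of detections that correspond to a labeled object, and miss rate is the fraction of labeled objects that no detection matched.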

Using Computer Vision and Simulink in Autonomous System Simulation

You can use object detection and tracking results from computer vision in a robotic or autonomous system to make decisions. The autonomous emergency braking (AEB) with sensor fusion example demonstrates the ease of building Simulink models that integrate computer vision capabilities. The model has two parts: a computer vision and sensor fusion model to detect obstacles in front of a vehicle, and a forward collision warning (FCW) system to warn the driver and automatically apply the brakes. This shows how you can use Simulink to integrate computer vision algorithms into a broader system simulation.

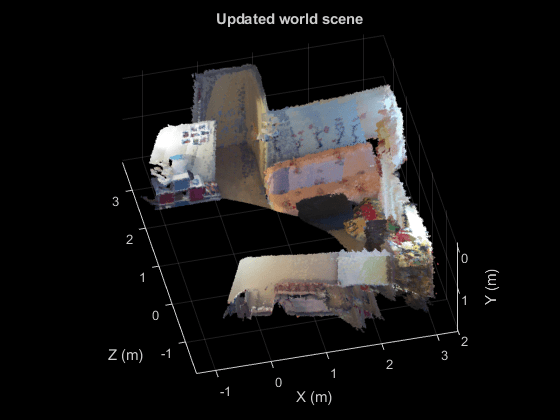

Localization and Mapping with Computer Vision Toolbox

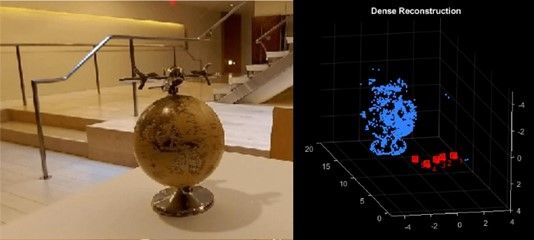

You can use computer vision in MATLAB to estimate camera positions and map the environment using visual simultaneous localization and mapping (vSLAM), to create 3D models of objects using structure from motion (SfM), and to estimate depth.

You can estimate the position of a stereo camera pair while mapping the environment using built-in capabilities in MATLAB such as imageDatastore and bagOfFeatures. You initialize the map by identifying matching features between the pair of images. You then estimate the camera position and the feature positions on the map, using bundle adjustment to refine the position and orientation of the camera as it moves through the scene.

Matching features between a stereo camera pair using ORB-SLAM2 in Computer Vision Toolbox.
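For the depth estimation mentioned above, a calibrated and rectified stereo pair gives depth directly from the horizontal shift (disparity) of a matched feature between the left and right images: depth equals focal length times baseline divided by disparity. The plain-Python sketch below applies that relationship. The function name and the camera parameters are hypothetical, and the formula assumes an ideal rectified pinhole stereo rig.

```python
import math


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters of a point seen by a rectified stereo pair.

    disparity_px: horizontal shift of the matched feature, in pixels
    focal_length_px: focal length expressed in pixels
    baseline_m: distance between the two camera centers, in meters
    """
    if disparity_px <= 0:
        return math.inf  # zero disparity corresponds to a point at infinity
    return focal_length_px * baseline_m / disparity_px


# Made-up rig: 700-pixel focal length, 10 cm baseline.
for disparity in (70, 35, 7):
    depth = depth_from_disparity(disparity, focal_length_px=700, baseline_m=0.1)
    print(f"disparity {disparity:>2} px -> depth {depth:.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for nearby ones.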

Object Counting

You can also use computer vision to count objects in an image or video. In the cell counting example, you apply morphological operators to segment the cells, find cell centers using blob analysis, and count the number of centers found. You then repeat this process for every frame in the video.

Cell counting in MATLAB using morphological operators and blob analysis.
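The blob analysis step can be pictured as connected-component labeling on a binary mask: each group of touching foreground pixels is one object, and its center is the mean of its pixel coordinates. The plain-Python sketch below assumes segmentation has already produced the mask. The `find_blob_centers` helper, the 4-connectivity choice, and the `min_area` noise filter are assumptions for illustration rather than the method used in the MATLAB example.

```python
from collections import deque


def find_blob_centers(mask, min_area=1):
    """Return (row, col) centers of 4-connected blobs in a binary mask.

    Blobs with fewer than min_area pixels are treated as noise.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    centers = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill to collect one blob.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:
                center_row = sum(p[0] for p in pixels) / len(pixels)
                center_col = sum(p[1] for p in pixels) / len(pixels)
                centers.append((center_row, center_col))
    return centers


# Toy mask: a 2x2 blob, a 2-pixel blob, and a single noisy pixel.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0],
]

centers = find_blob_centers(mask, min_area=2)
print(len(centers), centers)
```

For a video, you would run the same function on the mask of each frame and record the count per frame.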

Apps in MATLAB, such as the Image Segmenter and Color Thresholder apps, provide an interactive user interface to segment objects in an image. The Image Region Analyzer app helps count objects in an image and calculate their properties, such as area and center of mass.