Visualization and Interpretability

Plot training progress, assess accuracy, explain predictions, and visualize features learned by a network

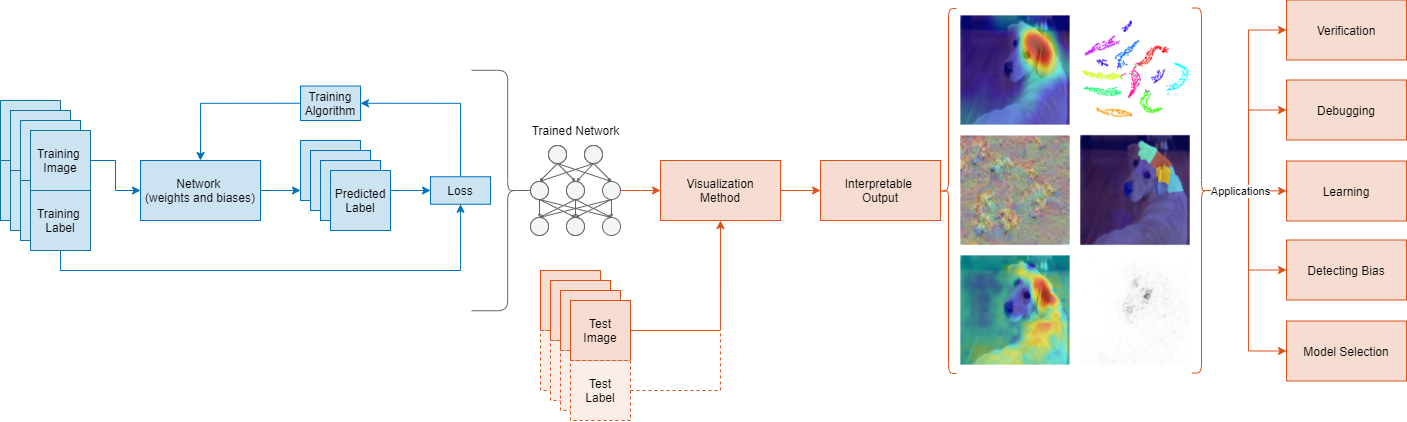

Monitor training progress using built-in plots of network accuracy and loss. Investigate trained networks using visualization techniques such as Grad-CAM, occlusion sensitivity, LIME, and deep dream.

Deep Learning Visualization Methods

Apps

| Deep Network Designer | Design and visualize deep learning networks |

Objects

| trainingProgressMonitor | Monitor and plot training progress for deep learning custom training loops (Since R2022b) |

Functions

Properties

| ConfusionMatrixChart Properties | Confusion matrix chart appearance and behavior |

| ROCCurve Properties | Receiver operating characteristic (ROC) curve appearance and behavior (Since R2022b) |

Topics

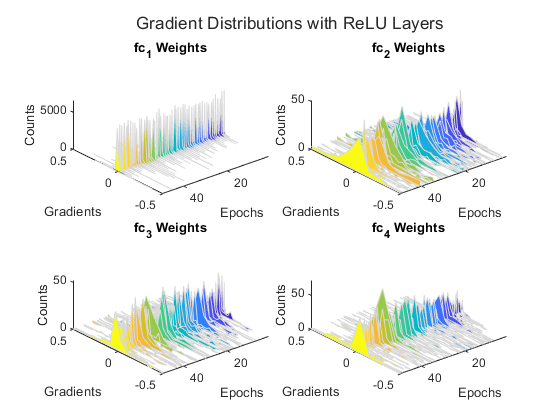

Training Progress and Performance

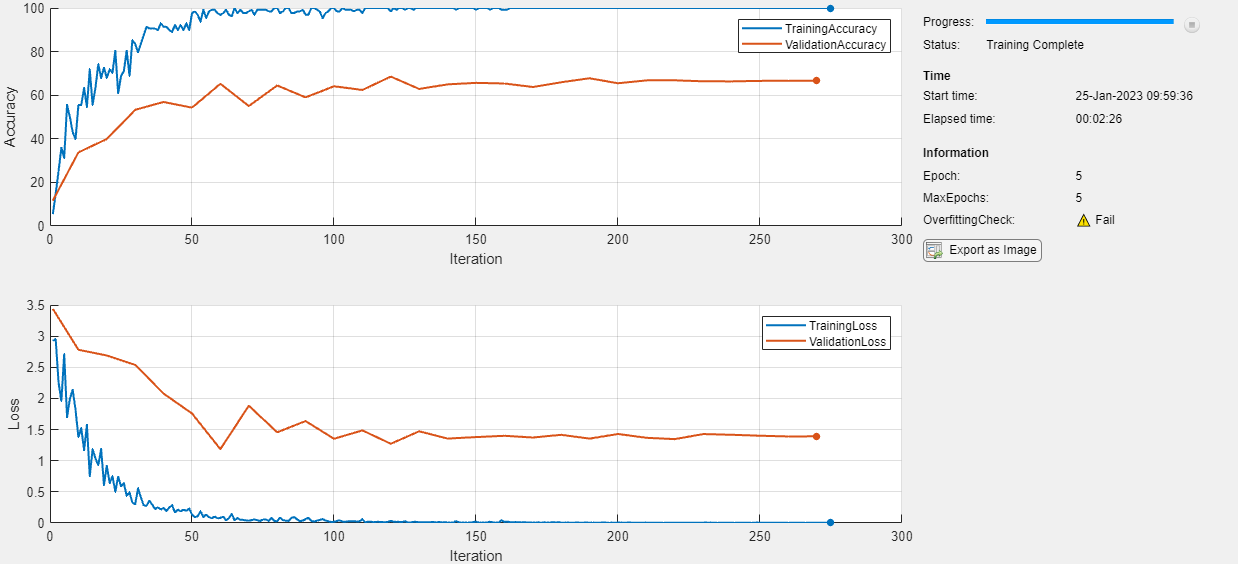

- Monitor Deep Learning Training Progress

This example shows how to monitor the training progress of deep learning networks.

- Monitor Custom Training Loop Progress

Track and plot custom training loop progress.

- Monitor GAN Training Progress and Identify Common Failure Modes

Learn how to diagnose and fix some of the most common failure modes in GAN training.

- ROC Curve and Performance Metrics

Use rocmetrics to examine the performance of a classification algorithm on a test data set.

- Compare Deep Learning Models Using ROC Curves

This example shows how to use receiver operating characteristic (ROC) curves to compare the performance of deep learning models.

- Define Custom Metric Function

Define custom deep learning metrics using functions.

- Define Custom Deep Learning Metric Object

Learn how to define custom deep learning metrics using custom classes.

- Define Custom F-Beta Score Metric Object

This example shows how to define a generic F-beta score metric.
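As a rough illustration of what tracking a custom training loop can look like, the sketch below drives a trainingProgressMonitor object with a synthetic loss curve. It assumes R2022b or later with Deep Learning Toolbox installed. The loop sizes, the variable names, and the decaying loss formula are placeholders chosen for illustration; in a real loop the loss would come from the model's forward and backward pass.

```matlab
% Sketch only: the loss is simulated, not produced by a real network.
numEpochs = 5;
numIterationsPerEpoch = 20;
numIterations = numEpochs*numIterationsPerEpoch;

% Create the monitor with one metric, one info field, and an x-axis label.
monitor = trainingProgressMonitor( ...
    Metrics="TrainingLoss", ...
    Info="Epoch", ...
    XLabel="Iteration");

iteration = 0;
epoch = 0;
while epoch < numEpochs && ~monitor.Stop
    epoch = epoch + 1;
    for i = 1:numIterationsPerEpoch
        if monitor.Stop
            break   % the Stop button was pressed in the monitor window
        end
        iteration = iteration + 1;

        % Placeholder for a real training step (assumption, not toolbox code).
        loss = exp(-iteration/30) + 0.05*rand;

        % Plot the metric, update the info panel, and advance the progress bar.
        recordMetrics(monitor,iteration,TrainingLoss=loss);
        updateInfo(monitor,Epoch=string(epoch) + " of " + string(numEpochs));
        monitor.Progress = 100*iteration/numIterations;
    end
end
```

Checking monitor.Stop in both loops lets the Stop button in the monitor window end training between iterations rather than only at epoch boundaries.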

Interpretability

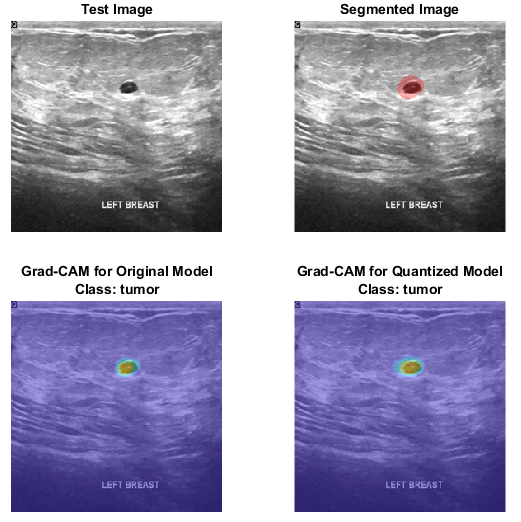

- Explore Network Predictions Using Deep Learning Visualization Techniques

This example shows how to investigate network predictions using deep learning visualization techniques. - Understand Network Predictions Using Occlusion

This example shows how to use occlusion sensitivity maps to understand why a deep neural network makes a classification decision. - Interpret Deep Network Predictions on Tabular Data Using LIME

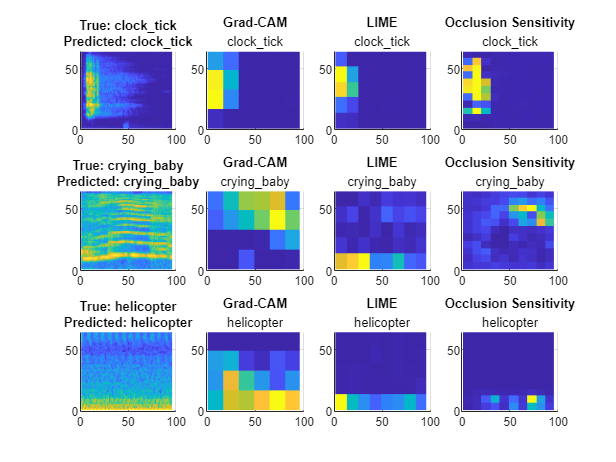

This example shows how to use the locally interpretable model-agnostic explanations (LIME) technique to understand the predictions of a deep neural network classifying tabular data. - Investigate Spectrogram Classifications Using LIME

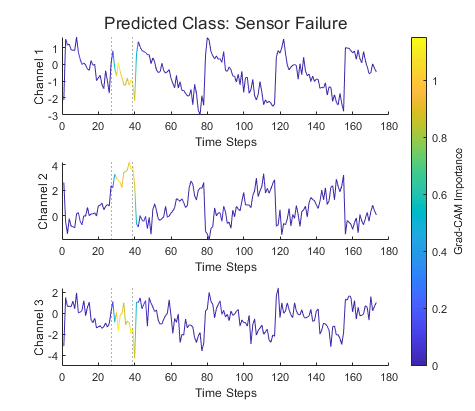

This example shows how to use locally interpretable model-agnostic explanations (LIME) to investigate the robustness of a deep convolutional neural network trained to classify spectrograms. - Investigate Classification Decisions Using Gradient Attribution Techniques

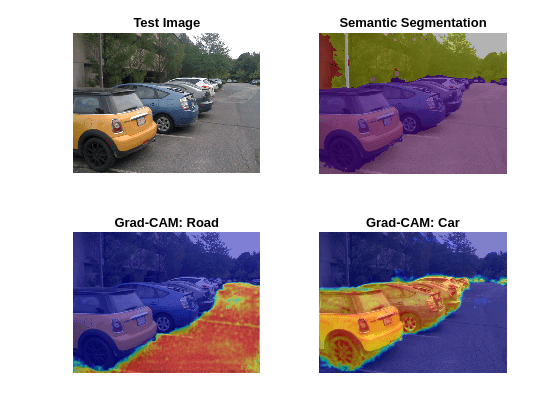

This example shows how to use gradient attribution maps to investigate which parts of an image are most important for classification decisions made by a deep neural network. - Investigate Network Predictions Using Class Activation Mapping

This example shows how to use class activation mapping (CAM) to investigate and explain the predictions of a deep convolutional neural network for image classification. - Visualize Image Classifications Using Maximal and Minimal Activating Images

This example shows how to use a data set to find out what activates the channels of a deep neural network. - View Network Behavior Using tsne

This example shows how to use thetsnefunction to view activations in a trained network. - Visualize Activations of LSTM Network

This example shows how to investigate and visualize the features learned by LSTM networks by extracting the activations. - Visualize Activations of a Convolutional Neural Network

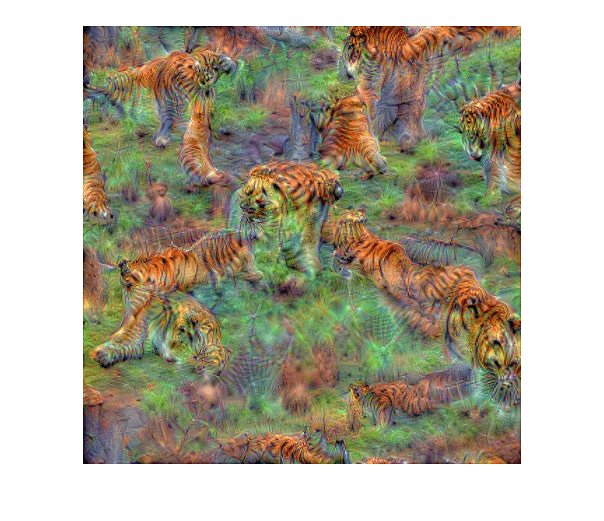

This example shows how to feed an image to a convolutional neural network and display the activations of different layers of the network. - Visualize Features of a Convolutional Neural Network

This example shows how to visualize the features learned by convolutional neural networks. - Deep Learning Visualization Methods

Learn about and compare deep learning visualization methods.
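To make the idea behind occlusion sensitivity concrete, here is a minimal from-scratch sketch in base MATLAB. It does not call the toolbox's occlusion function. Instead, a hypothetical scoreFcn stands in for a network's class score, and the image, mask size, stride, and zero-valued mask are all simplifying assumptions chosen so the result is easy to check by eye.

```matlab
% Toy input: an 8-by-8 image with one bright 2-by-2 patch.
img = zeros(8,8);
img(3:4,5:6) = 1;

% Hypothetical stand-in for a class score; it depends only on the bright patch.
scoreFcn = @(x) sum(x(3:4,5:6),"all");
baseScore = scoreFcn(img);

maskSize = 2;   % side length of the occluding square (assumption)
stride = 2;     % step between mask positions (assumption)
sensitivity = zeros(size(img));

for r = 1:stride:size(img,1)-maskSize+1
    for c = 1:stride:size(img,2)-maskSize+1
        rows = r:r+maskSize-1;
        cols = c:c+maskSize-1;

        % Occlude one patch with zeros and measure how far the score drops.
        occluded = img;
        occluded(rows,cols) = 0;
        sensitivity(rows,cols) = baseScore - scoreFcn(occluded);
    end
end

% Large values mark regions the score depends on most.
imagesc(sensitivity)
axis image
colorbar
```

Only the mask position that covers the bright patch changes the score, so the map is nonzero there and zero elsewhere. With a real network, scoreFcn would be replaced by the predicted score for the class of interest.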