Analyze N-dimensional Convex Polyhedra

This submission contains a set of files for analyzing N-dimensional convex polyhedra. It is intended for fairly low dimensions N -- basically low enough so that vertex and facet enumeration using MATLAB's convhulln() command is tractable. For now, it is also limited to bounded polyhedra (i.e., polytopes). A bounded convex polyhedron can be represented either as the convex hull of a finite set of vertices V(i,:) or by using a combination of linear constraint equalities and inequalities,

A*x<=b,

Aeq*x=beq

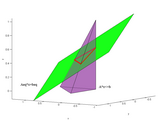

Here, A and Aeq are MxN and PxN matrices while b and beq are Mx1 and Px1 column vectors, respectively. The (in)equality representation expresses the polyhedron as the intersection of two regions. One region is a solid N-dimensional shape, described by the inequalities, while the other is a possibly lower-dimensional sub-space, described by the equalities. The screenshot above illustrates this, showing how a triangle in 3D can be represented as the intersection of a tetrahedron (a solid shape in R^3) and a plane.

The package contains tools for converting between the two representations (see VERT2LCON and LCON2VERT) as well as for taking intersections and unions of polyhedra in either form (see intersectionHull and unionHull).

The package was inspired by Michael Kleder's vert2con and con2vert functions, which were limited to N-dimensional polyhedra possessing non-zero volume in R^N.Thus, for example, they could not handle a triangle floating in R^3 as depicted in the above screenshot. Although a triangle has non-zero volume (i.e., area) in R^2 it has zero volume in R^3. The extension made in this package covers general bounded polyhedra. NOTE: however, when using linear constraint data A, b, Aeq, beq to represent a given polyhedron, it is important that the inequalities A*x<=b still be chosen to correspond with a region of non-zero N-dimensional volume. Zero-volume polyhedra are captured by adding equality constraints Aeq*x=beq.

EXAMPLE:

Consider the 3D polyhedron defined by x+y+z=1, x>=0, y>=0, z>=0. Equivalent constraint in/equalities can be obtained from the known vertices by doing,

>> [A,b,Aeq,beq]=vert2lcon(eye(3))

A =

0.4082 -0.8165 0.4082

0.4082 0.4082 -0.8165

-0.8165 0.4082 0.4082

b =

0.4082

0.4082

0.4082

Aeq =

0.5774 0.5774 0.5774

beq =

0.5774

Conversely, the vertices can be obtained by doing,

>> V=lcon2vert(A,b,Aeq,beq)

V =

1.0000 0.0000 0.0000

0.0000 0.0000 1.0000

-0.0000 1.0000 0.0000

When an interior point of the polyhedron is known a priori, one can instead use QLCON2VERT, a quicker version of LCON2VERT which uses the known point to get around some computationally intensive steps.

Cite As

Matt J (2025). Analyze N-dimensional Convex Polyhedra (https://www.mathworks.com/matlabcentral/fileexchange/30892-analyze-n-dimensional-convex-polyhedra), MATLAB Central File Exchange. Retrieved .

MATLAB Release Compatibility

Platform Compatibility

Windows macOS LinuxCategories

Tags

Acknowledgements

Inspired by: CON2VERT - constraints to vertices, VERT2CON - vertices to constraints

Community Treasure Hunt

Find the treasures in MATLAB Central and discover how the community can help you!

Start Hunting!Discover Live Editor

Create scripts with code, output, and formatted text in a single executable document.

| Version | Published | Release Notes | |

|---|---|---|---|

| 1.9.0.2 | Edited descriptive text |

||

| 1.9.0.1 | Editing of descriptive text only. |

||

| 1.9.0.0 | Bug fix - the case where the equality constraint system Aeq*x=beq had a unique solution was not handled properly |

||

| 1.8.0.0 | Minor grammatical and spelling edits to description page.

|

||

| 1.7.0.0 | added screenshot |

||

| 1.6.0.0 | * improved error checking in lcon2vert

|

||

| 1.5.0.0 | If lcon2vert fails to find an initial interior point after many iterations of the alg it uses, it will now quit with V=[]. |

||

| 1.4.0.0 | Various bug fixes and improvements in robustness. These address failure cases discovered recently by users. |

||

| 1.3.0.0 | *Further robustness of lcon2vert

|

||

| 1.2.0.0 | Improved the reliability of CON2VERT subroutine, both in terms of performance and of error reporting. |

||

| 1.1.0.0 | added LCON2VERT which does the inverse of VERT2CON |

||

| 1.0.0.0 |