Total Least Squares Method

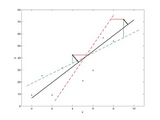

We present a MATLAB toolbox for solving basic modeling problems with the Total Least Squares (TLS) method. Illustrative examples show how to use TLS for:

- linear regression model

- nonlinear regression model

- fitting data in 3D space

- identification of a dynamical system
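To make the first item concrete, here is a minimal sketch of a TLS (orthogonal) straight-line fit in two dimensions. It is written in plain Python and is not code from the toolbox: the function name `tls_line` and its return convention are assumptions chosen for illustration. Unlike ordinary least squares, which minimizes vertical residuals only, the fit below minimizes the sum of squared perpendicular distances from the points to the line, using the closed-form orientation of the principal axis of the centered data.

```python
import math


def tls_line(xs, ys):
    """Fit y = intercept + slope * x by total least squares.

    Hypothetical helper for illustration, not part of the TLS toolbox.
    Returns (slope, intercept). Raises ValueError when the best-fit
    line is vertical or the data are degenerate.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")

    # Centroid: the TLS line always passes through it.
    mx = sum(xs) / n
    my = sum(ys) / n

    # Centered second moments (scatter matrix entries).
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))

    if sxx + syy == 0.0:
        raise ValueError("all points coincide; the line is undefined")

    # Angle of the major principal axis of the scatter matrix.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)

    if abs(math.cos(theta)) < 1e-12:
        raise ValueError("best-fit line is vertical; slope is undefined")

    slope = math.tan(theta)
    intercept = my - slope * mx
    return slope, intercept


if __name__ == "__main__":
    # Points lying exactly on y = 2x + 1, so the fit should be
    # approximately slope 2 and intercept 1 (up to rounding).
    print(tls_line([0, 1, 2, 3], [1, 3, 5, 7]))
```

Because the fit treats both coordinates symmetrically, swapping the roles of x and y yields the reciprocal slope, which is not true of ordinary least squares in general.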

This toolbox requires two additional functions that are already published on MATLAB Central File Exchange. They are installed by the supporting install package 'requireFEXpackage', which is included in the TLS package. For more details, see the ReadMe.txt file.

Authors: Ivo Petras, Dagmar Bednarova, Tomas Skovranek, and Igor Podlubny

(Technical University of Kosice, Slovakia)

A detailed description of the functions, examples, and demos can be found in:

Ivo Petras and Dagmar Bednarova: Total Least Squares Approach to Modeling: A Matlab Toolbox, Acta Montanistica Slovaca, vol. 15, no. 2, 2010, pp. 158-170.

(http://actamont.tuke.sk/pdf/2010/n2/8petras.pdf)

Cite As

Ivo Petras (2024). Total Least Squares Method (https://www.mathworks.com/matlabcentral/fileexchange/31109-total-least-squares-method), MATLAB Central File Exchange. Retrieved .

Platform Compatibility

Windows, macOS, Linux

Acknowledgements

Inspired by: fminsearchbnd, fminsearchcon, Orthogonal Linear Regression in 3D-space by using PCA, Require FEX package
