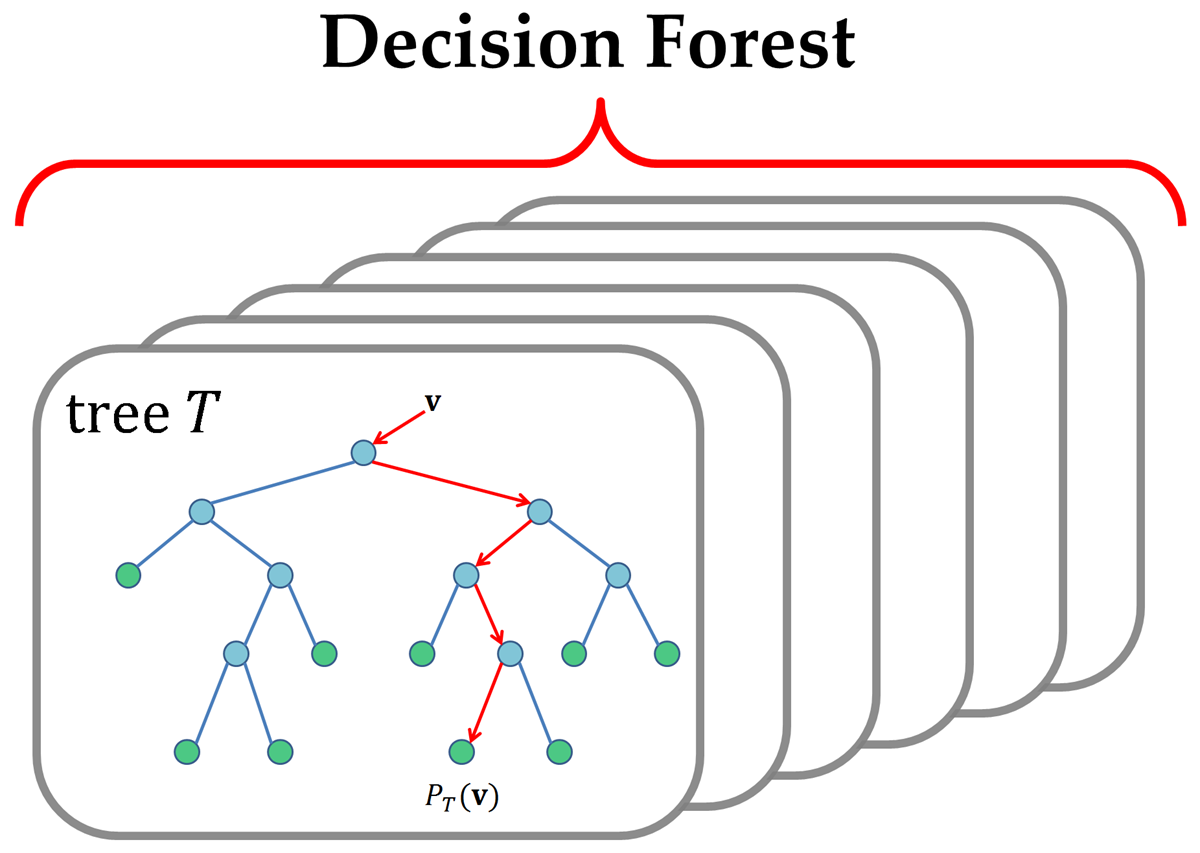

Decision Tree and Decision Forest

Overview

This package implements decision tree and decision forest techniques in C++. It can be compiled with MEX and called from MATLAB/Octave. The implementation is efficient and has been used in the following papers:

[1] Quan Wang, Yan Ou, A. Agung Julius, Kim L. Boyer, and Min Jun Kim, "Tracking Tetrahymena Pyriformis Cells using Decision Trees", 2012 21st International Conference on Pattern Recognition (ICPR), pages 1843-1847, 11-15 Nov. 2012.

[2] Quan Wang, Dijia Wu, Le Lu, Meizhu Liu, Kim L. Boyer, and Shaohua Kevin Zhou, "Semantic Context Forests for Learning-Based Knee Cartilage Segmentation in 3D MR Images", MICCAI 2013: Workshop on Medical Computer Vision.
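
For readers new to the technique, a decision tree typically chooses each split by how much it reduces label entropy. The following is a minimal C++ sketch of that criterion for a single threshold split. The function names `entropy` and `entropyDecrease` and their signatures are hypothetical and are not taken from this package's source; the package's own `getEntropyDecrease()` may be organized quite differently.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <vector>

// Shannon entropy (in bits) of a set of integer class labels.
double entropy(const std::vector<int>& labels) {
    if (labels.empty()) return 0.0;
    std::map<int, std::size_t> counts;
    for (int y : labels) ++counts[y];
    double h = 0.0;
    for (const auto& kv : counts) {
        double p = static_cast<double>(kv.second) / labels.size();
        h -= p * std::log2(p);
    }
    return h;
}

// Entropy decrease (information gain) obtained by splitting the samples
// into "feature <= threshold" and "feature > threshold".
// Hypothetical helper for illustration only, not the package's API.
double entropyDecrease(const std::vector<double>& feature,
                       const std::vector<int>& labels,
                       double threshold) {
    assert(feature.size() == labels.size());
    if (labels.empty()) return 0.0;
    std::vector<int> left, right;
    for (std::size_t i = 0; i < feature.size(); ++i) {
        (feature[i] <= threshold ? left : right).push_back(labels[i]);
    }
    const double n = static_cast<double>(labels.size());
    // Parent entropy minus the size-weighted entropy of the two children.
    return entropy(labels)
         - (left.size() / n) * entropy(left)
         - (right.size() / n) * entropy(right);
}
```

A tree builder would evaluate this quantity over candidate features and thresholds and keep the split with the largest decrease. Because the sketch works on arbitrary integer labels, it covers the multi-class case as well as the binary one.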

This library is also available at MathWorks MATLAB Central:

Copyright

Copyright (C) 2013 Quan Wang wangq10@rpi.edu, Signal Analysis and Machine Perception Laboratory, Department of Electrical, Computer, and Systems Engineering, Rensselaer Polytechnic Institute, Troy, NY 12180, USA

You are free to use this software for academic purposes if you cite our papers.

For commercial use, please contact the authors.

Cite As

Quan Wang (2024). Decision Tree and Decision Forest (https://github.com/wq2012/DecisionForest/releases/tag/v1.7), GitHub. Retrieved .

Platform Compatibility

Windows, macOS, Linux

| Version | Release Notes |
|---|---|
| 1.7.0.0 | See release notes for this release on GitHub: https://github.com/wq2012/DecisionForest/releases/tag/v1.7 |
| 1.5.0.0 | Changed the positions of several delete[] commands to optimize memory use. |
| 1.4.0.0 | Added decision forest functionality. |
| 1.3.0.0 | Better encapsulation of the HashTable class. |
| 1.2.0.0 | Optimized the memory use of the getEntropyDecrease() function. |
| 1.1.0.0 | Rewrote the code in C++/MEX. Generalized to multi-class. |
| 1.0.0.0 | |