worldpointset

Manage 3-D to 2-D point correspondences

Description

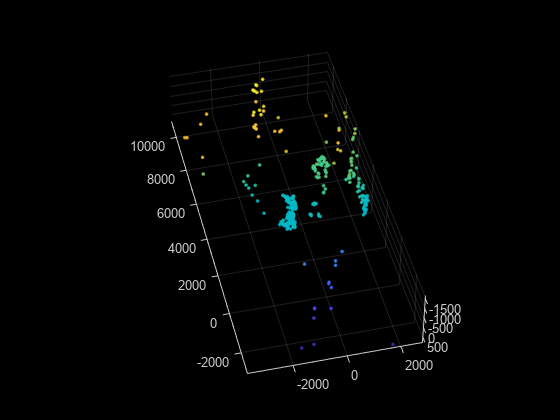

The worldpointset object stores correspondences between 3-D world points and 2-D image points across camera views. You can use a worldpointset object with an imageviewset object to manage image and map data for structure-from-motion, visual odometry, and visual simultaneous localization and mapping (SLAM).

Creation

Syntax

wpSet = worldpointset

Description

wpSet = worldpointset creates a worldpointset object.

Properties

Object Functions

addWorldPoints | Add world points to world point set |

removeWorldPoints | Remove world points from world point set |

updateWorldPoints | Update world points in world point set |

selectWorldPoints | Select world points from world point set |

addCorrespondences | Update world points in a world point set |

removeCorrespondences | Remove 3-D to 2-D correspondences from world point set |

updateCorrespondences | Update 3-D to 2-D correspondences in world point set |

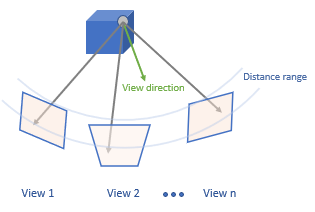

updateLimitsAndDirection | Update distance limits and viewing direction |

updateRepresentativeView | Update representative view ID and corresponding feature index |

findViewsOfWorldPoint | Find views that observe a world point |

findWorldPointsInTracks | Find world points that correspond to point tracks |

findWorldPointsInView | Find world points observed in view |
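
The following is a minimal sketch of how a few of the object functions listed above might be combined. It assumes Computer Vision Toolbox is installed. The point coordinates, the view ID, and the one-to-one pairing between feature indices and point indices are made up for illustration and do not come from real image data. The argument order follows the commonly documented call forms; check each function's reference page before relying on it.

```matlab
% Hypothetical data: five 3-D world points, one [x y z] row per point.
worldPoints = [0 0 5; 1 0 5; 0 1 6; 1 1 6; 0.5 0.5 7];

% Create an empty world point set.
wpSet = worldpointset;

% Add the points. The second output holds the index assigned to each point.
[wpSet, pointIndices] = addWorldPoints(wpSet, worldPoints);

% Record that the view with ID 1 observes every point.
% Assumption for illustration only: feature k in view 1 matches world point k.
viewId = 1;
featureIndices = pointIndices;
wpSet = addCorrespondences(wpSet, viewId, pointIndices, featureIndices);

% Query which world points view 1 observes, and through which features.
[idxInView, featIdxInView] = findWorldPointsInView(wpSet, viewId);

% Remove the first world point from the set.
wpSet = removeWorldPoints(wpSet, pointIndices(1));
```

In a real pipeline, the feature indices would typically come from feature matching between views stored in an imageviewset object, rather than from a fixed one-to-one pairing as assumed here.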