Object-Detection-and-Classification-A-Joint-Selection-and-Fu

Object Detection and Classification: A Joint Selection and Fusion Strategy of Deep Convolutional Neural Network and SIFT Point Features

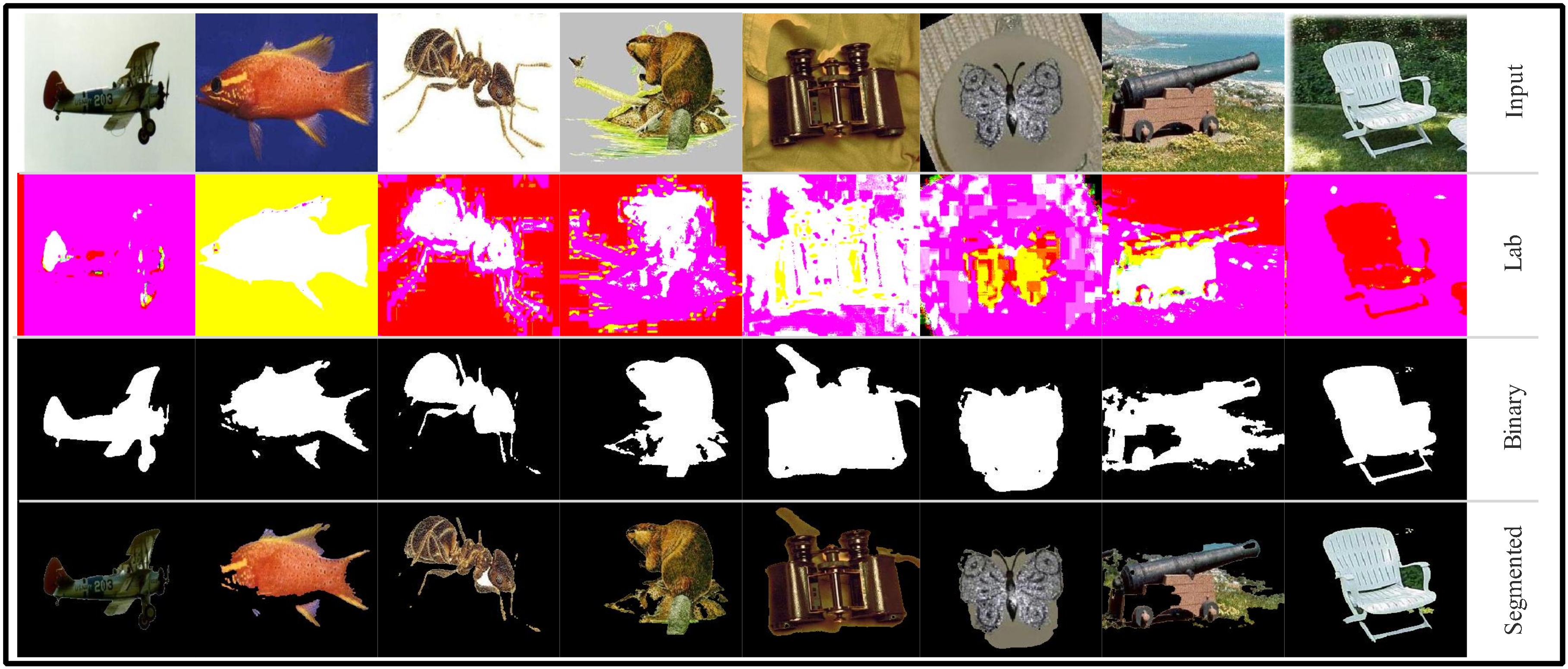

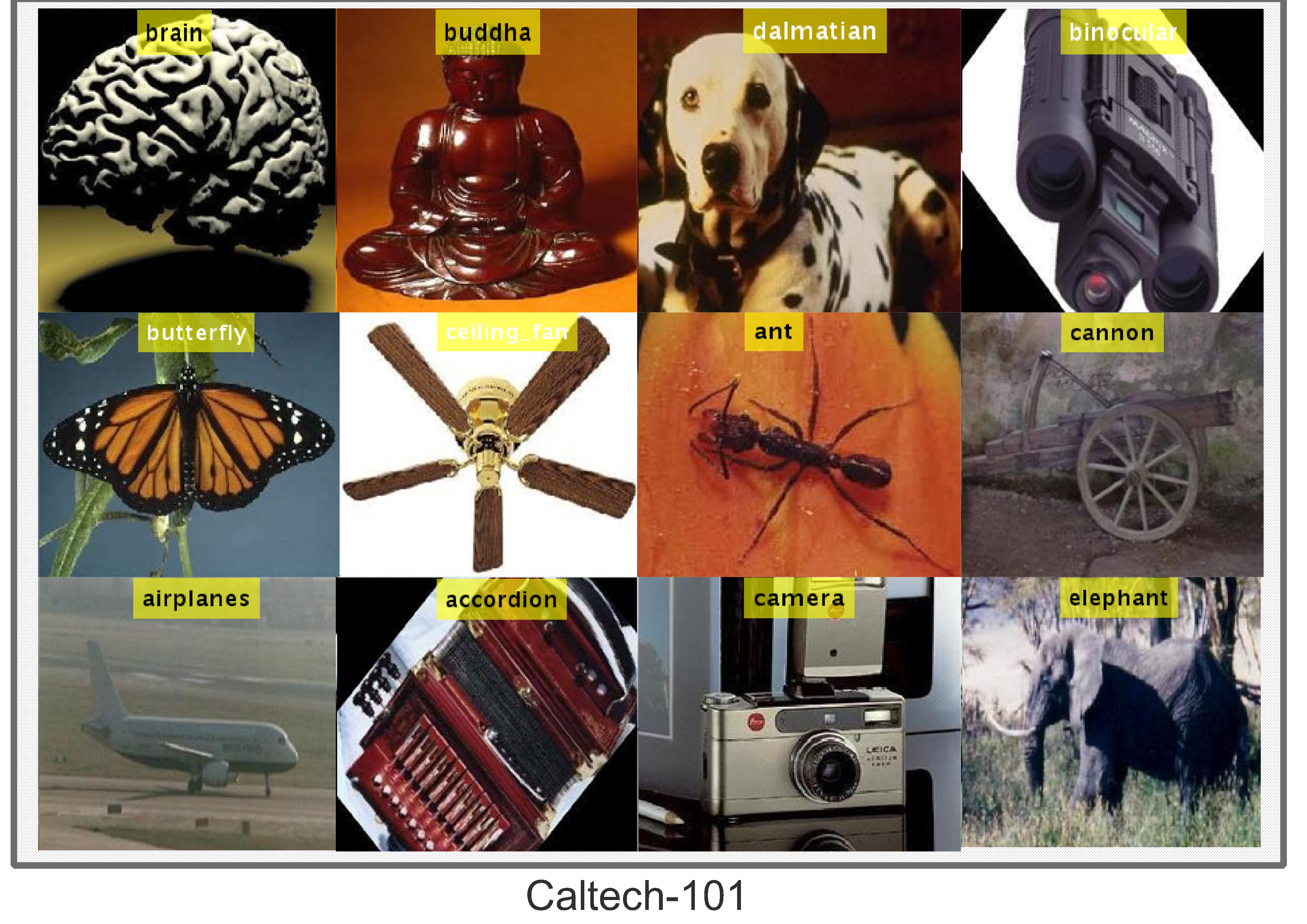

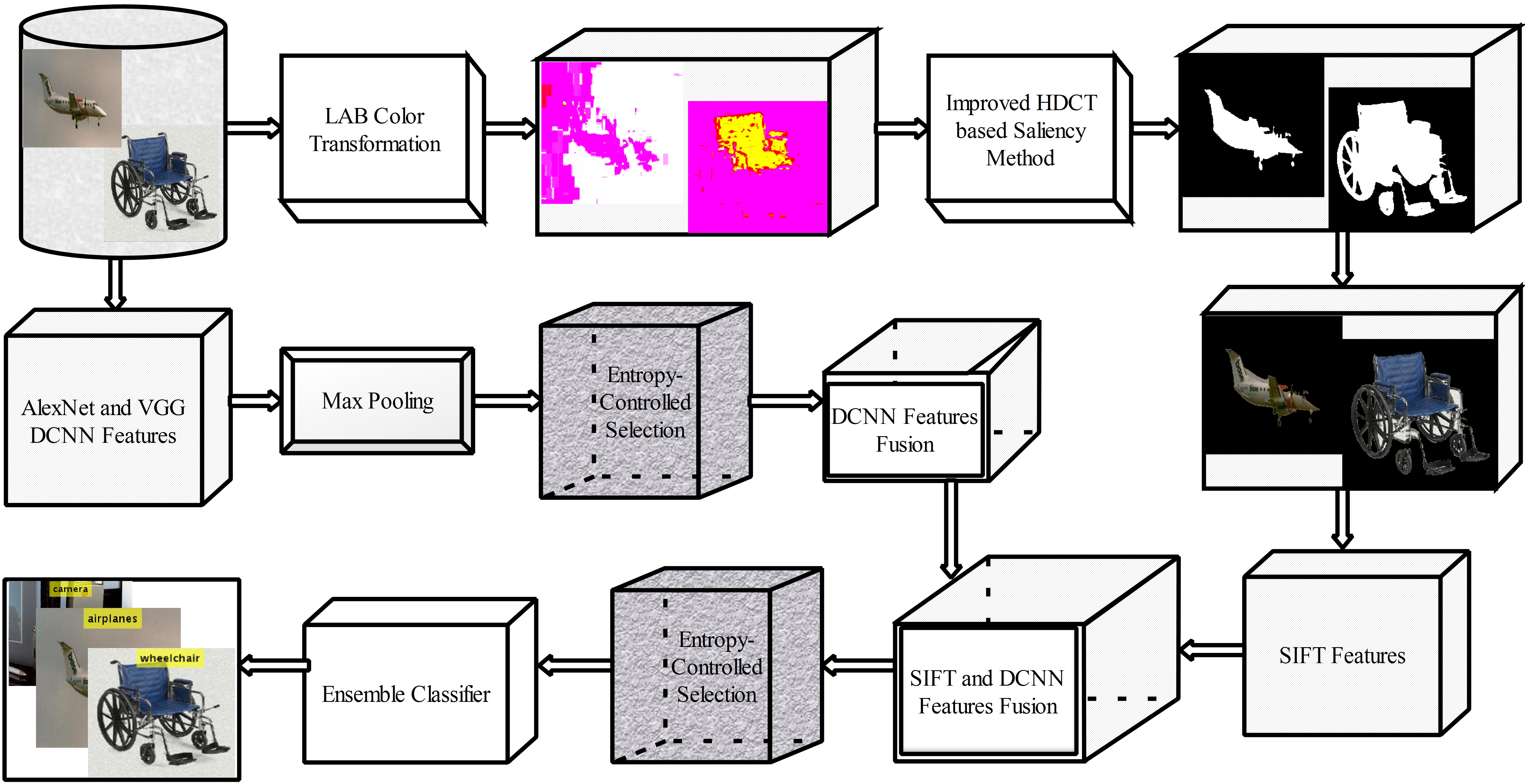

Object detection is an important task in computer vision and has gained much attention over the last two decades because of emerging applications such as video surveillance and pedestrian detection. In this research, we address complex object detection and classification on three well-known datasets: Caltech101, PASCAL 3D, and a 3D dataset. These datasets contain hundreds of object classes and thousands of images. To address the challenges these datasets pose, we propose a new method for object detection and classification that combines deep convolutional neural network (DCNN) feature extraction with SIFT point features. The proposed method consists of two major steps, which are executed in parallel. In the first step, SIFT point features are extracted from the segmented object mapped onto the RGB image. In the second step, DCNN features are extracted from pre-trained deep models, AlexNet and VGG. The SIFT point and DCNN features are then combined into one matrix by a fusion method, and the fused matrix is used for classification. Each step is described in detail below, and the flow diagram of the proposed method is shown in Figure 1.

Segmentation Using Improved Saliency Method

SIFT Features

The SIFT features are computed in four steps. In the first step, local key points that are important and stable for the given image are detected, and each key point is described by samples of the local image region around it, taken relative to its scale-space coordinates. In the second step, weak key points are removed using a specific threshold value. In the third step, each key point is assigned an orientation based on the local image gradient directions. Finally, a 1 × 128-dimensional feature vector is obtained for each key point, and bilinear interpolation is performed to improve the robustness of the features.
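The final descriptor step can be illustrated with a simplified, pure-Python sketch. It assumes a 16 × 16 grayscale patch that is already centred on a key point and rotated to its assigned orientation, splits it into a 4 × 4 grid of cells, and accumulates an 8-bin gradient-orientation histogram per cell (4 × 4 × 8 = 128 values). The function name `sift_like_descriptor` is hypothetical, and Gaussian weighting and interpolation between bins are deliberately omitted, so this is not the exact SIFT implementation used in the original MATLAB code.

```python
import math


def sift_like_descriptor(patch):
    """Return a 128-D SIFT-style descriptor for a 16x16 grayscale patch.

    Simplified sketch (hypothetical helper): assumes the patch is already
    centred on a key point and rotated to its dominant orientation.
    Gaussian weighting and interpolation between bins are omitted.
    """
    if len(patch) != 16 or any(len(row) != 16 for row in patch):
        raise ValueError("expected a 16x16 patch")
    hist = [0.0] * 128  # 4x4 cells, 8 orientation bins per cell
    # Skip the 1-pixel border so central differences stay inside the patch.
    for y in range(1, 15):
        for x in range(1, 15):
            dx = patch[y][x + 1] - patch[y][x - 1]
            dy = patch[y + 1][x] - patch[y - 1][x]
            magnitude = math.hypot(dx, dy)
            angle = math.atan2(dy, dx) % (2 * math.pi)
            bin_idx = int(angle / (2 * math.pi) * 8) % 8
            cell = (y // 4) * 4 + (x // 4)
            hist[cell * 8 + bin_idx] += magnitude

    def l2_normalise(v):
        norm = math.sqrt(sum(a * a for a in v))
        return [a / norm for a in v] if norm > 0 else v

    # Normalise, clip large entries at 0.2, renormalise (as in Lowe's SIFT).
    hist = l2_normalise(hist)
    hist = [min(a, 0.2) for a in hist]
    return l2_normalise(hist)


# A horizontal intensity ramp: every gradient points along +x (bin 0).
ramp = [[float(x) for x in range(16)] for _ in range(16)]
descriptor = sift_like_descriptor(ramp)
print(len(descriptor))  # → 128
```

Clipping at 0.2 before renormalising limits the influence of a few very strong gradients, which is one source of the descriptor's robustness to illumination changes.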

Deep CNN Features

In this work, we employ two pre-trained deep CNN models, VGG19 and AlexNet, for feature extraction. These models include convolution, pooling, normalization, ReLU, and fully connected (FC) layers. The convolution layers extract local features from an image.
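To make the layer types concrete, here is a toy, pure-Python sketch of one convolution → ReLU → 2 × 2 max-pooling stage on a single-channel image. The image and kernel are made-up examples (not AlexNet or VGG19 weights), and, as in most CNN frameworks, the "convolution" is implemented as cross-correlation without kernel flipping.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2; a trailing odd row/column is dropped."""
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]


# A 5x5 image with a dark-to-bright vertical edge and a horizontal-gradient kernel.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
edge_kernel = [[-1.0, 1.0]]
pooled = max_pool_2x2(relu(conv2d_valid(image, edge_kernel)))
print(pooled)  # → [[1.0, 0.0], [1.0, 0.0]]
```

The convolution responds only where intensity changes, ReLU discards negative responses, and max pooling keeps the strongest response in each 2 × 2 window while halving the spatial size.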

Feature Extraction

Max Pooling

Feature Selection

We employ entropy-based feature selection to reduce the dimensionality of the extracted features.
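The source does not spell out the exact entropy criterion, so the following is only a minimal sketch under an assumption: each feature column is discretised into a fixed number of histogram bins, its Shannon entropy is computed, and the k columns with the highest entropy are kept. The helpers `column_entropy` and `select_by_entropy` and the `bins` parameter are hypothetical.

```python
import math
from collections import Counter


def column_entropy(values, bins=10):
    """Shannon entropy (bits) of one feature column after equal-width binning."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant feature carries no information
    width = (hi - lo) / bins
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def select_by_entropy(features, k, bins=10):
    """Keep the k highest-entropy columns of an N x D feature matrix.

    Returns (kept column indices in original order, reduced N x k matrix).
    """
    d = len(features[0])
    scores = [column_entropy([row[j] for row in features], bins) for j in range(d)]
    keep = sorted(range(d), key=lambda j: scores[j], reverse=True)[:k]
    keep.sort()
    return keep, [[row[j] for j in keep] for row in features]


# Column 0 is constant, column 1 varies most, column 2 takes two values.
rows = [[1, 0, 0], [1, 1, 0], [1, 2, 1], [1, 3, 1]]
kept, reduced = select_by_entropy(rows, k=2)
print(kept)  # → [1, 2]
```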

Feature Fusion

We employ serial-based feature fusion, which concatenates the selected feature matrices along the feature dimension:

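$$\Pi(\mathrm{Fused}) = (N \times 1000) + (N \times 1000) + (N \times 100)$$

$$\Pi(\mathrm{Fused}) = N \times f_i$$

where $N$ is the number of images, $+$ denotes serial concatenation, and $f_i = 1000 + 1000 + 100 = 2100$ is the length of the fused feature vector.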
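Serial fusion amounts to placing each image's selected feature vectors side by side. The sketch below assumes the three selected matrices are plain N-row lists of lists with widths 1000, 1000, and 100, as in the equation; the helper name `serial_fusion` and the dummy feature values are hypothetical.

```python
def serial_fusion(*feature_matrices):
    """Concatenate N x d_k feature matrices row-wise into one N x sum(d_k) matrix."""
    if not feature_matrices:
        raise ValueError("need at least one feature matrix")
    n = len(feature_matrices[0])
    if any(len(m) != n for m in feature_matrices):
        raise ValueError("all matrices must have the same number of rows (images)")
    fused = []
    for i in range(n):
        row = []
        for m in feature_matrices:
            row.extend(m[i])  # append this matrix's features for image i
        fused.append(row)
    return fused


# Example with N = 2 images and dummy constant-valued feature blocks.
n_images = 2
block_1 = [[0.0] * 1000 for _ in range(n_images)]
block_2 = [[1.0] * 1000 for _ in range(n_images)]
block_3 = [[2.0] * 100 for _ in range(n_images)]
fused = serial_fusion(block_1, block_2, block_3)
print(len(fused), len(fused[0]))  # → 2 2100
```

Each row of the result is one image's 1 × 2100 fused feature vector, so the row count stays N while the widths add up.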

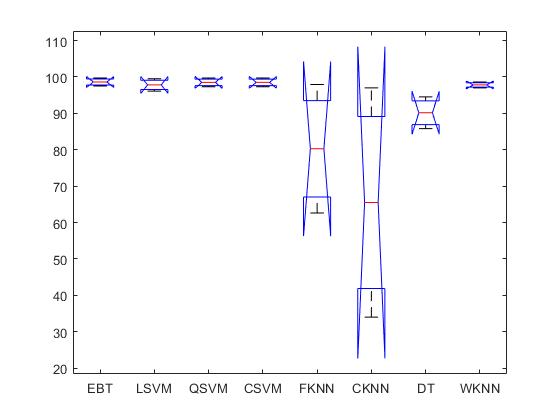

The final feature vector has size 1 × 2100 and is fed to an ensemble classifier for classification. The ensemble classifier is a supervised learning method, so it requires labeled training data before it can make predictions. An ensemble method combines the predictions of several classifiers to produce a better overall system.
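The source does not state which ensemble is used, so the sketch below assumes one common choice purely for illustration: a random-subspace ensemble of 1-nearest-neighbour learners combined by majority vote. Each learner sees a random subset of the feature dimensions; the class name `SubspaceNNEnsemble`, its parameters, and the toy data are hypothetical.

```python
import random
from collections import Counter


class SubspaceNNEnsemble:
    """Random-subspace ensemble of 1-NN classifiers with majority voting."""

    def __init__(self, n_learners=15, subspace_dim=2, seed=0):
        self.n_learners = n_learners
        self.subspace_dim = subspace_dim
        self.seed = seed

    def fit(self, X, y):
        rng = random.Random(self.seed)
        d = len(X[0])
        k = min(self.subspace_dim, d)
        # Each base learner gets its own random subset of feature indices.
        self.subspaces = [rng.sample(range(d), k) for _ in range(self.n_learners)]
        self.X, self.y = X, y
        return self

    def _nn_label(self, x, dims):
        # 1-NN: label of the training sample closest to x within `dims`.
        best = min(
            range(len(self.X)),
            key=lambda i: sum((self.X[i][j] - x[j]) ** 2 for j in dims),
        )
        return self.y[best]

    def predict(self, X):
        predictions = []
        for x in X:
            votes = Counter(self._nn_label(x, dims) for dims in self.subspaces)
            predictions.append(votes.most_common(1)[0][0])
        return predictions


# Toy training data: two well-separated classes in 4 dimensions.
X_train = [[0.0, 0.1, 0.2, 0.0], [0.3, 0.0, 0.1, 0.2],
           [9.8, 10.0, 9.9, 10.1], [10.2, 9.7, 10.0, 9.9]]
y_train = ["class_a", "class_a", "class_b", "class_b"]
model = SubspaceNNEnsemble(n_learners=5, subspace_dim=2).fit(X_train, y_train)
print(model.predict([[0.2, 0.2, 0.1, 0.1], [9.9, 9.9, 10.0, 10.0]]))  # → ['class_a', 'class_b']
```

Training each learner on a different feature subset makes their errors less correlated, which is what lets the majority vote outperform a single learner on high-dimensional fused vectors.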

Results

Figures: results on the Caltech-101, Pascal3D, 3D, and Berkeley datasets.

Muhammad Rashid, Muhammad Attique Khan, Muhammad Sharif, Mudassar Raza, Muhammad Masood, Farhat Afza (Multimedia Tools and Applications: Impact Factor 2.577 | 08-12-2018)

Cite As

Muhammad Rashid (2024). Object-Detection-and-Classification-A-Joint-Selection-and-Fu (https://github.com/rashidrao-pk/Object-Detection-and-Classification-A-Joint-Selection-and-Fusion-Strategy-of-Deep-Convolutional-Neu), GitHub. Retrieved .

Platform Compatibility

Windows, macOS, Linux

| Version | Published | Release Notes |
|---|---|---|
| 1.0.0 | | |