NewtonRaphson

Cite As

Mark Mikofski (2026). NewtonRaphson (https://github.com/mikofski/NewtonRaphson), GitHub. Retrieved .

Platform Compatibility: Windows, macOS, Linux

Categories

- Mathematics and Optimization > Optimization Toolbox > Systems of Nonlinear Equations > Newton-Raphson Method

Versions that use the GitHub default branch cannot be downloaded

| Version | Published | Release Notes | |

|---|---|---|---|

| 2.0 | Add BSD-3 license. |

|

|

| 1.6.0.1 | connect to github |

|

|

| 1.6.0.0 | * allow sparse matrices, replace cond() with condest()

|

||

| 1.5.0.0 | version 0.4 - exit if any dx is nan or inf, allow lsq curve-fit type problems. |

||

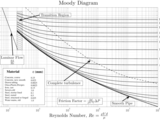

| 1.4.0.0 | (1) fix need dummy vars dx and convergence to display 0th iteration bug, and (2) if f isnan or isinf need to set lambda2 and f2 before continue bug (3) also reattach moody chart |

||

| 1.2.0.0 | v0.3: Display RCOND and lambda each step. Use ducktyping in funwrapper. Remove Ftyp and F scaling. Use backtracking line search. Output messages, exitflag and min relative step. |

||

| 1.1.0.0 | remove TypicalX and FinDiffRelStep, change feval to evalf & new example solves pipe flow problem using implicit Colebrook equation |

||

| 1.0.0.0 |
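The release notes mention a backtracking line search (1.2.0.0), exiting when a step `dx` is NaN or Inf (1.5.0.0), and a pipe-flow example built on the implicit Colebrook equation (1.1.0.0). The following is a minimal scalar sketch of those ideas in Python, not the package's MATLAB code. The function names (`newton_backtracking`, `friction_factor`), the Armijo constant `alpha`, the tolerances, and the exit-flag values are assumptions chosen for illustration. The unknown is x = 1/sqrt(f), so the Colebrook residual is g(x) = x + 2 log10(ε/D / 3.7 + 2.51 x / Re).

```python
import math


def newton_backtracking(g, dg, x0, tol=1e-10, max_iter=50, alpha=1e-4, lam_min=1e-10):
    """Scalar Newton-Raphson with a backtracking (Armijo) line search.

    Hypothetical exit flags: 1 = converged, 0 = max_iter reached,
    -1 = Newton step dx was NaN/Inf, -2 = line search failed.
    Returns (x, exitflag, iterations).
    """
    x = x0
    gx = g(x)
    if abs(gx) < tol:
        return x, 1, 0
    for k in range(1, max_iter + 1):
        d = dg(x)
        dx = -gx / d if d != 0 else math.inf  # full Newton step
        if not math.isfinite(dx):             # exit if the step is NaN or Inf
            return x, -1, k
        merit0 = 0.5 * gx * gx                # merit function 0.5*g^2 at current x
        lam = 1.0
        while True:
            x_trial = x + lam * dx
            g_trial = g(x_trial)
            # Accept if the residual is finite and the Armijo condition holds;
            # the directional derivative of the merit is g*g'*dx = -g^2 = -2*merit0.
            if math.isfinite(g_trial) and 0.5 * g_trial**2 <= merit0 * (1.0 - 2.0 * alpha * lam):
                break
            lam *= 0.5                        # backtrack: halve the step length
            if lam < lam_min:
                return x, -2, k
        x, gx = x_trial, g_trial
        if abs(gx) < tol:
            return x, 1, k
    return x, 0, max_iter


def friction_factor(Re, rel_rough, f0=0.02):
    """Solve the implicit Colebrook equation for the Darcy friction factor."""

    def g(x):
        # Colebrook residual in terms of x = 1/sqrt(f)
        arg = rel_rough / 3.7 + 2.51 * x / Re
        if arg <= 0:
            return math.nan                   # log undefined: force a backtrack
        return x + 2.0 * math.log10(arg)

    def dg(x):
        arg = rel_rough / 3.7 + 2.51 * x / Re
        return 1.0 + 2.0 / math.log(10.0) * (2.51 / Re) / arg

    x, flag, iters = newton_backtracking(g, dg, 1.0 / math.sqrt(f0))
    return 1.0 / x**2, flag, iters


if __name__ == "__main__":
    f, flag, iters = friction_factor(Re=1e5, rel_rough=1e-4)
    print(f"f = {f:.5f}, exitflag = {flag}, iterations = {iters}")
```

Returning NaN from the residual when the logarithm's argument is not positive lets the line search shrink the step instead of failing, which loosely mirrors the 1.4.0.0 fix for NaN or Inf residuals. A system version would replace `dg` with a Jacobian solve.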