Classify ECG Signals Using DAG Network Deployed to FPGA

This example shows how to classify human electrocardiogram (ECG) signals by deploying trainedSN, a SqueezeNet network trained by using transfer learning, to a Xilinx® Zynq® UltraScale+™ ZCU102 board.

Required Products

For this example, you need:

Xilinx Zynq UltraScale+ MPSoC ZCU102

Download Data

Download the data from the GitHub repository. To download the data from the website, click Clone and select Download ZIP. Save the file physionet_ECG_data-main.zip in a folder where you have write permission.

After downloading the data from GitHub®, unzip the file in your temporary directory.

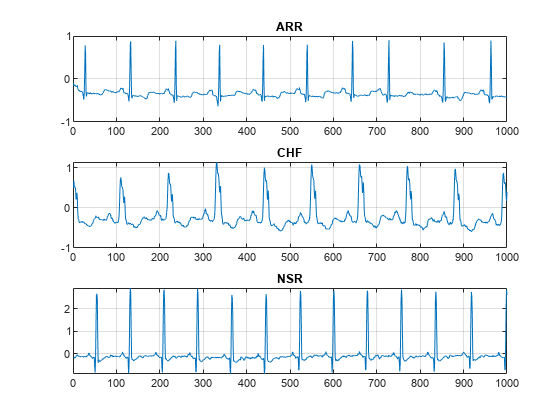

unzip(fullfile(tempdir,'physionet_ECG_data-main.zip'),tempdir);

The ECG data is classified into these labels:

persons with cardiac arrhythmia (ARR)

persons with congestive heart failure (CHF)

persons with normal sinus rhythms (NSR)

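The data is collected from these PhysioNet sources: the MIT-BIH Arrhythmia Database, the MIT-BIH Normal Sinus Rhythm Database, and the BIDMC Congestive Heart Failure Database.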

Unzipping creates the folder physionet_ECG_data-main in your temporary directory.

Unzip ECGData.zip in physionet_ECG_data-main. Load the ECGData.mat data file into your MATLAB® workspace.

unzip(fullfile(tempdir,'physionet_ECG_data-main','ECGData.zip'),...
    fullfile(tempdir,'physionet_ECG_data-main'))
load(fullfile(tempdir,'physionet_ECG_data-main','ECGData.mat'))

Create a folder called dataDir inside the ECG data directory and then create three directories called ARR, CHF, and NSR inside dataDir by using the helperCreateECGDirectories function. You can find the source code for this helper function in the Supporting Functions section at the end of this example.

% parentDir = tempdir;
parentDir = pwd;
dataDir = 'data';
helperCreateECGDirectories(ECGData,parentDir,dataDir);

Plot an ECG that represents each ECG category by using the helperPlotReps helper function. You can find the source code for this helper function in the Supporting Functions section at the end of this example.

helperPlotReps(ECGData)

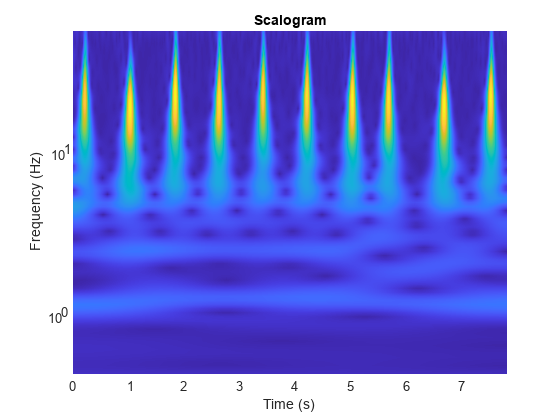

Create Time-Frequency Representations

After making the folders, create time-frequency representations of the ECG signals. Creating time-frequency representations helps with feature extraction. These representations are called scalograms. A scalogram is the absolute value of the continuous wavelet transform (CWT) coefficients of a signal. Create a CWT filter bank using cwtfilterbank (Wavelet Toolbox) for a signal with 1000 samples.

Fs = 128;
fb = cwtfilterbank(SignalLength=1000,...
    SamplingFrequency=Fs,...
    VoicesPerOctave=12);
sig = ECGData.Data(1,1:1000);
[cfs,frq] = wt(fb,sig);
t = (0:999)/Fs;
figure
pcolor(t,frq,abs(cfs))
set(gca,'yscale','log')
shading interp
axis tight
title('Scalogram')
xlabel('Time (s)')
ylabel('Frequency (Hz)')

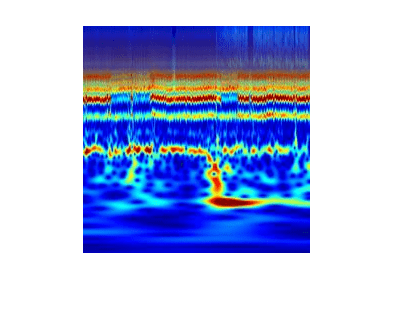

Use the helperCreateRGBfromTF helper function to create the scalograms as RGB images and write them to the appropriate subdirectory in dataDir. The source code for this helper function is in the Supporting Functions section at the end of this example. To be compatible with the SqueezeNet architecture, each RGB image is an array of size 227-by-227-by-3.

helperCreateRGBfromTF(ECGData,parentDir,dataDir)
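As a minimal sketch of the conversion that the helper function performs, the following code maps a matrix of coefficient magnitudes to a 227-by-227-by-3 RGB array. The random matrix and its 71-by-1000 size are hypothetical stand-ins for real CWT coefficients. The sketch assumes that the same functions the helper uses (rescale, im2uint8, ind2rgb, and imresize) are available.

```matlab
% Hypothetical stand-in for the magnitudes of CWT coefficients.
% The 71-by-1000 size is illustrative only, not taken from the ECG data.
rng default
cfs = abs(randn(71,1000));

% Scale the magnitudes to [0,1], convert to uint8 indices, and map the
% indices to RGB colors by using the jet colormap, as the helper does.
idxImage = im2uint8(rescale(cfs));
rgb = ind2rgb(idxImage,jet(128));

% Resize to the input size that SqueezeNet expects.
im = imresize(rgb,[227 227]);
disp(size(im))
```

The resizing step is what makes the image size independent of the signal length and the number of wavelet scales.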

Divide into Training and Validation Data

Load the scalogram images as an image datastore. The imageDatastore function automatically labels the images based on folder names and stores the data as an ImageDatastore object. An image datastore enables you to store large image data, including data that does not fit in memory, and efficiently read batches of images during training of a CNN.

allImages = imageDatastore(fullfile(parentDir,dataDir),...
    'IncludeSubfolders',true,...
    'LabelSource','foldernames');

Randomly divide the images into two groups. Use 80% of the images for training, and the remainder for validation. For purposes of reproducibility, we set the random seed to the default value.

rng default
[imgsTrain,imgsValidation] = splitEachLabel(allImages,0.8,'randomized');
disp(['Number of training images: ',num2str(numel(imgsTrain.Files))]);
disp(['Number of validation images: ',num2str(numel(imgsValidation.Files))]);
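To illustrate what splitting each label separately means, the following sketch performs an 80/20 split within each class of a small, hypothetical label vector. It is not the implementation of splitEachLabel. The label counts and the use of round to size each training subset are assumptions made for illustration.

```matlab
% Hypothetical label set: 10 ARR, 5 CHF, and 5 NSR images.
labels = categorical([repmat("ARR",10,1); repmat("CHF",5,1); repmat("NSR",5,1)]);

rng default                          % reproducible shuffle
isTrain = false(size(labels));
classNames = categories(labels);
for k = 1:numel(classNames)
    members = find(labels == classNames{k});      % indices of this class
    members = members(randperm(numel(members)));  % shuffle within the class
    nTrain = round(0.8*numel(members));           % 80% of this class (assumed rounding)
    isTrain(members(1:nTrain)) = true;
end

% Count the images of each class in the two subsets.
trainCounts = countcats(labels(isTrain));
valCounts = countcats(labels(~isTrain));
disp(table(classNames,trainCounts,valCounts))
```

Because the split is applied per class, each class keeps the same 80/20 proportion in the training and validation sets, even when the classes have different sizes.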

Load Transfer Learning Trained Network

Load the transfer learning trained SqueezeNet network trainedSN. To create the trainedSN network, see Classify Time Series Using Wavelet Analysis and Deep Learning.

load('trainedSN.mat');

Configure FPGA Board Interface

Configure the FPGA board interface for deep learning network deployment and MATLAB communication. Use the dlhdl.Target class to create a target object with a custom name for your target device and an interface that connects the target device to the host computer. To use JTAG, install Xilinx® Vivado® Design Suite 2022.1. To set the Xilinx Vivado tool path, enter:

% hdlsetuptoolpath('ToolName', 'Xilinx Vivado', 'ToolPath', 'C:\Xilinx\Vivado\2022.1\bin\vivado.bat');
hTarget = dlhdl.Target('Xilinx',Interface="Ethernet");

Prepare trainedSN Network for Deployment

Prepare the trainedSN network for deployment by using the dlhdl.Workflow class to create an object. When you create the object, specify the network and the bitstream name. Specify trainedSN as the network. Make sure that the bitstream name matches the data type and the FPGA board that you are targeting. In this example, the target FPGA board is the Xilinx ZCU102 SoC board. The bitstream uses a single data type.

hW = dlhdl.Workflow(Network=trainedSN,Bitstream='zcu102_single',Target=hTarget)

hW =

Workflow with properties:

Network: [1×1 DAGNetwork]

Bitstream: 'zcu102_single'

ProcessorConfig: []

Target: [1×1 dnnfpga.hardware.TargetEthernet]

Generate Weights, Biases, and Instructions

Generate weights, biases, and instructions for the trainedSN network by using the compile method of the dlhdl.Workflow object.

dn = hW.compile

### Compiling network for Deep Learning FPGA prototyping ...

### Targeting FPGA bitstream zcu102_single.

### The network includes the following layers:

1 'data' Image Input 227×227×3 images with 'zerocenter' normalization (SW Layer)

2 'conv1' Convolution 64 3×3×3 convolutions with stride [2 2] and padding [0 0 0 0] (HW Layer)

3 'relu_conv1' ReLU ReLU (HW Layer)

4 'pool1' Max Pooling 3×3 max pooling with stride [2 2] and padding [0 0 0 0] (HW Layer)

5 'fire2-squeeze1x1' Convolution 16 1×1×64 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

6 'fire2-relu_squeeze1x1' ReLU ReLU (HW Layer)

7 'fire2-expand1x1' Convolution 64 1×1×16 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

8 'fire2-relu_expand1x1' ReLU ReLU (HW Layer)

9 'fire2-expand3x3' Convolution 64 3×3×16 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

10 'fire2-relu_expand3x3' ReLU ReLU (HW Layer)

11 'fire2-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

12 'fire3-squeeze1x1' Convolution 16 1×1×128 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

13 'fire3-relu_squeeze1x1' ReLU ReLU (HW Layer)

14 'fire3-expand1x1' Convolution 64 1×1×16 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

15 'fire3-relu_expand1x1' ReLU ReLU (HW Layer)

16 'fire3-expand3x3' Convolution 64 3×3×16 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

17 'fire3-relu_expand3x3' ReLU ReLU (HW Layer)

18 'fire3-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

19 'pool3' Max Pooling 3×3 max pooling with stride [2 2] and padding [0 1 0 1] (HW Layer)

20 'fire4-squeeze1x1' Convolution 32 1×1×128 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

21 'fire4-relu_squeeze1x1' ReLU ReLU (HW Layer)

22 'fire4-expand1x1' Convolution 128 1×1×32 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

23 'fire4-relu_expand1x1' ReLU ReLU (HW Layer)

24 'fire4-expand3x3' Convolution 128 3×3×32 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

25 'fire4-relu_expand3x3' ReLU ReLU (HW Layer)

26 'fire4-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

27 'fire5-squeeze1x1' Convolution 32 1×1×256 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

28 'fire5-relu_squeeze1x1' ReLU ReLU (HW Layer)

29 'fire5-expand1x1' Convolution 128 1×1×32 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

30 'fire5-relu_expand1x1' ReLU ReLU (HW Layer)

31 'fire5-expand3x3' Convolution 128 3×3×32 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

32 'fire5-relu_expand3x3' ReLU ReLU (HW Layer)

33 'fire5-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

34 'pool5' Max Pooling 3×3 max pooling with stride [2 2] and padding [0 1 0 1] (HW Layer)

35 'fire6-squeeze1x1' Convolution 48 1×1×256 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

36 'fire6-relu_squeeze1x1' ReLU ReLU (HW Layer)

37 'fire6-expand1x1' Convolution 192 1×1×48 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

38 'fire6-relu_expand1x1' ReLU ReLU (HW Layer)

39 'fire6-expand3x3' Convolution 192 3×3×48 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

40 'fire6-relu_expand3x3' ReLU ReLU (HW Layer)

41 'fire6-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

42 'fire7-squeeze1x1' Convolution 48 1×1×384 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

43 'fire7-relu_squeeze1x1' ReLU ReLU (HW Layer)

44 'fire7-expand1x1' Convolution 192 1×1×48 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

45 'fire7-relu_expand1x1' ReLU ReLU (HW Layer)

46 'fire7-expand3x3' Convolution 192 3×3×48 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

47 'fire7-relu_expand3x3' ReLU ReLU (HW Layer)

48 'fire7-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

49 'fire8-squeeze1x1' Convolution 64 1×1×384 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

50 'fire8-relu_squeeze1x1' ReLU ReLU (HW Layer)

51 'fire8-expand1x1' Convolution 256 1×1×64 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

52 'fire8-relu_expand1x1' ReLU ReLU (HW Layer)

53 'fire8-expand3x3' Convolution 256 3×3×64 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

54 'fire8-relu_expand3x3' ReLU ReLU (HW Layer)

55 'fire8-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

56 'fire9-squeeze1x1' Convolution 64 1×1×512 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

57 'fire9-relu_squeeze1x1' ReLU ReLU (HW Layer)

58 'fire9-expand1x1' Convolution 256 1×1×64 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

59 'fire9-relu_expand1x1' ReLU ReLU (HW Layer)

60 'fire9-expand3x3' Convolution 256 3×3×64 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

61 'fire9-relu_expand3x3' ReLU ReLU (HW Layer)

62 'fire9-concat' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

63 'new_dropout' Dropout 60% dropout (HW Layer)

64 'new_conv' Convolution 3 1×1×512 convolutions with stride [1 1] and padding [0 0 0 0] (HW Layer)

65 'relu_conv10' ReLU ReLU (HW Layer)

66 'pool10' 2-D Global Average Pooling 2-D global average pooling (HW Layer)

67 'prob' Softmax softmax (HW Layer)

68 'new_classoutput' Classification Output crossentropyex with 'ARR' and 2 other classes (SW Layer)

### Notice: The layer 'data' of type 'ImageInputLayer' is split into an image input layer 'data' and an addition layer 'data_norm' for normalization on hardware.

### Notice: The layer 'prob' with type 'nnet.cnn.layer.SoftmaxLayer' is implemented in software.

### Notice: The layer 'new_classoutput' with type 'nnet.cnn.layer.ClassificationOutputLayer' is implemented in software.

### Compiling layer group: conv1>>fire2-relu_squeeze1x1 ...

### Compiling layer group: conv1>>fire2-relu_squeeze1x1 ... complete.

### Compiling layer group: fire2-expand1x1>>fire2-relu_expand1x1 ...

### Compiling layer group: fire2-expand1x1>>fire2-relu_expand1x1 ... complete.

### Compiling layer group: fire2-expand3x3>>fire2-relu_expand3x3 ...

### Compiling layer group: fire2-expand3x3>>fire2-relu_expand3x3 ... complete.

### Compiling layer group: fire3-squeeze1x1>>fire3-relu_squeeze1x1 ...

### Compiling layer group: fire3-squeeze1x1>>fire3-relu_squeeze1x1 ... complete.

### Compiling layer group: fire3-expand1x1>>fire3-relu_expand1x1 ...

### Compiling layer group: fire3-expand1x1>>fire3-relu_expand1x1 ... complete.

### Compiling layer group: fire3-expand3x3>>fire3-relu_expand3x3 ...

### Compiling layer group: fire3-expand3x3>>fire3-relu_expand3x3 ... complete.

### Compiling layer group: pool3>>fire4-relu_squeeze1x1 ...

### Compiling layer group: pool3>>fire4-relu_squeeze1x1 ... complete.

### Compiling layer group: fire4-expand1x1>>fire4-relu_expand1x1 ...

### Compiling layer group: fire4-expand1x1>>fire4-relu_expand1x1 ... complete.

### Compiling layer group: fire4-expand3x3>>fire4-relu_expand3x3 ...

### Compiling layer group: fire4-expand3x3>>fire4-relu_expand3x3 ... complete.

### Compiling layer group: fire5-squeeze1x1>>fire5-relu_squeeze1x1 ...

### Compiling layer group: fire5-squeeze1x1>>fire5-relu_squeeze1x1 ... complete.

### Compiling layer group: fire5-expand1x1>>fire5-relu_expand1x1 ...

### Compiling layer group: fire5-expand1x1>>fire5-relu_expand1x1 ... complete.

### Compiling layer group: fire5-expand3x3>>fire5-relu_expand3x3 ...

### Compiling layer group: fire5-expand3x3>>fire5-relu_expand3x3 ... complete.

### Compiling layer group: pool5>>fire6-relu_squeeze1x1 ...

### Compiling layer group: pool5>>fire6-relu_squeeze1x1 ... complete.

### Compiling layer group: fire6-expand1x1>>fire6-relu_expand1x1 ...

### Compiling layer group: fire6-expand1x1>>fire6-relu_expand1x1 ... complete.

### Compiling layer group: fire6-expand3x3>>fire6-relu_expand3x3 ...

### Compiling layer group: fire6-expand3x3>>fire6-relu_expand3x3 ... complete.

### Compiling layer group: fire7-squeeze1x1>>fire7-relu_squeeze1x1 ...

### Compiling layer group: fire7-squeeze1x1>>fire7-relu_squeeze1x1 ... complete.

### Compiling layer group: fire7-expand1x1>>fire7-relu_expand1x1 ...

### Compiling layer group: fire7-expand1x1>>fire7-relu_expand1x1 ... complete.

### Compiling layer group: fire7-expand3x3>>fire7-relu_expand3x3 ...

### Compiling layer group: fire7-expand3x3>>fire7-relu_expand3x3 ... complete.

### Compiling layer group: fire8-squeeze1x1>>fire8-relu_squeeze1x1 ...

### Compiling layer group: fire8-squeeze1x1>>fire8-relu_squeeze1x1 ... complete.

### Compiling layer group: fire8-expand1x1>>fire8-relu_expand1x1 ...

### Compiling layer group: fire8-expand1x1>>fire8-relu_expand1x1 ... complete.

### Compiling layer group: fire8-expand3x3>>fire8-relu_expand3x3 ...

### Compiling layer group: fire8-expand3x3>>fire8-relu_expand3x3 ... complete.

### Compiling layer group: fire9-squeeze1x1>>fire9-relu_squeeze1x1 ...

### Compiling layer group: fire9-squeeze1x1>>fire9-relu_squeeze1x1 ... complete.

### Compiling layer group: fire9-expand1x1>>fire9-relu_expand1x1 ...

### Compiling layer group: fire9-expand1x1>>fire9-relu_expand1x1 ... complete.

### Compiling layer group: fire9-expand3x3>>fire9-relu_expand3x3 ...

### Compiling layer group: fire9-expand3x3>>fire9-relu_expand3x3 ... complete.

### Compiling layer group: new_conv>>pool10 ...

### Compiling layer group: new_conv>>pool10 ... complete.

### Allocating external memory buffers:

offset_name offset_address allocated_space

_______________________ ______________ ________________

"InputDataOffset" "0x00000000" "24.0 MB"

"OutputResultOffset" "0x01800000" "4.0 MB"

"SchedulerDataOffset" "0x01c00000" "4.0 MB"

"SystemBufferOffset" "0x02000000" "28.0 MB"

"InstructionDataOffset" "0x03c00000" "4.0 MB"

"ConvWeightDataOffset" "0x04000000" "12.0 MB"

"EndOffset" "0x04c00000" "Total: 76.0 MB"

### Network compilation complete.

dn = struct with fields:

weights: [1×1 struct]

instructions: [1×1 struct]

registers: [1×1 struct]

syncInstructions: [1×1 struct]

constantData: {{} [-24.2516 -50.7900 -184.4480 0 -24.2516 -50.7900 -184.4480 0 -24.2516 -50.7900 -184.4480 0 -24.2516 -50.7900 -184.4480 0 -24.2516 -50.7900 -184.4480 0 -24.2516 -50.7900 -184.4480 0 -24.2516 -50.7900 -184.4480 0 -24.2516 … ]}
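The allocated_space column in the external memory buffer table of the compile log follows from the offset addresses: each buffer extends from its own offset to the next one. The following sketch recomputes the sizes from the offsets listed in the log. The variable names are illustrative and are not part of the compile output.

```matlab
% Offsets copied from the compile log, without the 0x prefix.
% The last entry is EndOffset.
bufferNames = {'InputData','OutputResult','SchedulerData', ...
    'SystemBuffer','InstructionData','ConvWeightData'};
offsetsHex = {'00000000','01800000','01c00000','02000000', ...
    '03c00000','04000000','04c00000'};

offsets = hex2dec(offsetsHex);   % byte addresses as doubles
sizesMB = diff(offsets)/2^20;    % distance to the next offset, in MB

for k = 1:numel(bufferNames)
    fprintf('%-16s %5.1f MB\n',bufferNames{k},sizesMB(k));
end
fprintf('Total: %.1f MB\n',offsets(end)/2^20);
```

The computed sizes (24, 4, 4, 28, 4, and 12 MB, for a total of 76 MB) match the values that the compile log reports.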

Program Bitstream onto FPGA and Download Network Weights

To deploy the network on the Xilinx ZCU102 hardware, run the deploy function of the dlhdl.Workflow object. This function uses the output of the compile function to program the FPGA board by using the programming file. It also downloads the network weights and biases. While programming the FPGA device, the deploy function displays progress messages and the time it takes to deploy the network.

hW.deploy

### Programming FPGA Bitstream using Ethernet...

### Attempting to connect to the hardware board at 192.168.1.101...

### Connection successful

### Programming FPGA device on Xilinx SoC hardware board at 192.168.1.101...

### Copying FPGA programming files to SD card...

### Setting FPGA bitstream and devicetree for boot...

# Copying Bitstream zcu102_single.bit to /mnt/hdlcoder_rd

# Set Bitstream to hdlcoder_rd/zcu102_single.bit

# Copying Devicetree devicetree_dlhdl.dtb to /mnt/hdlcoder_rd

# Set Devicetree to hdlcoder_rd/devicetree_dlhdl.dtb

# Set up boot for Reference Design: 'AXI-Stream DDR Memory Access : 3-AXIM'

### Rebooting Xilinx SoC at 192.168.1.101...

### Reboot may take several seconds...

### Attempting to connect to the hardware board at 192.168.1.101...

### Connection successful

### Programming the FPGA bitstream has been completed successfully.

### Loading weights to Conv Processor.

### Conv Weights loaded. Current time is 28-Apr-2022 15:33:54

Load Image for Prediction and Run Prediction

Load an image by randomly selecting an image from the validation datastore.

idx = randi(numel(imgsValidation.Files));
testim = readimage(imgsValidation,idx);
imshow(testim)

Execute the predict method on the dlhdl.Workflow object and then show the label in the MATLAB command window.

[YPred1,probs1] = classify(trainedSN,testim);
accuracy1 = (YPred1==imgsValidation.Labels(idx)); % compare with the label of the selected image
[YPred2,probs2] = hW.predict(single(testim),'profile','on');

### Finished writing input activations.

### Running single input activation.

Deep Learning Processor Profiler Performance Results

LastFrameLatency(cycles) LastFrameLatency(seconds) FramesNum Total Latency Frames/s

------------- ------------- --------- --------- ---------

Network 9253245 0.04206 1 9257253 23.8

data_norm 361047 0.00164

conv1 672559 0.00306

pool1 509079 0.00231

fire2-squeeze1x1 308258 0.00140

fire2-expand1x1 305646 0.00139

fire2-expand3x3 305085 0.00139

fire3-squeeze1x1 627799 0.00285

fire3-expand1x1 305241 0.00139

fire3-expand3x3 305256 0.00139

pool3 286627 0.00130

fire4-squeeze1x1 264151 0.00120

fire4-expand1x1 264600 0.00120

fire4-expand3x3 264567 0.00120

fire5-squeeze1x1 734588 0.00334

fire5-expand1x1 264575 0.00120

fire5-expand3x3 264719 0.00120

pool5 219725 0.00100

fire6-squeeze1x1 194605 0.00088

fire6-expand1x1 144199 0.00066

fire6-expand3x3 144819 0.00066

fire7-squeeze1x1 288819 0.00131

fire7-expand1x1 144285 0.00066

fire7-expand3x3 144841 0.00066

fire8-squeeze1x1 368116 0.00167

fire8-expand1x1 243691 0.00111

fire8-expand3x3 243738 0.00111

fire9-squeeze1x1 488338 0.00222

fire9-expand1x1 243654 0.00111

fire9-expand3x3 243683 0.00111

new_conv 93849 0.00043

pool10 2751 0.00001

* The clock frequency of the DL processor is: 220MHz
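The seconds and Frames/s columns of the profiler table can be cross-checked against the cycle counts and the reported clock frequency. The following sketch uses the values from the table above. It assumes that the frame rate is the clock frequency divided by the total latency in cycles for the single frame.

```matlab
clockHz = 220e6;            % DL processor clock frequency from the profiler
networkCycles = 9253245;    % LastFrameLatency(cycles) for the Network row
totalCycles = 9257253;      % Total Latency for the Network row (one frame)

latencySeconds = networkCycles/clockHz;   % cycles divided by cycles per second
framesPerSecond = clockHz/totalCycles;    % assumed definition of Frames/s

fprintf('Latency: %.5f s\n',latencySeconds);
fprintf('Frame rate: %.1f frames/s\n',framesPerSecond);
```

The results agree with the reported 0.04206 seconds and 23.8 frames per second. The same division applies to each layer row, for example 672559 cycles for conv1 corresponds to about 0.00306 seconds.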

[val,idx] = max(YPred2);
trainedSN.Layers(end).ClassNames{idx}

ans = 'ARR'
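The label lookup above selects the class whose score is largest. The following self-contained sketch shows the same step with a hypothetical score vector. The scores are illustrative values, not measured output, and the class order is an assumption based on the alphabetical folder names.

```matlab
classNames = {'ARR','CHF','NSR'};    % assumed class order
scores = single([0.91 0.06 0.03]);   % hypothetical scores, not measured output

% The index of the largest score selects the predicted class.
[topScore,idx] = max(scores);
predictedLabel = classNames{idx};
fprintf('Predicted label: %s (score %.2f)\n',predictedLabel,topScore);
```

Comparing this label with the label that classify returns for the same image is a quick way to check that the FPGA prediction agrees with the MATLAB prediction.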

Supporting Functions

helperCreateECGDirectories creates a data directory inside a parent directory, then creates three subdirectories inside the data directory. The subdirectories are named after each class of ECG signal found in ECGData.

function helperCreateECGDirectories(ECGData,parentFolder,dataFolder)
rootFolder = parentFolder;
localFolder = dataFolder;
mkdir(fullfile(rootFolder,localFolder))
folderLabels = unique(ECGData.Labels);
for i = 1:numel(folderLabels)
    mkdir(fullfile(rootFolder,localFolder,char(folderLabels(i))));
end
end

helperPlotReps plots the first thousand samples of a representative of each class of ECG signal found in ECGData.

function helperPlotReps(ECGData)
folderLabels = unique(ECGData.Labels);
for k=1:3
    ecgType = folderLabels{k};
    ind = find(ismember(ECGData.Labels,ecgType));
    subplot(3,1,k)
    plot(ECGData.Data(ind(1),1:1000));
    grid on
    title(ecgType)
end
end

helperCreateRGBfromTF uses cwtfilterbank (Wavelet Toolbox) to obtain the continuous wavelet transform of the ECG signals and generates the scalograms from the wavelet coefficients. The helper function resizes the scalograms and writes them to disk as jpeg images.

function helperCreateRGBfromTF(ECGData,parentFolder,childFolder)
imageRoot = fullfile(parentFolder,childFolder);
data = ECGData.Data;
labels = ECGData.Labels;
[~,signalLength] = size(data);
fb = cwtfilterbank('SignalLength',signalLength,'VoicesPerOctave',12);
r = size(data,1);
for ii = 1:r
    cfs = abs(fb.wt(data(ii,:)));
    im = ind2rgb(im2uint8(rescale(cfs)),jet(128));
    imgLoc = fullfile(imageRoot,char(labels(ii)));
    imFileName = strcat(char(labels(ii)),'_',num2str(ii),'.jpg');
    imwrite(imresize(im,[227 227]),fullfile(imgLoc,imFileName));
end
end

See Also

dlhdl.Workflow | dlhdl.Target | compile | deploy | predict