Visual Localization in a Parking Lot

This example shows how to develop a visual localization system using synthetic image data from the Unreal Engine® simulation environment.

Obtaining ground truth to evaluate the performance of a localization algorithm under different conditions is challenging. Simulating different scenarios in a virtual environment is a cost-effective way to obtain ground truth compared with more expensive approaches such as high-precision inertial navigation systems or differential GPS. Simulation also enables testing under a variety of scenarios and sensor configurations and supports rapid algorithm development, while providing precise ground truth.

This example uses the Unreal Engine simulation environment from Epic Games® to develop and evaluate a visual localization algorithm in a parking lot scenario.

Overview

Visual localization is the process of estimating the camera pose for a captured image relative to a visual representation of a known scene. It is a key technology for applications such as augmented reality, robotics, and automated driving. Compared with visual simultaneous localization and mapping (vSLAM), as described in the Implement Visual SLAM in MATLAB example, visual localization assumes that a map of the environment is known and does not require 3-D reconstruction or loop closure detection. The visual localization pipeline consists of these steps:

Map Loading: Load the pre-built 3-D map, which contains the world point positions and the 3-D to 2-D correspondences between the map points and the key frames. Additionally, for each key frame, load the feature descriptors corresponding to the 3-D map points.

Global Initialization: Extract features from the first image frame and match them with the features corresponding to all the 3-D map points. After obtaining the 3-D to 2-D correspondences, estimate the camera pose of the first frame in world coordinates by solving a Perspective-n-Point (PnP) problem. Refine the pose using motion-only bundle adjustment. The key frame that shares the most covisible 3-D map points with the first frame becomes the reference key frame.

Tracking: Once the first frame is localized, match the features in each new frame with the features in the reference key frame that have known 3-D world points. Estimate and refine the camera pose using the same approach as in the Global Initialization step. You can further refine the camera pose by tracking the features associated with nearby key frames.
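The reference key frame selection in the Global Initialization step comes down to counting shared map points. This minimal plain-MATLAB sketch makes one assumption: the map point indices matched in the first frame and the map point indices observed by each key frame are given as hypothetical numeric arrays (matchedMapPointIdx and keyFrameMapPoints). In the example itself, this correspondence information lives in the mapPointSet object, not in these variables.

```matlab
% Hypothetical inputs (illustration only, not from the example):
%   matchedMapPointIdx - indices of 3-D map points matched in the first frame
%   keyFrameMapPoints  - cell array; element k lists the map point indices
%                        observed by key frame k
matchedMapPointIdx = [3 7 8 12 15 21];
keyFrameMapPoints  = {[1 2 3 4], [3 7 8 9 12], [12 15 21 22], [30 31]};

numKeyFrames = numel(keyFrameMapPoints);
numCovisible = zeros(1, numKeyFrames);
for k = 1:numKeyFrames
    % Count the map points seen both in the current frame and in key frame k
    numCovisible(k) = numel(intersect(matchedMapPointIdx, keyFrameMapPoints{k}));
end

% The reference key frame is the one sharing the most covisible map points
[maxCovisible, refKeyFrameIdx] = max(numCovisible);
fprintf('Reference key frame: %d (%d covisible map points)\n', ...
    refKeyFrameIdx, maxCovisible);
```

The max function returns the first maximum it finds, so ties resolve to the lowest key frame index. That is a design choice of this sketch, not necessarily the example's behavior.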

Create Scene

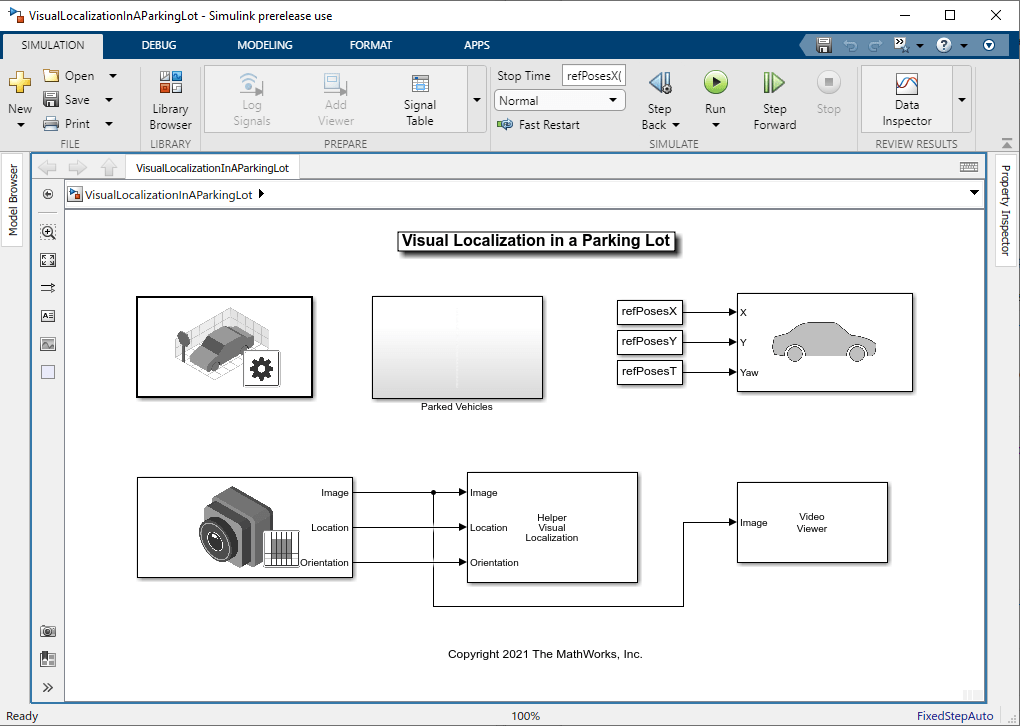

Guiding a vehicle into a parking spot is a challenging maneuver that relies on accurate localization. The VisualLocalizationInAParkingLot model simulates a visual localization system in the parking lot scenario used in the Develop Visual SLAM Algorithm Using Unreal Engine Simulation example.

The Simulation 3D Scene Configuration block sets up the Large Parking Lot scene. The Parked Vehicles subsystem adds parked cars to the parking lot. The Simulation 3D Vehicle with Ground Following block controls the motion of the ego vehicle.

The Simulation 3D Camera block models a monocular camera fixed at the center of the vehicle's roof. You can use the Camera Calibrator app to estimate the intrinsics of the actual camera that you want to simulate.

The Helper Visual Localization MATLAB System block implements the visual localization algorithm. The helperGlobalInitialization function estimates the initial camera pose with respect to the map. The helperTrackRefKeyFrame function estimates the subsequent camera poses, and the helperTrackLocalKeyFrames function refines them. This block also visualizes the estimated camera trajectory in the pre-built map. You can specify the pre-built map data and the camera intrinsic parameters in the block dialog box.
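The helperGlobalInitialization function obtains the initial pose by solving a PnP problem, but its implementation is not shown here. To illustrate the idea in a self-contained way, this sketch uses the linear Direct Linear Transform (DLT). DLT is a swapped-in textbook technique and is not necessarily what the helper uses: practical PnP solvers usually pair a minimal solver with RANSAC-style outlier rejection, and the example refines the result with motion-only bundle adjustment. Only the intrinsics come from this example. The 3-D points and the ground-truth pose are hypothetical and noise-free.

```matlab
% Intrinsics from this example (no lens distortion assumed)
K = [700 0 600; 0 700 180; 0 0 1];

% Hypothetical ground-truth world-to-camera pose: 10 degrees about Y, plus a translation
theta = 10 * pi / 180;
Rtrue = [cos(theta) 0 sin(theta); 0 1 0; -sin(theta) 0 cos(theta)];
ttrue = [0.5; -0.2; 1.0];

% Hypothetical non-coplanar 3-D map points (N-by-3, meters)
worldPoints = [-1 -1 5; 1 -1 6; -1 1 7; 1 1 5.5; ...
                0 0 8; 2 0.5 6.5; -2 -0.5 9; 0.5 1.5 7.5];
N = size(worldPoints, 1);

% Synthesize noise-free 2-D observations by projecting the points
ptsCam = Rtrue * worldPoints' + ttrue;        % 3-by-N, camera frame
uvh = K * ptsCam;
imagePoints = (uvh(1:2, :) ./ uvh(3, :))';    % N-by-2, pixels

% Convert pixels to normalized image coordinates with the inverse intrinsics
xn = K \ [imagePoints'; ones(1, N)];
xn = xn(1:2, :) ./ xn(3, :);

% Build the 2N-by-12 DLT system A * p = 0, where p stacks the rows of [R t]
A = zeros(2 * N, 12);
for i = 1:N
    Xh = [worldPoints(i, :), 1];              % homogeneous world point (1-by-4)
    A(2*i - 1, :) = [Xh, zeros(1, 4), -xn(1, i) * Xh];
    A(2*i,     :) = [zeros(1, 4), Xh, -xn(2, i) * Xh];
end

% The solution is the right singular vector with the smallest singular value
[~, ~, V] = svd(A);
P = reshape(V(:, end), 4, 3)';                % 3-by-4, equals lambda * [R t]

% Project the left 3-by-3 block onto a rotation and remove the unknown scale
[U, S, W] = svd(P(:, 1:3));
R = U * W';
scale = mean(diag(S));
t = P(:, 4) / scale;
if det(R) < 0                                 % lambda was negative: flip the sign
    R = -R;
    t = -t;
end

% Camera pose in world coordinates (inverse of the world-to-camera transform)
camOrientation = R';
camLocation = -R' * t;
```

With noisy or outlier-contaminated matches, this linear estimate is only a starting point. That is why the pipeline described in the Overview follows PnP with motion-only bundle adjustment.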

% Open the model
modelName = 'VisualLocalizationInAParkingLot';
open_system(modelName);

![Video Viewer figure displaying a camera image](../../examples/shared_vision_driving/win64/VisualLocalizationInAParkingLotExample_01.png)

Load Map Data

The pre-built map data was generated using the stereo camera in the Develop Visual SLAM Algorithm Using Unreal Engine Simulation example. The data consists of two objects that are commonly used to manage image and map data for visual SLAM:

vSetKeyFrames: An imageviewset object storing the camera poses of the key frames and the feature points associated with each 3-D map point in mapPointSet.

mapPointSet: A worldpointset object storing the 3-D map point locations and the correspondences between the 3-D points and the 2-D feature points across key frames. The 3-D map points provide a sparse representation of the environment.

% Load pre-built map data
mapData = load("prebuiltMapData.mat")

mapData = struct with fields:

    vSetKeyFrames: [1×1 imageviewset]
      mapPointSet: [1×1 worldpointset]

Set Up Ego Vehicle and Camera Sensor

You can follow the Select Waypoints for Unreal Engine Simulation example to select a sequence of waypoints and generate a reference trajectory for the ego vehicle. This example uses a recorded reference trajectory.

% Load reference path
refPosesData = load('parkingLotLocalizationData.mat');

% Set reference trajectory of the ego vehicle
refPosesX = refPosesData.refPosesX;
refPosesY = refPosesData.refPosesY;
refPosesT = refPosesData.refPosesT;

% Set camera intrinsics
focalLength    = [700, 700];  % specified in units of pixels
principalPoint = [600, 180];  % in pixels [x, y]
imageSize      = [370, 1230]; % in pixels [mrows, ncols]
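These intrinsics fully define an ideal pinhole camera. This minimal sketch assumes no lens distortion and the column-vector convention K = [fx 0 cx; 0 fy cy; 0 0 1]. It does four things:

1. Builds the intrinsic matrix.
2. Projects a few hypothetical camera-frame points to pixels.
3. Computes the reprojection error against hypothetical observed feature locations. This is the quantity that PnP and motion-only bundle adjustment minimize.
4. Approximates the fields of view.

The field-of-view formula treats the principal point as if it were at the image center, which is only approximately true for these values.

```matlab
% Camera intrinsics from the example
focalLength    = [700, 700];   % [fx, fy] in pixels
principalPoint = [600, 180];   % [cx, cy] in pixels
imageSize      = [370, 1230];  % [mrows, ncols] in pixels

% Intrinsic matrix for column-vector points: p ~ K * [X; Y; Z]
K = [focalLength(1), 0,              principalPoint(1);
     0,              focalLength(2), principalPoint(2);
     0,              0,              1];

% Hypothetical 3-D points in the camera frame (meters, Z pointing forward)
ptsCam = [ 0.0  0.0  10.0;
           1.0 -0.5   5.0;
          -2.0  0.3  20.0];

% Pinhole projection: apply K, then divide by depth
proj = (K * ptsCam')';
uv = proj(:, 1:2) ./ proj(:, 3);

% Reprojection error against hypothetical observed feature locations (pixels)
uvObserved = [601, 179; 738, 112; 530, 190.5];
reprojErr = sqrt(sum((uv - uvObserved).^2, 2));

% Approximate horizontal and vertical fields of view in degrees
hfov = 2 * atand(imageSize(2) / (2 * focalLength(1)));
vfov = 2 * atand(imageSize(1) / (2 * focalLength(2)));
fprintf('HFOV = %.1f deg, VFOV = %.1f deg\n', hfov, vfov);
```

The field-of-view lines show why increasing the focal length while keeping the resolution fixed narrows the view. Conversely, a wider view requires a shorter focal length or more pixels.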

Run Simulation

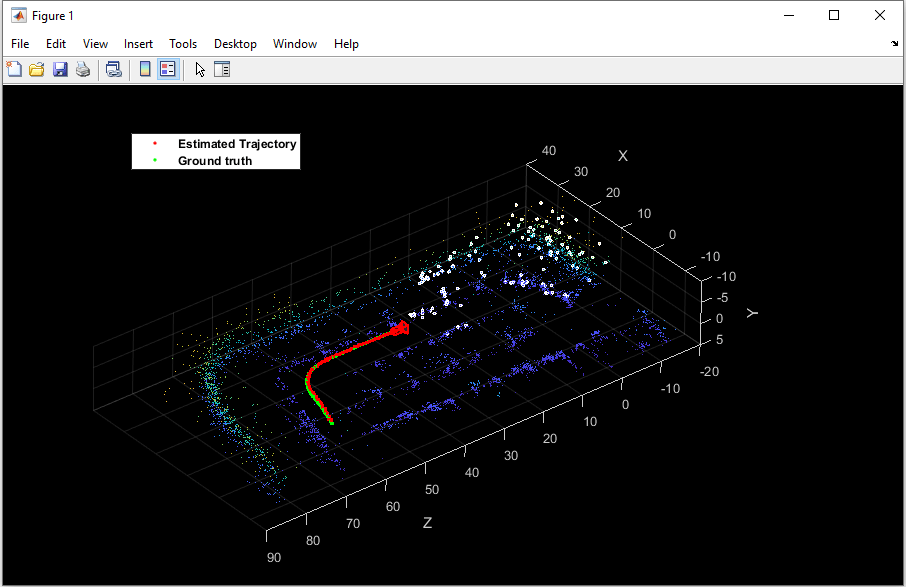

Run the simulation and visualize the estimated camera trajectory in the pre-built map. The white points represent the tracked 3-D map points in the current frame. You can compare the estimated trajectory with the ground truth provided by the Simulation 3D Camera block to evaluate the localization accuracy.
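One common way to quantify this comparison is the root-mean-square (RMS) error between estimated and ground-truth camera positions. This sketch makes two assumptions. First, both trajectories are N-by-3 position arrays, time-aligned frame by frame and expressed in the same metric world frame, so no scale or rigid alignment is applied. Second, the arrays below are hypothetical stand-ins, not logged outputs of the model.

```matlab
% Hypothetical trajectories (N-by-3, meters), one row per frame
gTruth    = [0 0 0; 1 0 0; 2 0 0; 3 0 0];
estimated = [0 0 0; 1.1 0 0; 2 0.1 0; 3 0 -0.2];

% Per-frame Euclidean position error
posErr = sqrt(sum((estimated - gTruth).^2, 2));

% Root-mean-square position error over the whole trajectory
rmse = sqrt(mean(posErr.^2));
fprintf('Position RMSE: %.3f m (max %.3f m)\n', rmse, max(posErr));
```

Reporting the maximum alongside the RMSE helps reveal short localization failures that an average can hide.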

if ismac
    error(['3D Simulation is supported only on Microsoft' char(174) ' Windows' char(174) ' and Linux' char(174) '.']);
end

% Open video viewer to examine camera images
open_system([modelName, '/Video Viewer']);

% Run simulation
sim(modelName);

![Video Viewer figure displaying a camera image](../../examples/shared_vision_driving/win64/VisualLocalizationInAParkingLotExample_03.png)

Close the model.

close_system([modelName, '/Video Viewer']);
close_system(modelName, 0);

Conclusion

With this setup, you can rapidly iterate over different scenarios, sensor configurations, or reference trajectories and refine the visual localization algorithm before moving to real-world testing.

To select a different scenario, use the Simulation 3D Scene Configuration block. Choose from the existing prebuilt scenes or create a custom scene in the Unreal® Editor.

To create a different reference trajectory, use the helperSelectSceneWaypoints tool, as shown in the Select Waypoints for Unreal Engine Simulation example.

To alter the sensor configuration, use the Simulation 3D Camera block. The Mounting tab provides options for specifying different sensor mounting placements. The Parameters tab provides options for modifying sensor parameters such as detection range, field of view, and resolution. You can also use the Simulation 3D Fisheye Camera block, which provides a larger field of view.