Perform Instance Segmentation Using Mask R-CNN

This example shows how to segment individual instances of people and cars using a multiclass mask region-based convolutional neural network (R-CNN).

Instance segmentation is a computer vision technique in which you detect and localize objects while simultaneously generating a segmentation map for each of the detected instances.

This example first shows how to perform instance segmentation using a pretrained Mask R-CNN that detects two classes. Then, you can optionally download a data set and train a multiclass Mask R-CNN using transfer learning.

Load Pretrained Network

Specify dataFolder as the desired location of the pretrained network and data.

dataFolder = fullfile(tempdir,"coco");

Download the pretrained Mask R-CNN. The network is stored as a maskrcnn object.

trainedMaskRCNN_url = "https://www.mathworks.com/supportfiles/vision/data/maskrcnn_object_person_car_v2.mat";
downloadTrainedMaskRCNN(trainedMaskRCNN_url,dataFolder);
load(fullfile(dataFolder,"maskrcnn_object_person_car_v2.mat"));

Segment People in Image

Read a test image that contains objects of the target classes.

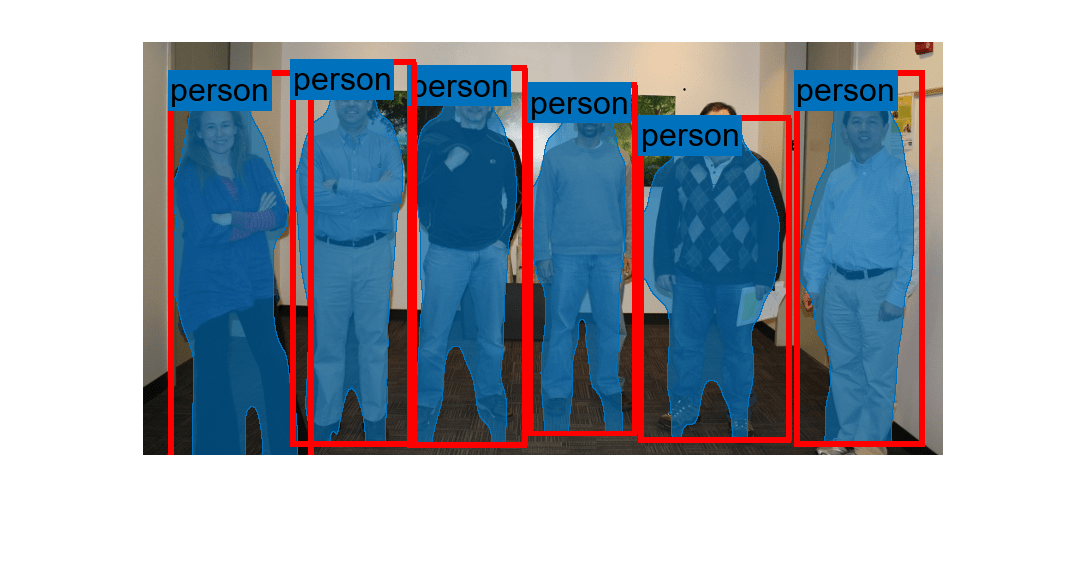

imTest = imread("visionteam.jpg");

Segment the objects and their masks using the segmentObjects function. Before performing prediction, the segmentObjects function performs these preprocessing steps on the input image:

- Zero-center the image using the COCO data set mean.

- Resize the image to the input size of the network while maintaining the aspect ratio (letterboxing).
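The following minimal sketch illustrates what these two steps do. It is not the code that segmentObjects runs internally. The per-channel mean values are placeholders (an assumption), and the sketch pads only the bottom and right edges, whereas the real letterboxing may position the image differently. It uses the peppers.png sample image that ships with MATLAB.

```matlab
% Sketch of zero-centering plus letterboxing. meanRGB holds placeholder
% values (assumption), not the constants segmentObjects actually uses.
I = imread("peppers.png");                      % 384-by-512-by-3 uint8
targetSize = [800 800];
meanRGB = reshape([123.7 116.3 103.5],1,1,3);   % hypothetical per-channel mean

% Zero-center: subtract the per-channel mean using implicit expansion
Icentered = single(I) - single(meanRGB);

% Letterbox: scale by the limiting side so the aspect ratio is preserved...
[h,w,~] = size(Icentered);
scale = min(targetSize ./ [h w]);
newSize = min(round(scale*[h w]),targetSize);
Iresized = imresize(Icentered,newSize);

% ...then fill the unused area with zeros (here, at the bottom and right)
Iletterboxed = zeros([targetSize 3],'single');
Iletterboxed(1:newSize(1),1:newSize(2),:) = Iresized;
```

Because the image is zero-centered before it is padded, the zero-valued border in this sketch corresponds to the mean color rather than to black.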

[masks,labels,scores,boxes] = segmentObjects(net,imTest,Threshold=0.98);
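segmentObjects returns one entry per detected instance. The masks output stacks one logical mask per detection along the third dimension, and labels, scores, and boxes each have one row per detection. The sketch below uses small hypothetical stand-ins for those outputs (not real predictions). It shows how to keep only the person detections and how to measure the area of each mask in pixels.

```matlab
% Hypothetical stand-ins for segmentObjects outputs (three detections)
masks = false(100,120,3);
masks(10:40,10:40,1) = true;
masks(50:90,20:60,2) = true;
masks(30:70,70:110,3) = true;
labels = categorical(["person";"car";"person"]);
scores = single([0.99;0.985;0.995]);
boxes = [10 10 31 31; 20 50 41 41; 70 30 41 41];   % [x y w h] per row

% Select the person detections with a logical index
keep = labels == "person";
personMasks = masks(:,:,keep);
personBoxes = boxes(keep,:);
personScores = scores(keep);

% Number of people and pixel area of each person mask
numPeople = nnz(keep);
areas = squeeze(sum(personMasks,[1 2]));
```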

Visualize the predictions by overlaying the detected masks on the image using the insertObjectMask function.

overlayedImage = insertObjectMask(imTest,masks);
imshow(overlayedImage)

Show the bounding boxes and labels on the objects.

showShape("rectangle",gather(boxes),Label=labels,LineColor="r")

Load Training Data

Create directories to store the COCO training images and annotation data.

imageFolder = fullfile(dataFolder,"images");
captionsFolder = fullfile(dataFolder,"annotations");
if ~exist(imageFolder,"dir")
    mkdir(imageFolder)
    mkdir(captionsFolder)
end

The COCO 2014 training image data set [2] consists of 82,783 images. The annotation data includes instance-level annotations for each image, such as bounding boxes, category labels, and instance segmentations. Download the COCO 2014 training images and annotations from https://cocodataset.org/#download by clicking the "2014 Train images" and "2014 Train/Val annotations" links, respectively. Extract the image files into the folder specified by imageFolder. Extract the annotation files, which include instances_train2014.json, into the folder specified by captionsFolder.

annotationFile = fullfile(captionsFolder,"instances_train2014.json");
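Before you unpack the annotations, you can confirm that the downloads are where the rest of the example expects them. This sketch repeats the folder definitions from above so that it runs on its own. The *.jpg pattern assumes that the images were extracted directly into imageFolder rather than into a subfolder.

```matlab
% Folder layout used by this example
dataFolder = fullfile(tempdir,"coco");
imageFolder = fullfile(dataFolder,"images");
annotationFile = fullfile(dataFolder,"annotations","instances_train2014.json");

% Count the extracted training images (COCO 2014 train has 82,783)
imageFiles = dir(fullfile(imageFolder,"*.jpg"));
numImages = numel(imageFiles);
fprintf("Found %d of 82,783 training images.\n",numImages)

% Warn instead of erroring so that the check also runs before the download finishes
if ~isfile(annotationFile)
    warning("Annotation file not found: %s",annotationFile)
end
```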

str = fileread(annotationFile);

Prepare Data for Training

To train a Mask R-CNN, you need this data:

- RGB images that serve as input to the network, specified as H-by-W-by-3 numeric arrays.

- Bounding boxes for objects in the RGB images, specified as NumObjects-by-4 matrices, with rows in the format [x y w h].

- Instance labels, specified as NumObjects-by-1 categorical vectors.

- Instance masks. Each mask is the segmentation of one instance in the image. The COCO data set specifies object instances using polygon coordinates formatted as NumObjects-by-2 cell arrays. Each row of the array contains the (x,y) coordinates of a polygon along the boundary of one instance in the image. However, the Mask R-CNN in this example requires binary masks specified as logical arrays of size H-by-W-by-NumObjects.
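This toy example illustrates the conversion. Two hypothetical polygons, each an N-by-2 list of [x y] vertices, are rasterized into an H-by-W-by-NumObjects logical array using poly2mask. The [x y w h] box for each instance comes from the extent of its polygon vertices. The box convention used here (w = xmax - xmin) is an assumption, and the image is a blank placeholder.

```matlab
H = 100; W = 120;
% Hypothetical instances: one N-by-2 [x y] vertex list per object
polygons = {[10 10; 50 10; 50 60; 10 60], ...   % rectangle
            [70 20; 110 20; 90 80]};            % triangle
classNames = ["person","car"];
numObjects = numel(polygons);

masks = false(H,W,numObjects);    % H-by-W-by-NumObjects logical
boxes = zeros(numObjects,4);      % NumObjects-by-4, rows are [x y w h]
for k = 1:numObjects
    xy = polygons{k};
    masks(:,:,k) = poly2mask(xy(:,1),xy(:,2),H,W);   % rasterize the polygon
    boxes(k,:) = [min(xy) max(xy)-min(xy)];          % [xmin ymin width height]
end
labels = categorical(["person";"car"],classNames);   % NumObjects-by-1

I = zeros(H,W,3,'uint8');            % placeholder RGB image
sample = {I,boxes,labels,masks};     % one training sample as a 1-by-4 cell
```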

Initialize Training Data Parameters

trainClassNames = ["person","car"];
numClasses = length(trainClassNames);
imageSizeTrain = [800 800 3];

Format COCO Annotation Data as MAT Files

The COCO API for MATLAB enables you to access the annotation data. Download the COCO API for MATLAB from https://github.com/cocodataset/cocoapi by clicking the "Code" button and selecting "Download ZIP." Extract the cocoapi-master directory and its contents to the folder specified by dataFolder. If needed for your operating system, compile the gason parser by following the instructions in the gason.m file within the MatlabAPI subdirectory.

Specify the directory location for the COCO API for MATLAB and add the directory to the path.

cocoAPIDir = fullfile(dataFolder,"cocoapi-master","MatlabAPI");
addpath(cocoAPIDir);
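With the COCO API on the path, you can query the annotations directly. For example, you can count how many training images contain at least one of the training classes. This sketch follows the calls in the cocoDemo.m script that ships with the COCO API (CocoApi, getCatIds, getImgIds). Treat these calls as an unverified assumption about that third-party API. The sketch queries each class separately and takes the union, so an image counts if it contains either class. Loading the full annotation file can take noticeable time and memory.

```matlab
% Assumes cocoapi-master/MatlabAPI is on the path (added above)
annotationFile = fullfile(tempdir,"coco","annotations","instances_train2014.json");
coco = CocoApi(char(annotationFile));

% Image IDs per class; the union covers images with a person or a car
personCatId = coco.getCatIds('catNms',{'person'});
carCatId = coco.getCatIds('catNms',{'car'});
personImgIds = coco.getImgIds('catIds',personCatId);
carImgIds = coco.getImgIds('catIds',carCatId);
trainImgIds = union(personImgIds,carImgIds);

fprintf("%d images contain a person or a car.\n",numel(trainImgIds))
```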

Specify the folder in which to store the MAT files.

unpackAnnotationDir = fullfile(dataFolder,"annotations_unpacked","matFiles");
if ~exist(unpackAnnotationDir,"dir")
    mkdir(unpackAnnotationDir)
end

Extract the COCO annotations to MAT files using the unpackAnnotations helper function, which is attached to this example as a supporting file. Each MAT file corresponds to a single training image and contains the file name, bounding boxes, instance labels, and instance masks for each training image. The function converts object instances specified as polygon coordinates to binary masks using the poly2mask function.

unpackAnnotations(trainClassNames,annotationFile,imageFolder,unpackAnnotationDir);

Create Datastore

The Mask R-CNN expects input data as a 1-by-4 cell array containing the RGB training image, bounding boxes, instance labels, and instance masks.

Create a file datastore with a custom read function, cocoAnnotationMATReader, that reads the content of the unpacked annotation MAT files, converts grayscale training images to RGB, and returns the data as a 1-by-4 cell array in the required format. The custom read function is attached to this example as a supporting file.

ds = fileDatastore(unpackAnnotationDir, ...
    ReadFcn=@(x)cocoAnnotationMATReader(x,imageFolder));

Preview the data returned by the datastore.

data = preview(ds)

data=1×4 cell array

{428×640×3 uint8} {16×4 double} {16×1 categorical} {428×640×16 logical}
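The cocoAnnotationMATReader supporting file is not reproduced here. As an assumption about what such a reader does, this sketch creates a small MAT file with hypothetical variable names (imageName, bbox, label, masks). It then loads the file, reads a grayscale image, replicates the single channel to produce an RGB image, and packs the result into the 1-by-4 cell layout shown in the preview. The actual supporting file may store different variables.

```matlab
% Create a hypothetical annotation MAT file and a grayscale image to read
tmpDir = tempname;
mkdir(tmpDir)
imageName = "demo.png";
imwrite(zeros(48,64,'uint8'),fullfile(tmpDir,imageName))
bbox = [5 5 20 10];                  % [x y w h]
label = categorical("person");
masks = false(48,64);
masks(5:14,5:24) = true;             % rows are y, columns are x
save(fullfile(tmpDir,'demo.mat'),'imageName','bbox','label','masks')

% Reader logic: load the annotations, read the image, promote grayscale to RGB
S = load(fullfile(tmpDir,'demo.mat'));
I = imread(fullfile(tmpDir,S.imageName));
if size(I,3) == 1
    I = repmat(I,1,1,3);             % copy the single channel into R, G, and B
end
data = {I,S.bbox,S.label,S.masks}
```

Wrapping the reader lines in a function that takes the MAT file name and the image folder gives a function with the same shape as the ReadFcn used above.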

Configure Mask R-CNN Network

The Mask R-CNN builds upon a Faster R-CNN with a ResNet-50 base network. To perform transfer learning with the pretrained Mask R-CNN network, use the maskrcnn object to load the pretrained network and customize it for the new set of classes and input size. By default, the maskrcnn object uses the same anchor boxes that were used to train the network on the COCO data set.

net = maskrcnn("resnet50-coco",trainClassNames,InputSize=imageSizeTrain)

net = 

maskrcnn with properties:

ModelName: 'maskrcnn'

ClassNames: {'person' 'car'}

InputSize: [800 800 3]

AnchorBoxes: [15×2 double]

If you want to use custom anchor boxes specific to the training data set, you can estimate the anchor boxes using the estimateAnchorBoxes function. Then, specify the anchor boxes using the AnchorBoxes name-value argument when you create the maskrcnn object.
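Anchor boxes are usually judged by how well their shapes overlap the ground-truth boxes. A common score, and the idea behind the meanIoU value that estimateAnchorBoxes can return, works as follows. Align each box and each anchor at a common center, take the best intersection-over-union (IoU) for each ground-truth box, and average those values. This sketch computes that score by hand for hypothetical sizes given as [height width] rows. It illustrates the idea and is not the toolbox's implementation.

```matlab
% Hypothetical ground-truth box sizes and candidate anchors, [height width]
gtSizes = [10 10; 20 40];
anchors = [10 10; 20 20];

h = gtSizes(:,1);   w = gtSizes(:,2);     % G-by-1 column vectors
ah = anchors(:,1)'; aw = anchors(:,2)';   % 1-by-A row vectors

% Center-aligned overlap; implicit expansion yields G-by-A matrices
interArea = min(h,ah) .* min(w,aw);
unionArea = h.*w + ah.*aw - interArea;
iou = interArea ./ unionArea;

% Best anchor for each ground-truth box, averaged over all boxes
bestIoU = max(iou,[],2);
meanIoU = mean(bestIoU);   % 0.75 for these sizes
```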

Train Network

Specify the options for SGDM optimization and train the network for 10 epochs.

Specify the ExecutionEnvironment name-value argument as "gpu" to train on a GPU. We recommend training on a GPU with at least 12 GB of available memory. Using a GPU requires Parallel Computing Toolbox™ and a CUDA®-enabled NVIDIA® GPU. For more information, see GPU Computing Requirements (Parallel Computing Toolbox).
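If you are not sure whether the current machine meets these requirements, you can run a check like the following before creating the training options. It uses the canUseGPU function and the AvailableMemory property of the object returned by gpuDevice. The 12 GB threshold comes from the recommendation above. The executionEnvironment variable is a hypothetical name whose value you could pass as the ExecutionEnvironment argument.

```matlab
% Fall back to the CPU unless a supported GPU is available
executionEnvironment = "cpu";
if canUseGPU
    executionEnvironment = "gpu";
    g = gpuDevice;
    availableGB = g.AvailableMemory/1e9;   % bytes to GB
    if availableGB < 12
        warning("Only %.1f GB of GPU memory is available; training might run out of memory.",availableGB)
    end
end
disp("Execution environment: " + executionEnvironment)
```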

options = trainingOptions("sgdm", ...
    InitialLearnRate=0.001, ...
    LearnRateSchedule="piecewise", ...
    LearnRateDropPeriod=1, ...
    LearnRateDropFactor=0.95, ...
    Plot="none", ...
    Momentum=0.9, ...
    MaxEpochs=10, ...
    MiniBatchSize=2, ...
    BatchNormalizationStatistics="moving", ...
    ResetInputNormalization=false, ...
    ExecutionEnvironment="gpu", ...
    VerboseFrequency=50);
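With LearnRateSchedule="piecewise", the software multiplies the learning rate by LearnRateDropFactor every LearnRateDropPeriod epochs. Assuming that the first drop takes effect at the start of epoch 2, this sketch tabulates the learning rate for each of the 10 epochs configured above.

```matlab
initialLearnRate = 0.001;
dropFactor = 0.95;
dropPeriod = 1;          % epochs between drops
maxEpochs = 10;

% Rate for each epoch: one multiplication by dropFactor per completed period
epochs = 1:maxEpochs;
learnRate = initialLearnRate * dropFactor.^floor((epochs-1)/dropPeriod);
disp(table(epochs',learnRate',VariableNames=["Epoch","LearnRate"]))
```

With these settings, the rate decays gradually, from 0.001 in the first epoch to about 6.3e-4 in the last.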

To train the Mask R-CNN network, set the doTraining variable in the following code to true, and then train the network using the trainMaskRCNN function. Because the training data set is similar to the data on which the pretrained network was trained, you can freeze the weights of the feature extraction backbone using the FreezeSubNetwork name-value argument.

doTraining = true;
if doTraining
    [net,info] = trainMaskRCNN(ds,net,options,FreezeSubNetwork="backbone");
    modelDateTime = string(datetime("now",Format="yyyy-MM-dd-HH-mm-ss"));
    save("trainedMaskRCNN-"+modelDateTime+".mat","net");
end

Using the trained network, you can perform instance segmentation on test images, as demonstrated in the "Segment People in Image" section of this example.
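The training code saves the network to a time-stamped MAT file in the current folder. Assuming that you run the following sketch from that same folder, it reloads the most recent file and segments the test image again. Because the yyyy-MM-dd-HH-mm-ss timestamp is zero-padded, sorting the file names as text also sorts them chronologically.

```matlab
% Find networks saved by the training code in the current folder
files = dir("trainedMaskRCNN-*.mat");
names = sort(string({files.name}));

if ~isempty(names)
    % The last name in sorted order has the newest timestamp
    s = load(names(end),"net");
    net = s.net;

    imTest = imread("visionteam.jpg");
    [masks,labels,scores,boxes] = segmentObjects(net,imTest,Threshold=0.98);
    imshow(insertObjectMask(imTest,masks))
end
```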

References

[1] He, Kaiming, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. “Mask R-CNN.” Preprint, submitted January 24, 2018. https://arxiv.org/abs/1703.06870.

[2] Lin, Tsung-Yi, Michael Maire, Serge Belongie, Lubomir Bourdev, Ross Girshick, James Hays, Pietro Perona, Deva Ramanan, C. Lawrence Zitnick, and Piotr Dollár. “Microsoft COCO: Common Objects in Context.” Preprint, submitted May 1, 2014. https://arxiv.org/abs/1405.0312v3.

See Also

maskrcnn | trainMaskRCNN | segmentObjects | transform | insertObjectMask

Topics

- Getting Started with Mask R-CNN for Instance Segmentation

- Deep Learning in MATLAB (Deep Learning Toolbox)

- Datastores for Deep Learning (Deep Learning Toolbox)