Markov Decision Process - Pendulum Control

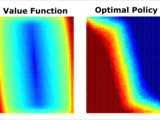

This submission takes a single pendulum driven by a torque actuator and models it as a Markov Decision Process (MDP). It uses linear barycentric interpolation over a uniform grid to discretize the continuous state space. Value iteration then computes the optimal policy, which is displayed as a plot. Finally, a simulation demonstrates how to evaluate the optimal policy.
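
The pipeline described above can be sketched in Python. This is not the submission's MATLAB code; it is a minimal sketch under stated assumptions. The nondimensional dynamics θ'' = u − sin θ, the three torque levels, the quadratic cost on distance from upright, the discount factor, the grid sizes, and the helper names `step`, `barycentric`, `build_mdp`, `value_iteration` and `simulate` are all hypothetical. Each grid cell is split along its diagonal into two triangles. The barycentric weights of a successor state inside its triangle are then used as transition probabilities to that triangle's three vertices, which turns the continuous dynamics into a finite MDP. In the simulation, the policy is evaluated by barycentrically interpolating the tabulated torque. That choice is also an assumption; the original may evaluate its policy differently.

```python
import math

# Hypothetical parameters, not taken from the original MATLAB submission.
# Nondimensional pendulum: theta_ddot = u - sin(theta), theta = 0 hangs down.
N_TH, N_W = 31, 31            # grid points in angle and angular rate
W_MAX, U_MAX = 6.0, 1.0       # angular-rate bound and torque limit
DT, GAMMA = 0.1, 0.95         # time step and discount factor
ACTIONS = (-U_MAX, 0.0, U_MAX)
D_TH = 2 * math.pi / N_TH     # angle grid is periodic on [-pi, pi)
D_W = 2 * W_MAX / (N_W - 1)   # rate grid spans [-W_MAX, W_MAX]


def step(th, w, u):
    """One semi-implicit Euler step of the pendulum dynamics."""
    w_next = w + DT * (u - math.sin(th))
    return th + DT * w_next, w_next


def barycentric(th, w):
    """Return [(state_index, weight), ...] for the triangle containing (th, w).

    Each grid cell is split along its diagonal into two triangles. The three
    weights are non-negative, sum to one, and reproduce the (clamped) point."""
    x = ((th + math.pi) / D_TH) % N_TH              # wrap the angle
    y = min(max((w + W_MAX) / D_W, 0.0), N_W - 1)   # clamp the rate
    i0, j = int(x), min(int(y), N_W - 2)
    fx, fy = x - i0, y - j
    i = i0 % N_TH
    i1 = (i + 1) % N_TH
    if fx >= fy:
        verts = [((i, j), 1 - fx), ((i1, j), fx - fy), ((i1, j + 1), fy)]
    else:
        verts = [((i, j), 1 - fy), ((i, j + 1), fy - fx), ((i1, j + 1), fx)]
    return [(a * N_W + b, wt) for (a, b), wt in verts]


def build_mdp():
    """Tabulate transitions and stage costs for every grid node and action."""
    trans, cost = [], []
    for a in range(N_TH):
        for b in range(N_W):
            th, w = -math.pi + a * D_TH, -W_MAX + b * D_W
            err = math.pi - abs(th)   # angular distance from upright
            trans.append([barycentric(*step(th, w, u)) for u in ACTIONS])
            cost.append([DT * (err**2 + 0.1 * w**2 + 0.01 * u**2)
                         for u in ACTIONS])
    return trans, cost


def value_iteration(trans, cost, tol=1e-4):
    """Minimize discounted cost; return the value table and greedy torques."""
    J = [0.0] * len(trans)
    while True:
        J_new, policy = [], []
        for s, rows in enumerate(trans):
            q = [cost[s][k] + GAMMA * sum(wt * J[t] for t, wt in rows[k])
                 for k in range(len(ACTIONS))]
            k_best = min(range(len(q)), key=q.__getitem__)
            J_new.append(q[k_best])
            policy.append(ACTIONS[k_best])
        delta = max(abs(new - old) for new, old in zip(J_new, J))
        J = J_new
        if delta < tol:
            return J, policy


def simulate(policy, th=0.0, w=0.0, steps=200):
    """Roll out the dynamics, interpolating the tabulated torque at each step."""
    for _ in range(steps):
        u = sum(wt * policy[s] for s, wt in barycentric(th, w))
        th, w = step(th, w, u)
    return th, w


if __name__ == "__main__":
    trans, cost = build_mdp()
    J, policy = value_iteration(trans, cost)
    print("final state (theta, omega):", simulate(policy))
```

The barycentric weights are non-negative and sum to one, so each tabulated row is a valid probability distribution. With γ < 1 the Bellman update is a contraction, which is why the plain fixed-point loop in `value_iteration` converges.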

Cite As

Matthew Kelly (2025). Markov Decision Process - Pendulum Control (https://github.com/MatthewPeterKelly/MDP_Pendulum), GitHub. Retrieved .

Platform Compatibility

Windows, macOS, Linux

Version History

| Version | Release Notes |
|---|---|
| 1.4.0.0 | Changed name |
| 1.3.0.0 | Updated photo |
| 1.2.0.0 | Added a photo |
| 1.1.0.0 | Improved error handling regarding the MEX functions |
| 1.0.0.0 | |