Vision Detection Generator

Detect objects and lanes from visual measurements in a driving scenario or RoadRunner Scenario

Libraries: Automated Driving Toolbox / Driving Scenario and Sensor Modeling

Description

The Vision Detection Generator block generates detections from camera measurements taken by a vision sensor mounted on an ego vehicle.

The block derives detections from simulated actor poses and generates these detections at intervals equal to the sensor update interval. By default, detections are referenced to the coordinate system of the ego vehicle. The block can simulate real detections with added random noise and also generate false positive detections. A statistical model generates the measurement noise, true detections, and false positives. To control the random numbers that the statistical model generates, use the random number generator settings on the Measurements tab of the block.

You can use the block with vehicle actors in Driving Scenario and RoadRunner Scenario simulations. For more information, see the Add Sensors to RoadRunner Scenario Using Simulink example.

You can use the Vision Detection Generator to create input to a Multi-Object Tracker block. When building scenarios and sensor models using the Driving Scenario Designer app, the camera sensors exported to Simulink® are output as Vision Detection Generator blocks.

Examples

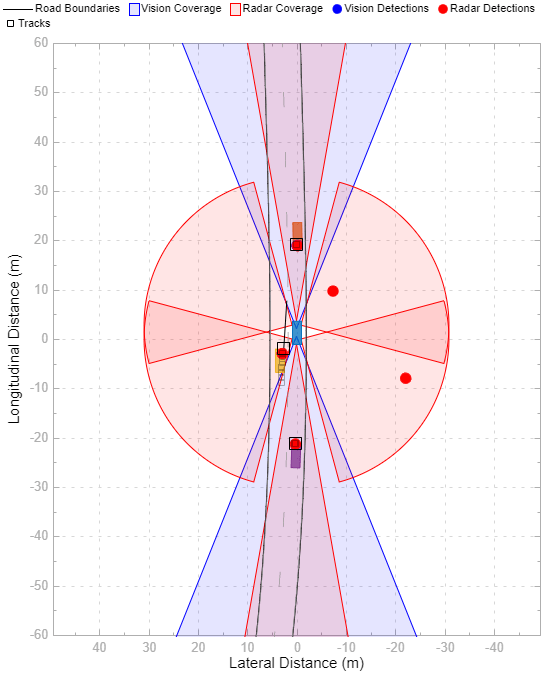

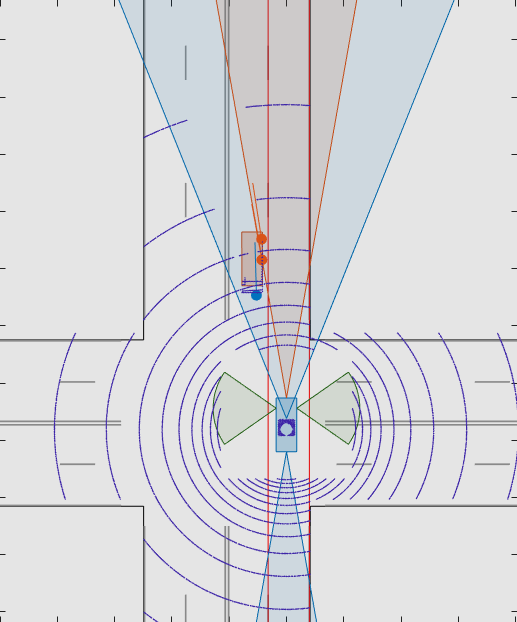

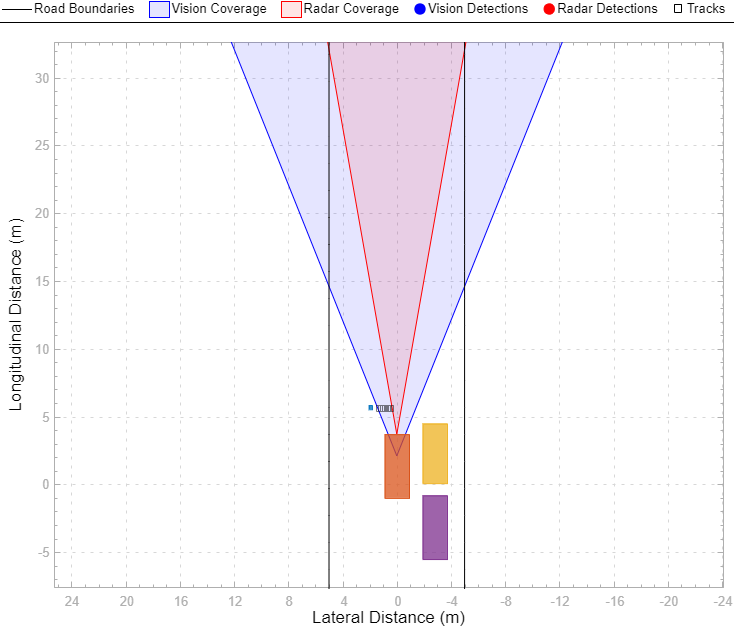

Sensor Fusion Using Synthetic Radar and Vision Data in Simulink

Implement a synthetic data simulation for tracking and sensor fusion in Simulink with Automated Driving Toolbox™.

Adaptive Cruise Control with Sensor Fusion

Implement an automotive adaptive cruise controller using sensor fusion.

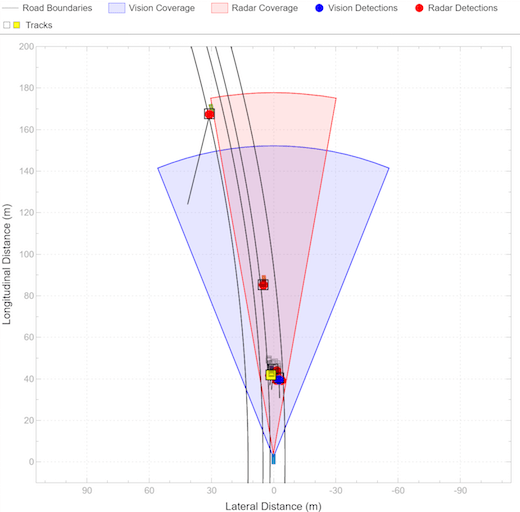

Lane Following Control with Sensor Fusion and Lane Detection

Simulate and generate code for an automotive lane-following controller.

Generate Sensor Blocks Using Driving Scenario Designer

Generate Simulink blocks for a driving scenario and sensors that were built using the Driving Scenario Designer app.

Test Open-Loop ADAS Algorithm Using Driving Scenario

Test open-loop ADAS algorithms in Simulink by using driving scenarios saved from the Driving Scenario Designer app.

Test Closed-Loop ADAS Algorithm Using Driving Scenario

Test closed-loop ADAS algorithms in Simulink by using driving scenarios saved from the Driving Scenario Designer app.

Add Sensors to RoadRunner Scenario Using Simulink

Simulate a RoadRunner Scenario with sensor models defined in Simulink and visualize object and lane detections.

Ports

Input

Scenario actor poses in ego vehicle coordinates, specified as a Simulink bus containing a MATLAB structure.

The structure must contain these fields.

| Field | Description | Type |
|---|---|---|
| NumActors | Number of actors | Nonnegative integer |
| Time | Current simulation time | Real-valued scalar |
| Actors | Actor poses | NumActors-length array of actor pose structures |

Each actor pose structure in Actors must have these fields.

| Field | Description |
|---|---|
| ActorID | Scenario-defined actor identifier, specified as a positive integer. |
| In R2024b: | Front-axle position of the vehicle, specified as a three-element row vector in the form [x y z]. Units are in meters. Note: If the driving scenario does not contain a front-axle trajectory for at least one vehicle, then the |
| Position | Position of actor, specified as a real-valued vector of the form [x y z]. Units are in meters. |
| Velocity | Velocity (v) of actor in the x-, y-, and z-directions, specified as a real-valued vector of the form [vx vy vz]. Units are in meters per second. |
| Roll | Roll angle of actor, specified as a real-valued scalar. Units are in degrees. |
| Pitch | Pitch angle of actor, specified as a real-valued scalar. Units are in degrees. |
| Yaw | Yaw angle of actor, specified as a real-valued scalar. Units are in degrees. |
| AngularVelocity | Angular velocity (ω) of actor in the x-, y-, and z-directions, specified as a real-valued vector of the form [ωx ωy ωz]. Units are in degrees per second. |

Dependencies

To enable this input port, set the Types of detections generated by sensor parameter to Objects only, Lanes with occlusion, or Lanes and objects.
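The layout of the actor poses input can be sketched outside Simulink. The following Python snippet only illustrates the field structure described above and is not the block's API: the helper names `make_actor_pose` and `make_actor_poses` are hypothetical, and in a model this structure arrives on a Simulink bus.

```python
def make_actor_pose(actor_id, position, velocity=(0.0, 0.0, 0.0),
                    roll=0.0, pitch=0.0, yaw=0.0,
                    angular_velocity=(0.0, 0.0, 0.0)):
    """Return one actor pose as a dict with the fields listed in the table.

    Hypothetical helper for illustration; not part of any MathWorks API.
    """
    if not isinstance(actor_id, int) or actor_id <= 0:
        raise ValueError("ActorID must be a positive integer")
    for name, vec in (("Position", position), ("Velocity", velocity),
                      ("AngularVelocity", angular_velocity)):
        if len(vec) != 3:
            raise ValueError(f"{name} must have three elements")
    return {
        "ActorID": actor_id,
        "Position": tuple(float(c) for c in position),                 # meters
        "Velocity": tuple(float(c) for c in velocity),                 # meters per second
        "Roll": float(roll),                                           # degrees
        "Pitch": float(pitch),                                         # degrees
        "Yaw": float(yaw),                                             # degrees
        "AngularVelocity": tuple(float(c) for c in angular_velocity),  # degrees per second
    }


def make_actor_poses(time, actors):
    """Wrap a list of actor poses in the top-level structure."""
    actors = list(actors)
    return {"NumActors": len(actors), "Time": float(time), "Actors": actors}


# Two actors in ego vehicle coordinates at simulation time 0.5 s.
poses = make_actor_poses(0.5, [
    make_actor_pose(1, (30, 0, 0), velocity=(-2, 0, 0)),
    make_actor_pose(2, (15, 3.5, 0), yaw=90),
])
```

Keeping NumActors derived from the list length avoids the two fields drifting apart when actors are added or removed.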

Lane boundaries in ego vehicle coordinates, specified as a Simulink bus containing a MATLAB structure.

The structure must contain these fields.

| Field | Description | Type |
|---|---|---|
| NumLaneBoundaries | Number of lane boundaries | Nonnegative integer |
| Time | Current simulation time | Real scalar |
| LaneBoundaries | Lane boundaries starting from the leftmost lane with respect to the ego vehicle. | NumLaneBoundaries-length array of lane boundary structures |

Each lane boundary structure in LaneBoundaries must

have these fields.

| Field | Description |

| Lane boundary coordinates, specified as a real-valued N-by-3 matrix, where N is the number of lane boundary coordinates. Lane boundary coordinates define the position of points on the boundary at specified longitudinal distances away from the ego vehicle, along the center of the road.

This matrix also includes the boundary coordinates at zero distance from the ego vehicle. These coordinates are to the left and right of the ego-vehicle origin, which is located under the center of the rear axle. Units are in meters. |

| Lane boundary curvature at each row of the Coordinates matrix, specified

as a real-valued N-by-1 vector. N is the

number of lane boundary coordinates. Units are in radians per meter. |

| Derivative of lane boundary curvature at each row of the Coordinates

matrix, specified as a real-valued N-by-1 vector.

N is the number of lane boundary coordinates. Units are

in radians per square meter. |

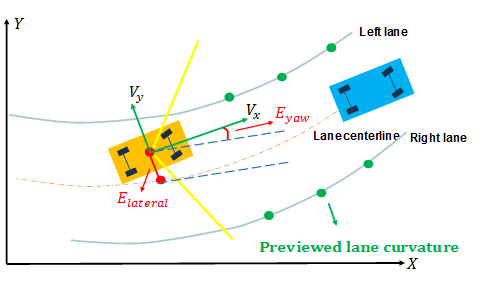

| Initial lane boundary heading angle, specified as a real scalar. The heading angle of the lane boundary is relative to the ego vehicle heading. Units are in degrees. |

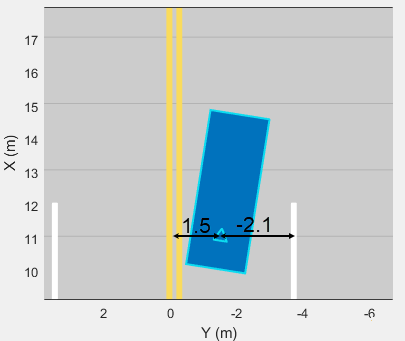

| Lateral offset of the ego vehicle position from the lane boundary, specified as a real scalar. An offset to a lane boundary to the left of the ego vehicle is positive. An offset to the right of the ego vehicle is negative. Units are in meters. In this image, the ego vehicle is offset 1.5 meters from the left lane and 2.1 meters from the right lane.

|

| Type of lane boundary marking, specified as one of these values:

|

| Saturation strength of the lane boundary marking, specified as a real scalar from 0 to

1. A value of |

| Lane boundary width, specified as a positive real scalar. In a double-line lane marker, the same width is used for both lines and for the space between lines. Units are in meters. |

| Length of dash in dashed lines, specified as a positive real scalar. In a double-line lane marker, the same length is used for both lines. |

| Length of space between dashes in dashed lines, specified as a positive real scalar. In a dashed double-line lane marker, the same space is used for both lines. |

Dependencies

To enable this input port, set the Types of detections generated by sensor parameter to Lanes only, Lanes with occlusion, or Lanes and objects.
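To see how the lateral offset, heading angle, curvature, and curvature derivative of a lane boundary relate to each other, it can help to evaluate a boundary at a look-ahead distance. The sketch below is an assumption-laden illustration, not the block's algorithm: it uses a third-order Taylor approximation of a clothoid, which is reasonable only for small heading angles and moderate distances, and the function name `boundary_lateral_position` is hypothetical. Units follow the table above (meters, degrees, radians per meter, radians per square meter).

```python
import math


def boundary_lateral_position(x, lateral_offset, heading_deg,
                              curvature, curvature_derivative):
    """Approximate the lateral position (m) of a lane boundary at distance x (m).

    Assumption: third-order expansion around the ego vehicle, with all four
    boundary values taken at zero longitudinal distance.
    """
    heading = math.radians(heading_deg)
    return (lateral_offset
            + math.tan(heading) * x
            + curvature * x ** 2 / 2.0
            + curvature_derivative * x ** 3 / 6.0)


# Left boundary 1.5 m from the ego vehicle, curving left at 0.01 rad/m.
y_at_10m = boundary_lateral_position(10.0, 1.5, 0.0, 0.01, 0.0)
```

With zero heading and zero curvature the boundary stays at the lateral offset for every distance, which is a quick sanity check on the sign conventions.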

Output

Object detections, returned as a Simulink bus containing a MATLAB structure. For more details about buses, see Create Nonvirtual Buses (Simulink).

You can pass object detections from these sensors and other sensors to a tracker, such as a Multi-Object Tracker block, and generate tracks.

The detections structure has this form:

| Field | Description | Type |
|---|---|---|
| NumDetections | Number of detections | Integer |
| IsValidTime | False when updates are requested at times that are between block invocation intervals | Boolean |
| Detections | Object detections | Array of object detection structures of length set by the Maximum number of reported detections parameter. Only NumDetections of these detections are actual detections. |

The object detection structure contains these properties.

| Property | Definition |
|---|---|
| Time | Measurement time |
| Measurement | Object measurements |
| MeasurementNoise | Measurement noise covariance matrix |
| SensorIndex | Unique ID of the sensor |
| ObjectClassID | Object classification |
| MeasurementParameters | Parameters used by initialization functions of nonlinear Kalman tracking filters |
| ObjectAttributes | Additional information passed to tracker |

The Measurement field reports the position and velocity of a measurement in the coordinate system specified by Coordinate system used to report detections. This field is a real-valued column vector of the form [x; y; z; vx; vy; vz]. Position units are in meters, and velocity units are in meters per second.

The MeasurementNoise field is a 6-by-6 matrix that reports the measurement noise covariance for each coordinate in the Measurement field.
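As a rough illustration of how a consumer might read these two fields, the sketch below splits a six-element measurement into position and velocity and reads per-coordinate standard deviations from the diagonal of the covariance. The function names are hypothetical and the numeric values are made up for the example; the snippet says nothing about how the block computes the covariance.

```python
import math


def split_measurement(measurement):
    """Split [x, y, z, vx, vy, vz] into position (m) and velocity (m/s)."""
    if len(measurement) != 6:
        raise ValueError("Measurement must have six elements")
    return tuple(measurement[:3]), tuple(measurement[3:])


def coordinate_std(measurement_noise):
    """Return per-coordinate standard deviations from a 6-by-6 covariance."""
    if len(measurement_noise) != 6 or any(len(row) != 6 for row in measurement_noise):
        raise ValueError("MeasurementNoise must be 6-by-6")
    return [math.sqrt(measurement_noise[i][i]) for i in range(6)]


# Illustrative values only: a target 20 m ahead, closing at 3 m/s.
position, velocity = split_measurement([20.0, -1.5, 0.0, -3.0, 0.2, 0.0])

# Diagonal covariance with made-up variances for each coordinate.
covariance = [[0.0] * 6 for _ in range(6)]
for i, variance in enumerate([0.25, 0.04, 0.01, 1.0, 1.0, 1.0]):
    covariance[i][i] = variance
std = coordinate_std(covariance)
```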

The MeasurementParameters field is a structure with these fields.

| Parameter | Definition |
|---|---|
| Frame | Enumerated type indicating the frame used to report measurements. The Vision Detection Generator block reports detections in either ego or sensor Cartesian coordinates, which are both rectangular coordinate frames. Therefore, for this block, Frame is always set to 'rectangular'. |
| OriginPosition | 3-D vector offset of the sensor origin from the ego vehicle origin. The vector is derived from the Sensor's (x,y) position (m) and Sensor's height (m) parameters of the block. |
| Orientation | Orientation of the vision sensor coordinate system with respect to the ego vehicle coordinate system. The orientation is derived from the Yaw angle of sensor mounted on ego vehicle (deg), Pitch angle of sensor mounted on ego vehicle (deg), and Roll angle of sensor mounted on ego vehicle (deg) parameters of the block. |
| HasVelocity | Indicates whether measurements contain velocity. |

The ObjectAttributes property of each detection is a structure with these fields.

| Field | Definition |
|---|---|
| TargetIndex | Identifier of the actor, ActorID, that generated the detection. For false alarms, this value is negative. |

Dependencies

To enable this output port, set the Types of detections generated by sensor parameter to Objects only, Lanes with occlusion, or Lanes and objects.

Lane boundary detections, returned as a Simulink bus containing a MATLAB structure. The structure has these fields:

| Field | Description | Type |
|---|---|---|
| Time | Lane detection time | Real scalar |
| IsValidTime | False when updates are requested at times that are between block invocation intervals | Boolean |
| SensorIndex | Unique identifier of sensor | Positive integer |
| NumLaneBoundaries | Number of lane boundary detections | Nonnegative integer |
| LaneBoundaries | Lane boundary detections | Array of clothoidLaneBoundary objects |

Dependencies

To enable this output port, set the Types of detections generated by sensor parameter to Lanes only, Lanes with occlusion, or Lanes and objects.

Parameters

Parameters

Sensor Identification

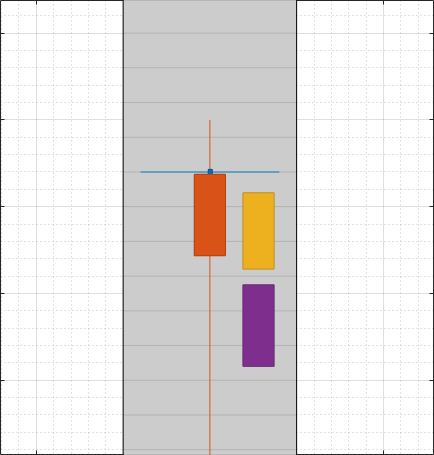

Unique sensor identifier, specified as a positive integer. The sensor identifier distinguishes detections that come from different sensors in a multisensor system. If a model contains multiple sensor blocks with the same sensor identifier, the Bird's-Eye Scope displays an error.

Example: 5

Types of detections generated by the sensor, specified as Objects only, Lanes only, Lanes with occlusion, or Lanes and objects.

- When set to Objects only, no road information is used to occlude actors.
- When set to Lanes only, no actor information is used to detect lanes.
- When set to Lanes with occlusion, actors in the camera field of view can impair the sensor's ability to detect lanes.
- When set to Lanes and objects, the sensor generates both object detections and occluded lane detections.

Required time interval between sensor updates, specified as a positive real scalar. The value of this parameter must be an integer multiple of the Actors input port data interval. Updates requested from the sensor between update intervals contain no detections. Units are in seconds.

Required time interval between lane detection updates, specified as a positive real scalar. The vision detection generator is called at regular time intervals. It generates new lane detections at intervals defined by this parameter, which must be an integer multiple of the simulation time interval. Updates requested from the sensor between update intervals contain no lane detections. Units are in seconds.
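The two interval constraints above can be checked numerically before running a model. The following is a minimal sketch with hypothetical helper names. It assumes that updates fall at time zero and at every whole multiple of the update interval, which the text above does not state explicitly, and it uses a small tolerance because values such as 0.1 are not exactly representable in floating point.

```python
def is_integer_multiple(interval, base_interval, tol=1e-9):
    """Return True if interval is a positive whole multiple of base_interval."""
    ratio = interval / base_interval
    return ratio >= 1.0 - tol and abs(ratio - round(ratio)) <= tol


def is_update_time(sim_time, update_interval, tol=1e-9):
    """Return True if sim_time falls on a sensor update.

    Assumption: updates occur at t = 0 and at every multiple of
    update_interval. Requests at other times carry no detections.
    """
    ratio = sim_time / update_interval
    return abs(ratio - round(ratio)) <= tol


# A 0.1 s sensor update interval on a 0.01 s input data interval is valid.
ok = is_integer_multiple(0.1, 0.01)
# A 0.1 s interval on a 0.03 s data interval is not.
bad = is_integer_multiple(0.1, 0.03)
```

A request at 0.15 s with a 0.1 s update interval falls between updates, so `is_update_time(0.15, 0.1)` is false under the stated assumption.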

Sensor Extrinsics

Location of the vision sensor center, specified as a real-valued 1-by-2 vector. The Sensor's (x,y) position (m) and Sensor's height (m) parameters define the coordinates of the vision sensor with respect to the ego vehicle coordinate system. The default value corresponds to a forward-facing vision sensor mounted to a sedan dashboard. Units are in meters.

Vision sensor height above the ground plane, specified as a positive real scalar. The height is defined with respect to the vehicle ground plane. The Sensor's (x,y) position (m) and Sensor's height (m) parameters define the coordinates of the vision sensor with respect to the ego vehicle coordinate system. The default value corresponds to a forward-facing vision sensor mounted on a sedan dashboard. Units are in meters.

Example: 0.25

Yaw angle of vision sensor, specified as a real scalar. Yaw angle is the angle between the center line of the ego vehicle and the optical axis of the camera. A positive yaw angle corresponds to a clockwise rotation when looking in the positive direction of the z-axis of the ego vehicle coordinate system. Units are in degrees.

Example: -4.0

Pitch angle of sensor, specified as a real scalar. The pitch angle is the angle between the optical axis of the camera and the x-y plane of the ego vehicle coordinate system. A positive pitch angle corresponds to a clockwise rotation when looking in the positive direction of the y-axis of the ego vehicle coordinate system. Units are in degrees.

Example: 3.0

Roll angle of the vision sensor, specified as a real scalar. The roll angle is the angle of rotation of the optical axis of the camera around the x-axis of the ego vehicle coordinate system. A positive roll angle corresponds to a clockwise rotation when looking in the positive direction of the x-axis of the coordinate system. Units are in degrees.

Output Port Settings

Source of object bus name, specified as Auto or Property. If you select Auto, the block automatically creates a bus name. If you select Property, specify the bus name using the Specify an object bus name parameter.

Example: Property

Source of output lane bus name, specified as Auto or Property. If you select Auto, the block automatically creates a bus name. If you select Property, specify the bus name using the Specify an output lane bus name parameter.

Example: Property

Name of object bus, specified as a valid bus name.

Example: objectbus

Dependencies

To enable this parameter, set the Source of object bus name parameter to Property.

Name of output lane bus, specified as a valid bus name.

Example: lanebus

Dependencies

To enable this parameter, set the Source of output lane bus name parameter to Property.

Detection Reporting

Maximum number of detections reported by the sensor, specified as a positive integer. Detections are reported in order of increasing distance from the sensor until the maximum number is reached.

Example: 100

Dependencies

To enable this parameter, set the Types of detections generated by sensor parameter to Objects only or Lanes and objects.
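The reporting rule for the maximum number of detections (nearest first, then truncate) can be sketched as follows. This is only an illustration of the ordering described above: the function name `report_nearest` is hypothetical, and the sketch assumes that the measurement positions and the sensor position are expressed in the same coordinate system.

```python
import math


def report_nearest(detections, sensor_position, max_reported):
    """Return at most max_reported detections, ordered by increasing range.

    Each detection is a dict whose "Measurement" entry starts with [x, y, z].
    """
    def range_to_sensor(detection):
        return math.dist(detection["Measurement"][:3], sensor_position)

    return sorted(detections, key=range_to_sensor)[:max_reported]


# Three illustrative detections; only the two nearest are reported.
detections = [
    {"Measurement": [50.0, 0.0, 0.0, 0.0, 0.0, 0.0]},
    {"Measurement": [10.0, 2.0, 0.0, 0.0, 0.0, 0.0]},
    {"Measurement": [30.0, -1.0, 0.0, 0.0, 0.0, 0.0]},
]
reported = report_nearest(detections, (0.0, 0.0, 0.0), max_reported=2)
```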

Maximum number of reported lanes, specified as a positive integer.

Example: 100

Dependencies

To enable this parameter, set the Types of detections generated by sensor parameter to Lanes only, Lanes with occlusion, or Lanes and objects.

Coordinate system of reported detections, specified as one of these values:

- Ego Cartesian: Detections are reported in the ego vehicle Cartesian coordinate system.
- Sensor Cartesian: Detections are reported in the sensor Cartesian coordinate system.
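The relationship between the two coordinate systems can be sketched with a rigid-body transform built from the sensor mounting parameters. This is a sketch under stated assumptions rather than the block's implementation: it assumes a right-handed yaw-pitch-roll (Z-Y-X) rotation order and a sensor frame whose x-axis points along the viewing direction, and the function names are hypothetical.

```python
import math


def rotation_zyx(yaw_deg, pitch_deg, roll_deg):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in degrees.

    Assumption: right-handed rotations applied in yaw, pitch, roll order.
    """
    y, p, r = (math.radians(a) for a in (yaw_deg, pitch_deg, roll_deg))
    cy, sy = math.cos(y), math.sin(y)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def sensor_to_ego(point, sensor_xy, sensor_height,
                  yaw_deg=0.0, pitch_deg=0.0, roll_deg=0.0):
    """Map a point from sensor Cartesian to ego Cartesian coordinates (m)."""
    rot = rotation_zyx(yaw_deg, pitch_deg, roll_deg)
    origin = (sensor_xy[0], sensor_xy[1], sensor_height)
    return tuple(
        sum(rot[i][j] * point[j] for j in range(3)) + origin[i]
        for i in range(3)
    )


# A point 10 m ahead of a sensor that is yawed 90 degrees ends up
# 10 m to the left of the mounting position in ego coordinates.
p_ego = sensor_to_ego((10.0, 0.0, 0.0), sensor_xy=(1.9, 0.0),
                      sensor_height=1.1, yaw_deg=90.0)
```

With all three angles at zero the transform reduces to a pure translation by the mounting position and height.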

Simulation

- Interpreted execution: Simulate the model using the MATLAB interpreter. This option shortens startup time. In Interpreted execution mode, you can debug the source code of the block.
- Code generation: Simulate the model using generated C/C++ code. The first time you run a simulation, Simulink generates C/C++ code for the block. The C code is reused for subsequent simulations as long as the model does not change. This option requires additional startup time.

Measurements

Settings

Maximum detection range, specified as a positive real scalar. The vision sensor cannot detect objects beyond this range. Units are in meters.

Example: 250

Object Detector Settings

Bounding box accuracy, specified as a positive real scalar. This quantity defines the accuracy with which the detector can match a bounding box to a target. Units are in pixels.

Example: 9

Noise intensity used for filtering position and velocity measurements, specified as a positive real scalar. Noise intensity defines the standard deviation of the process noise of the internal constant-velocity Kalman filter used in a vision sensor. The filter models the process noise using a piecewise-constant white noise acceleration model. Noise intensity is typically of the order of the maximum acceleration magnitude expected for a target. Units are in meters per second squared.

Example: 2
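For intuition about how the noise intensity enters a constant-velocity filter, the sketch below builds the standard textbook process noise covariance for a piecewise-constant white noise acceleration model along one axis, with state [position, velocity]. It is not taken from the block's source: the function name `cv_process_noise` is hypothetical, and the time step `dt` is an assumed input standing in for the sensor update interval.

```python
def cv_process_noise(noise_intensity, dt):
    """Process noise covariance for one axis of a constant-velocity model.

    noise_intensity: standard deviation of the acceleration noise (m/s^2).
    dt: time step in seconds (assumed equal to the sensor update interval).
    Returns the 2-by-2 covariance for the state [position, velocity].
    """
    q = noise_intensity ** 2
    return [
        [q * dt ** 4 / 4.0, q * dt ** 3 / 2.0],
        [q * dt ** 3 / 2.0, q * dt ** 2],
    ]


# Noise intensity of 2 m/s^2 with a 0.1 s update interval.
Q = cv_process_noise(2.0, 0.1)
```

Doubling the noise intensity scales every entry of the covariance by four, which is why this parameter is usually matched to the largest acceleration expected from a target.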

Maximum detectable object speed, specified as a nonnegative real scalar. Units are in meters per second.

Example: 20

Maximum allowed occlusion of an object, specified as a real scalar in the range [0 1). Occlusion is the fraction of the total surface area of an object that is not visible to the sensor. A value of 1 indicates that the object is fully occluded. Units are dimensionless.

Example: 0.2

Minimum height and width of an object that the vision sensor detects within an image, specified as a [minHeight,minWidth] vector of positive values. The 2-D projected height of an object must be greater than or equal to minHeight. The projected width of an object must be greater than or equal to minWidth. Units are in pixels.

Example: [25 20]

Probability of detecting a target, specified as a positive real scalar less than or equal to 1. This quantity defines the probability that the sensor detects a detectable object. A detectable object is an object that satisfies the minimum detectable size, maximum range, maximum speed, and maximum allowed occlusion constraints.

Example: 0.95
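The detectability constraints and the detection probability combine in two stages: an object must first satisfy every constraint, and only then is it detected with the given probability. The following is a minimal sketch of that logic with hypothetical function names and made-up limit values; the block's internal statistical model is not documented here, so a plain uniform draw is used as a stand-in.

```python
import random


def is_detectable(range_m, speed_mps, occlusion, box_height_px, box_width_px,
                  *, max_range, max_speed, max_occlusion, min_size):
    """Return True if an object meets all four detectability constraints."""
    min_height, min_width = min_size
    return (range_m <= max_range
            and speed_mps <= max_speed
            and occlusion <= max_occlusion
            and box_height_px >= min_height
            and box_width_px >= min_width)


def draw_detection(rng, detection_probability):
    """Return True with the given probability (stand-in for the sensor model)."""
    return rng.random() < detection_probability


# Illustrative limits only; these are not the block defaults.
limits = dict(max_range=150.0, max_speed=50.0,
              max_occlusion=0.5, min_size=(15, 15))

rng = random.Random(2001)  # a fixed seed makes the draws repeatable
detected = (is_detectable(40.0, 12.0, 0.1, 30, 45, **limits)
            and draw_detection(rng, 0.95))
```

Seeding the generator, as in the last lines, mirrors the purpose of the random number generator settings: the same seed reproduces the same sequence of detection outcomes.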

Number of false detections generated by the vision sensor per image, specified as a nonnegative real scalar.

Example: 1.0

Lane Detector Settings

Minimum size of a projected lane marking in the camera image that can be detected by the sensor after accounting for curvature, specified as a 1-by-2 real-valued vector, [minHeight minWidth]. Lane markings must exceed both of these values to be detected. Units are in pixels.

Dependencies

To enable this parameter, set the Types of detections generated by sensor parameter to Lanes only, Lanes with occlusion, or Lanes and objects.

Accuracy of lane boundaries, specified as a positive real scalar. This parameter defines the accuracy with which the lane sensor can place a lane boundary. Units are in pixels.

Example: 2.5

Dependencies

To enable this parameter, set the Types of detections generated by sensor parameter to Lanes only, Lanes with occlusion, or Lanes and objects.

Random Number Generator Settings

Select this parameter to add noise to vision sensor measurements. Otherwise, the measurements are noise-free. The MeasurementNoise property of each detection is always computed and is not affected by the value you specify for the Add noise to measurements parameter.

Method to set the random number generator seed, specified as one of the options in the table.

| Option | Description |
|---|---|
| Repeatable | The block generates a random initial seed for the first simulation and reuses this seed for all subsequent simulations. Select this parameter to generate repeatable results from the statistical sensor model. To change this initial seed, at the MATLAB command prompt, enter: |
| Specify seed | Specify your own random initial seed for reproducible results by using the Specify seed parameter. |
| Not repeatable | The block generates a new random initial seed after each simulation run. Select this parameter to generate nonrepeatable results from the statistical sensor model. |

Random number generator seed, specified as a nonnegative integer less than 2^32.

Example: 2001

Dependencies

To enable this parameter, set the Random Number Generator Settings parameter to Specify seed.

Actor Profiles

Method to specify actor profiles, which are the physical and radar characteristics of all actors in the driving scenario, specified as one of these options:

- From Scenario Reader block: The block obtains the actor profiles from the scenario specified by the Scenario Reader block or RoadRunner Scenario Reader block.
- Parameters: The block obtains the actor profiles from the parameters that become enabled on the Actor Profiles tab.
- MATLAB expression: The block obtains the actor profiles from the MATLAB expression specified by the MATLAB expression for actor profiles parameter.

MATLAB expression for actor profiles, specified as a MATLAB structure, a MATLAB structure array, or a valid MATLAB expression that produces such a structure or structure array.

If your Scenario Reader block reads data from a drivingScenario object, to obtain the actor profiles directly from this object, set this expression to call the actorProfiles function on the object. For example: actorProfiles(scenario).

Example: struct('ClassID',5,'Length',5.0,'Width',2,'Height',2,'OriginOffset',[-1.55,0,0])

Dependencies

To enable this parameter, set the Select method to specify actor profiles parameter to MATLAB expression.

Scenario-defined actor identifier, specified as a positive integer or length-L vector of unique positive integers. L must equal the number of actors input into the Actors input port. The vector elements must match ActorID values of the actors. You can specify Unique identifier for actors as []. In this case, the same actor profile parameters apply to all actors.

Example: [1,2]

Dependencies

To enable this parameter, set the Select method to specify actor profiles parameter to Parameters.

User-defined classification identifier, specified as an integer or length-L vector of integers. When Unique identifier for actors is a vector, this parameter is a vector of the same length with elements in one-to-one correspondence to the actors in Unique identifier for actors. When Unique identifier for actors is empty, [], you must specify this parameter as a single integer whose value applies to all actors.

Example: 2

Dependencies

To enable this parameter, set the Select method to specify actor profiles parameter to Parameters.

Length of cuboid, specified as a positive real scalar or length-L vector of positive values. When Unique identifier for actors is a vector, this parameter is a vector of the same length with elements in one-to-one correspondence to the actors in Unique identifier for actors. When Unique identifier for actors is empty, [], you must specify this parameter as a positive real scalar whose value applies to all actors. Units are in meters.

Example: 6.3

Dependencies

To enable this parameter, set the Select method to specify actor profiles parameter to Parameters.

Width of cuboid, specified as a positive real scalar or length-L vector of positive values. When Unique identifier for actors is a vector, this parameter is a vector of the same length with elements in one-to-one correspondence to the actors in Unique identifier for actors. When Unique identifier for actors is empty, [], you must specify this parameter as a positive real scalar whose value applies to all actors. Units are in meters.

Example: 4.7

Dependencies

To enable this parameter, set the Select method to specify actor profiles parameter to Parameters.

Height of cuboid, specified as a positive real scalar or length-L vector of positive values. When Unique identifier for actors is a vector, this parameter is a vector of the same length with elements in one-to-one correspondence to the actors in Unique identifier for actors. When Unique identifier for actors is empty, [], you must specify this parameter as a positive real scalar whose value applies to all actors. Units are in meters.

Example: 2.0

Dependencies

To enable this parameter, set the Select method to specify actor profiles parameter to Parameters.

Rotational center of the actor, specified as a length-L cell array of real-valued 1-by-3 vectors. Each vector represents the offset of the rotational center of the actor from the bottom-center of the actor. For vehicles, the offset corresponds to the point on the ground beneath the center of the rear axle. When Unique identifier for actors is a vector, this parameter is a cell array of vectors with cells in one-to-one correspondence to the actors in Unique identifier for actors. When Unique identifier for actors is empty, [], you must specify this parameter as a cell array of one element containing the offset vector whose values apply to all actors. Units are in meters.

Example: [ -1.35, .2, .3 ]

Dependencies

To enable this parameter, set the Select method to specify actor profiles parameter to Parameters.

Camera Intrinsics

Camera focal length, in pixels, specified as a two-element real-valued vector. See also the FocalLength property of cameraIntrinsics.

Example: [480,320]

Optical center of the camera, in pixels, specified as a two-element real-valued vector. See also the PrincipalPoint property of cameraIntrinsics.

Example: [480,320]

Image size produced by the camera, in pixels, specified as a two-element vector of positive integers. See also the ImageSize property of cameraIntrinsics.

Example: [240,320]

Radial distortion coefficients, specified as a two-element or three-element real-valued vector. For details on setting these coefficients, see the RadialDistortion property of cameraIntrinsics.

Example: [1,1]

Tangential distortion coefficients, specified as a two-element real-valued vector. For details on setting these coefficients, see the TangentialDistortion property of cameraIntrinsics.

Example: [1,1]

Skew angle of the camera axes, specified as a real scalar. See also the Skew property of cameraIntrinsics.

Example: 0.1

Algorithms

The vision sensor models a monocular camera that produces 2-D camera images. To project the coordinates of these 2-D images into the 3-D world coordinates used in driving scenarios, the sensor algorithm assumes that the z-position (height) of every image point on the bottom edge of the target’s image bounding box is at ground level. The ground plane is defined by the height property of the vision detection generator, which specifies the offset of the monocular camera above the ground plane. With this projection, the vertical locations of objects in the produced images are strongly correlated to their heights above the road. However, if the road is not flat and the heights of objects differ from the height of the sensor, then the sensor reports inaccurate detections. For an example that shows this behavior, see Model Vision Sensor Detections.
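The ground-plane assumption can be made concrete with a simplified pinhole camera. The sketch below is an illustration under strong assumptions, not the block's algorithm: the camera has zero yaw, pitch, and roll, no lens distortion or skew, image rows increase downward, and the focal length and principal point are given as [fx, fy] and [cx, cy] pairs. The function names are hypothetical.

```python
def project_to_image(forward, left, up, focal_length, principal_point,
                     sensor_height):
    """Project a point (m) to pixel coordinates with a level pinhole camera.

    forward, left: position relative to the camera along the ground.
    up: height of the point above the ground plane.
    """
    fx, fy = focal_length
    cx, cy = principal_point
    if forward <= 0:
        raise ValueError("point must be in front of the camera")
    u = cx - fx * left / forward
    v = cy + fy * (sensor_height - up) / forward
    return u, v


def pixel_to_ground(u, v, focal_length, principal_point, sensor_height):
    """Back-project a pixel to the ground, assuming the point has zero height."""
    fx, fy = focal_length
    cx, cy = principal_point
    dv = v - cy
    if dv <= 0:
        raise ValueError("pixel is at or above the horizon")
    forward = fy * sensor_height / dv
    left = -(u - cx) * forward / fx
    return forward, left


focal, center, height = (800.0, 800.0), (320.0, 240.0), 1.1

# A bounding-box bottom edge that really is on the ground, 20 m ahead,
# is recovered at about 20 m.
u, v = project_to_image(20.0, 0.0, 0.0, focal, center, height)
on_ground_range, _ = pixel_to_ground(u, v, focal, center, height)

# The same point raised 0.5 m (for example, on a rising road) is placed
# much farther away, because the ground assumption no longer holds.
u2, v2 = project_to_image(20.0, 0.0, 0.5, focal, center, height)
raised_range, _ = pixel_to_ground(u2, v2, focal, center, height)
```

The second case shows the failure mode described above: when the bottom edge of the bounding box is not actually at ground level, the recovered range is biased.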

Extended Capabilities

For driving scenario workflows, the Vision Detection Generator block supports:

Rapid accelerator mode simulation.

Standalone deployment using Simulink Coder™ and for Simulink Real-Time™ targets.

For RoadRunner Scenario workflows, the Vision Detection Generator block supports standalone deployment using ready-to-run packages. For more information about generating ready-to-run packages for your Simulink model, see Publish Ready-to-Run Actor Behaviors for Reuse and Simulation Performance.

Version History

Introduced in R2017b

Starting in R2024a, Simulink automatically instantiates a SensorSimulation object and adds sensors defined in the model to the RoadRunner scenario. Hence, registering the Vision Detection Generator block manually using the addSensors function is not required. For more information on the new workflow, see the Add Sensors to RoadRunner Scenario Using Simulink example.

In releases prior to R2024a, you must manually register the block as a sensor model using the addSensors function. For more information, see Adding sensors manually using addSensors function is not required for Simulink models.

As of R2023a, the Simulink buses created by this block no longer appear in the MATLAB workspace.

See Also

Apps

Blocks

- Driving Radar Data Generator | Detection Concatenation | Multi-Object Tracker | Scenario Reader | Lidar Point Cloud Generator | Simulation 3D Vision Detection Generator

Objects

Topics

- Create Nonvirtual Buses (Simulink)
