patchGANDiscriminator

Description

net = patchGANDiscriminator(inputSize) creates a PatchGAN discriminator network for input of size inputSize.

For more information about the PatchGAN network architecture, see PatchGAN Discriminator Network.

This function requires Deep Learning Toolbox™.

net = patchGANDiscriminator(inputSize,Name=Value) modifies aspects of the PatchGAN network using name-value arguments.

You can create a 1-by-1 PatchGAN discriminator network, called a pixel discriminator network, by specifying the NetworkType name-value argument as "pixel". For more information about the pixel discriminator network architecture, see Pixel Discriminator Network.

Examples

Specify the input size of the network for a color image of size 256-by-256 pixels.

inputSize = [256 256 3];

Create the PatchGAN discriminator network with the specified input size.

net = patchGANDiscriminator(inputSize)

net =

dlnetwork with properties:

Layers: [13×1 nnet.cnn.layer.Layer]

Connections: [12×2 table]

Learnables: [16×3 table]

State: [6×3 table]

InputNames: {'input_top'}

OutputNames: {'conv2d_final'}

Initialized: 1

View summary with summary.

Display the network.

analyzeNetwork(net)

Specify the input size of the network for a color image of size 256-by-256 pixels.

inputSize = [256 256 3];

Create the pixel discriminator network with the specified input size.

net = patchGANDiscriminator(inputSize,"NetworkType","pixel")

net =

dlnetwork with properties:

Layers: [7×1 nnet.cnn.layer.Layer]

Connections: [6×2 table]

Learnables: [8×3 table]

State: [2×3 table]

InputNames: {'input_top'}

OutputNames: {'conv2d_final'}

Initialized: 1

View summary with summary.

Display the network.

analyzeNetwork(net)

Input Arguments

Network input size, specified as a 3-element vector of positive integers. inputSize has the form [H W C], where H is the height, W is the width, and C is the number of channels. If the input to the discriminator is a channel-wise concatenated dlarray (Deep Learning Toolbox) object, then C must be the concatenated size.

Example: [28 28 3] specifies an input size of 28-by-28 pixels for a 3-channel image.
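A minimal sketch of the concatenated-input case, assuming a conditional (pix2pix-style) setup in which a hypothetical 3-channel source image is paired with a 3-channel target image. The point is only that C in inputSize must match the channel count after concatenation; the random data stands in for real images.

```matlab
% Hypothetical paired images, formatted as spatial-spatial-channel-batch.
source = dlarray(rand(256,256,3,"single"),"SSCB");
target = dlarray(rand(256,256,3,"single"),"SSCB");

% Concatenate along the channel dimension: 256-by-256-by-6.
pair = cat(3,source,target);

% C must equal the concatenated channel count (3 + 3 = 6).
inputSize = [256 256 size(pair,3)];
net = patchGANDiscriminator(inputSize);

% Score the concatenated pair with the discriminator.
score = predict(net,pair);
```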

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Example: net = patchGANDiscriminator(inputSize,FilterSize=5) creates a discriminator whose convolution layers have a filter of size 5-by-5 pixels.

Before R2021a, use commas to separate each name and value, and enclose Name in quotes.

Example: net = patchGANDiscriminator(inputSize,"FilterSize",5) creates a discriminator whose convolution layers have a filter of size 5-by-5 pixels.
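As a quick check that the two syntaxes are interchangeable, this sketch builds the same discriminator both ways and compares the resulting layer counts. It assumes a release that supports the Name=Value form (R2021a or later).

```matlab
inputSize = [256 256 3];

% Name=Value syntax.
netA = patchGANDiscriminator(inputSize,FilterSize=5);

% Equivalent comma-separated syntax with the name in quotes.
netB = patchGANDiscriminator(inputSize,"FilterSize",5);

% Both calls should produce networks with the same layer structure.
sameLayerCount = numel(netA.Layers) == numel(netB.Layers);
```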

Type of discriminator network, specified as one of these values.

- "patch" – Create a PatchGAN discriminator.
- "pixel" – Create a pixel discriminator, which is a 1-by-1 PatchGAN discriminator.

Data Types: char | string

Number of downsampling operations of the network, specified as a positive integer. The discriminator network downsamples the input by a factor of 2^NumDownsamplingBlocks. This argument is ignored when you specify NetworkType as "pixel".
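A short sketch of the arithmetic and of the "ignored for pixel" rule. Each downsampling operation halves the height and width, so four operations reduce them by 2^4 = 16. The layer-count check relies only on the 7-layer default pixel network shown in the Examples section.

```matlab
inputSize = [256 256 3];
numBlocks = 4;

% Overall downsampling factor of the encoder: 2^4 = 16.
factor = 2^numBlocks;

% PatchGAN discriminator with four downsampling operations.
net = patchGANDiscriminator(inputSize,NumDownsamplingBlocks=numBlocks);

% For the pixel discriminator, NumDownsamplingBlocks has no effect,
% so this should match the default pixel network.
pixelNet = patchGANDiscriminator(inputSize,NetworkType="pixel", ...
    NumDownsamplingBlocks=numBlocks);
```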

Number of filters in the first discriminator block, specified as a positive integer.
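A minimal sketch, assuming the argument for this setting is named NumFiltersInFirstBlock (the name does not appear in the text above, so confirm it in your release). The code then reads the filter count back from the first convolution layer.

```matlab
inputSize = [256 256 3];

% Assumption: the argument is named NumFiltersInFirstBlock.
net = patchGANDiscriminator(inputSize,NumFiltersInFirstBlock=32);

% Find the convolution layers and inspect the first one.
isConv = arrayfun(@(L) isa(L,"nnet.cnn.layer.Convolution2DLayer"),net.Layers);
convLayers = net.Layers(isConv);
firstConv = convLayers(1);
disp(firstConv.NumFilters)
```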

Filter size of convolution layers, specified as a positive integer or 2-element vector of positive integers of the form [height width]. When you specify the filter size as a scalar, the filter has equal height and width. Typical filters have height and width between 1 and 4. This argument has an effect only when you specify NetworkType as "patch".
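A sketch of the 2-element form, using an arbitrary non-square filter of height 3 and width 4 for illustration. The filter size is read back from the first convolution layer to confirm the [height width] ordering.

```matlab
inputSize = [256 256 3];

% Non-square filter: height 3, width 4.
net = patchGANDiscriminator(inputSize,FilterSize=[3 4]);

% Inspect the first convolution layer.
isConv = arrayfun(@(L) isa(L,"nnet.cnn.layer.Convolution2DLayer"),net.Layers);
convLayers = net.Layers(isConv);
firstConv = convLayers(1);
disp(firstConv.FilterSize)
```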

Style of padding used in the network, specified as one of these values.

| PaddingValue | Description |
|---|---|
| Numeric scalar | Pad with the specified numeric value |
| "symmetric-include-edge" | Pad using mirrored values of the input, including the edge values |
| "symmetric-exclude-edge" | Pad using mirrored values of the input, excluding the edge values |
| "replicate" | Pad using repeated border elements of the input |
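A minimal sketch comparing two padding styles, assuming the argument for this setting is named ConvolutionPaddingValue (the name is not given in the text above; check it against your release). A numeric scalar pads with a constant, while "replicate" repeats the border pixels.

```matlab
inputSize = [256 256 3];

% Assumption: the argument is named ConvolutionPaddingValue.
% Pad with zeros.
netZero = patchGANDiscriminator(inputSize,ConvolutionPaddingValue=0);

% Pad by repeating the border elements of the input.
netReplicate = patchGANDiscriminator(inputSize,ConvolutionPaddingValue="replicate");
```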

Weight initialization used in convolution layers, specified as "glorot", "he", "narrow-normal", or a function handle. For more information, see Specify Custom Weight Initialization Function (Deep Learning Toolbox).
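A sketch of the function-handle option, assuming the argument is named ConvolutionWeightsInitializer (not stated in the text above). The hypothetical initializer draws zero-mean Gaussian weights with standard deviation 0.02. Like other custom weight initializers, it receives the size of the weights array and returns an array of that size.

```matlab
inputSize = [256 256 3];

% Hypothetical initializer: N(0, 0.02^2) weights of the requested size.
initFcn = @(sz) 0.02*randn(sz,"single");

% Assumption: the argument is named ConvolutionWeightsInitializer.
net = patchGANDiscriminator(inputSize,ConvolutionWeightsInitializer=initFcn);

% Sanity check of the handle itself on an example weight size.
W = initFcn([4 4 3 64]);
```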

Activation function to use in the network, specified as one of these values. For more information and a list of available layers, see Activation Layers (Deep Learning Toolbox).

- "relu" – Use a reluLayer (Deep Learning Toolbox)
- "leakyRelu" – Use a leakyReluLayer (Deep Learning Toolbox) with a scale factor of 0.2
- "elu" – Use an eluLayer (Deep Learning Toolbox)
- A layer object
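A sketch of the built-in and layer-object forms, assuming the argument is named ActivationLayer (the name is not shown above). Passing a layer object lets you set parameters that the built-in options fix, such as a leaky ReLU scale of 0.1 instead of 0.2.

```matlab
inputSize = [256 256 3];

% Assumption: the argument is named ActivationLayer.
% Built-in option: ELU activations.
netElu = patchGANDiscriminator(inputSize,ActivationLayer="elu");

% Layer object: leaky ReLU with a scale factor of 0.1.
netLeaky = patchGANDiscriminator(inputSize,ActivationLayer=leakyReluLayer(0.1));
```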

Activation function after the final convolution layer, specified as one of these values. For more information and a list of available layers, see Activation Layers (Deep Learning Toolbox).

- "tanh" – Use a tanhLayer (Deep Learning Toolbox)
- "sigmoid" – Use a sigmoidLayer (Deep Learning Toolbox)
- "softmax" – Use a softmaxLayer (Deep Learning Toolbox)
- "none" – Do not use a final activation layer
- A layer object
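A minimal sketch, assuming the argument is named FinalActivationLayer (not shown above). With a sigmoid final activation, every output score lies in [0, 1], which suits a loss that expects probabilities. The 64-by-64 input and random mini-batch of two images are arbitrary choices for illustration.

```matlab
inputSize = [64 64 3];

% Assumption: the argument is named FinalActivationLayer.
net = patchGANDiscriminator(inputSize,FinalActivationLayer="sigmoid");

% Hypothetical mini-batch of two random images.
X = dlarray(rand([inputSize 2],"single"),"SSCB");
Y = predict(net,X);

% Sigmoid outputs are bounded between 0 and 1.
scores = extractdata(Y);
```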

Normalization operation to use after each convolution, specified as one of these values. For more information and a list of available layers, see Normalization Layers (Deep Learning Toolbox).

- "instance" – Use an instanceNormalizationLayer (Deep Learning Toolbox)
- "batch" – Use a batchNormalizationLayer (Deep Learning Toolbox)
- "none" – Do not use a normalization layer
- A layer object
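A sketch comparing normalization choices, assuming the argument is named NormalizationLayer (not shown above). The default network in the Examples section has a nonempty State table, which holds running statistics for batch normalization. Removing normalization should leave no such state.

```matlab
inputSize = [256 256 3];

% Assumption: the argument is named NormalizationLayer.
netInstance = patchGANDiscriminator(inputSize,NormalizationLayer="instance");

% No normalization layers, so no batch-normalization statistics to track.
netNone = patchGANDiscriminator(inputSize,NormalizationLayer="none");
```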

Prefix to all layer names in the network, specified as a string or character vector.

Data Types: char | string
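A prefix helps keep layer names distinct when a discriminator and a generator share one workspace or graph. This sketch assumes the argument is named NamePrefix (not shown above) and uses a hypothetical prefix "disc_".

```matlab
inputSize = [256 256 3];

% Assumption: the argument is named NamePrefix.
net = patchGANDiscriminator(inputSize,NamePrefix="disc_");

% Collect all layer names and check that each starts with the prefix.
names = string({net.Layers.Name});
allPrefixed = all(startsWith(names,"disc_"));
```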

Output Arguments

PatchGAN discriminator network, returned as a dlnetwork (Deep Learning Toolbox) object.
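Because the output is a dlnetwork, you can pass formatted dlarray data through it with forward (during training) or predict (during inference). This sketch uses a hypothetical random mini-batch of four 128-by-128 RGB images. In the usual PatchGAN interpretation, each spatial element of Y scores one patch of the corresponding input image.

```matlab
inputSize = [128 128 3];
net = patchGANDiscriminator(inputSize);

% Hypothetical mini-batch of four images in "SSCB" format.
X = dlarray(rand([inputSize 4],"single"),"SSCB");

% Training-mode forward pass; one score map per image.
Y = forward(net,X);
size(Y)
```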

More About

A PatchGAN discriminator network consists of an encoder module that downsamples the input by a factor of 2^NumDownsamplingBlocks. The default network follows the architecture proposed by Zhu et al. [2].

The encoder module consists of an initial block of layers that performs one downsampling operation, NumDownsamplingBlocks - 1 downsampling blocks, and a final block.
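To see how the initial, downsampling, and final blocks map onto concrete layers, you can tabulate the layer names and classes of a default network. This sketch only inspects the network. The 13-row count matches the default network shown in the Examples section.

```matlab
net = patchGANDiscriminator([256 256 3]);

% One row per layer: its name and its class.
layerNames = string({net.Layers.Name})';
layerClasses = string(arrayfun(@(L) class(L),net.Layers,UniformOutput=false));
layerInfo = table(layerNames,layerClasses,VariableNames=["Name","Class"]);
disp(layerInfo)
```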

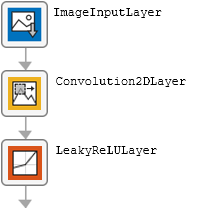

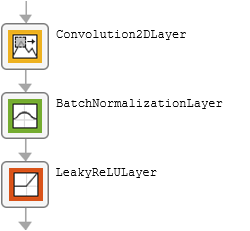

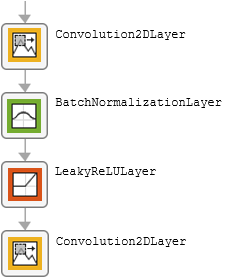

The table describes the blocks of layers that comprise the encoder module.

| Block Type | Layers | Diagram of Default Block |
|---|---|---|
| Initial block | | |
| Downsampling block | | |
| Final block | | |

A pixel discriminator network consists of an initial block and a final block that return an output of size [H W C]. This network does not perform downsampling. The default network follows the architecture proposed by Zhu et al. [2].
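Because the pixel discriminator does not downsample, its output keeps the spatial size of its input. This sketch checks that property on a hypothetical random 256-by-256 RGB image.

```matlab
inputSize = [256 256 3];
net = patchGANDiscriminator(inputSize,NetworkType="pixel");

% Hypothetical single random image in "SSCB" format.
X = dlarray(rand([inputSize 1],"single"),"SSCB");
Y = predict(net,X);

% Spatial dimensions of the output match the input.
outHW = [size(Y,1) size(Y,2)];
```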

The table describes the blocks of layers that comprise the network.

| Block Type | Layers | Diagram of Default Block |
|---|---|---|
| Initial block | | |
| Final block | | |

References

[1] Isola, Phillip, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. "Image-to-Image Translation with Conditional Adversarial Networks." In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5967–76. Honolulu, HI: IEEE, 2017. https://arxiv.org/abs/1611.07004.

[2] Zhu, Jun-Yan, Taesung Park, and Tongzhou Wang. "CycleGAN and pix2pix in PyTorch." https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix.

Version History

Introduced in R2021a
