rectifyStereoImages

Rectify pair of stereo images

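Syntax

[J1,J2,reprojectionMatrix] = rectifyStereoImages(I1,I2,stereoParams)

[___,camMatrix1,camMatrix2,R1,R2] = rectifyStereoImages(I1,I2,stereoParams)

[J1,J2] = rectifyStereoImages(___,Name=Value)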

Description

[J1,J2,reprojectionMatrix] = rectifyStereoImages(I1,I2,stereoParams) undistorts and rectifies the input images I1 and I2 using the stereo parameters of a stereo camera system stored in the stereoParams object. It returns the rectified images, J1 and J2, and the reprojection matrix, reprojectionMatrix.

Use the reconstructScene function with the reprojectionMatrix output to reproject a 2-D point in a disparity map to a 3-D point in the rectified camera coordinate system of camera 1.
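The pipeline described above can be sketched as a single function. The name reconstructFromPair is hypothetical (it is not a toolbox function), and the sketch assumes that stereoParams comes from a prior stereo calibration, for example with estimateCameraParameters. It uses disparitySGM to produce the disparity map that reconstructScene consumes; disparityBM would work in the same position.

```matlab
function xyzPoints = reconstructFromPair(I1, I2, stereoParams)
% reconstructFromPair  Hypothetical helper (not a toolbox function):
% rectify a stereo pair, compute a disparity map, and reproject it to 3-D.
%   I1, I2       : images captured by camera 1 and camera 2
%   stereoParams : stereoParameters object from stereo calibration

% Undistort and rectify both images. reprojectionMatrix encodes the
% rectified geometry needed to convert disparity values to 3-D points.
[J1, J2, reprojectionMatrix] = rectifyStereoImages(I1, I2, stereoParams);

% disparitySGM operates on grayscale images; im2gray converts RGB input
% and leaves grayscale input unchanged.
disparityMap = disparitySGM(im2gray(J1), im2gray(J2));

% Reproject every pixel of the disparity map. The result is an
% M-by-N-by-3 array of [X Y Z] coordinates in the rectified coordinate
% system of camera 1, in the world units used during calibration.
% Pixels whose disparity is NaN (unreliable matches) yield NaN coordinates.
xyzPoints = reconstructScene(disparityMap, reprojectionMatrix);
end
```

For example, after calling xyzPoints = reconstructFromPair(I1,I2,stereoParams), the expression xyzPoints(:,:,3) gives a depth map aligned with the rectified image J1.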

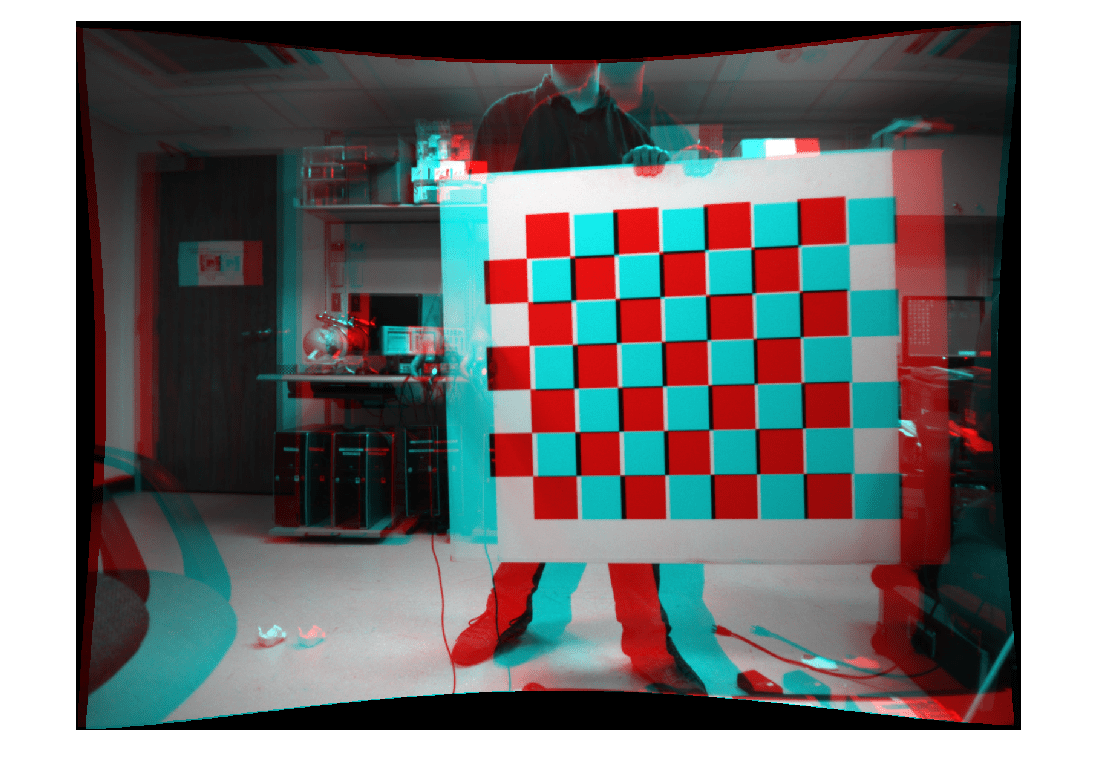

Stereo image rectification projects the images onto a common image plane so that corresponding points have the same row coordinates. This projection makes the images appear as though the two cameras were parallel. To compute a disparity map from the rectified images for 3-D scene reconstruction, use the disparityBM or disparitySGM function.
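The row-alignment property can be checked numerically. The hypothetical helper rectificationRowError below (not a toolbox function) matches SURF features between the rectified images and reports the median vertical offset between matched points, which should be small after a good rectification. SURF features and the median statistic are illustrative choices, not part of the rectification algorithm; a large share of false matches can still inflate the value.

```matlab
function rowError = rectificationRowError(I1, I2, stereoParams)
% rectificationRowError  Hypothetical helper (not a toolbox function):
% estimate how well rectification aligns rows by comparing the
% y-coordinates of matched features in the rectified pair.

[J1, J2] = rectifyStereoImages(I1, I2, stereoParams);
G1 = im2gray(J1);
G2 = im2gray(J2);

% Detect SURF features and extract descriptors in each rectified image.
[features1, validPoints1] = extractFeatures(G1, detectSURFFeatures(G1));
[features2, validPoints2] = extractFeatures(G2, detectSURFFeatures(G2));

% Each row of indexPairs links a feature in J1 to a feature in J2.
indexPairs = matchFeatures(features1, features2);
matched1 = validPoints1(indexPairs(:, 1));
matched2 = validPoints2(indexPairs(:, 2));

% After rectification, corresponding points should differ only in x.
% The median limits the influence of a few false matches.
rowError = median(abs(matched1.Location(:, 2) - matched2.Location(:, 2)));
end
```

Because corresponding points share a row, disparityBM(im2gray(J1),im2gray(J2)) and disparitySGM only need to search along each row rather than across the whole image.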

[___,camMatrix1,camMatrix2,R1,R2] = rectifyStereoImages(I1,I2,stereoParams) also returns the 3-by-4 camera projection matrices camMatrix1 and camMatrix2 for the rectified cameras, and the corresponding rectification rotation matrices, R1 and R2.
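The additional outputs can be requested in one call and sanity-checked. This sketch uses a hypothetical helper, inspectRectifiedCameras (not a toolbox function), and assumes R1 and R2 are returned as 3-by-3 numeric matrices. It checks the documented 3-by-4 size of the projection matrices and verifies that R1 and R2 are proper rotations (orthonormal with determinant +1); the tolerance of 1e-6 is an arbitrary illustrative choice.

```matlab
function [camMatrix1, camMatrix2, R1, R2] = inspectRectifiedCameras(I1, I2, stereoParams)
% inspectRectifiedCameras  Hypothetical helper (not a toolbox function):
% return the rectified camera matrices and rotations, with sanity checks.

% Skip the rectified images and reprojection matrix with ~.
[~, ~, ~, camMatrix1, camMatrix2, R1, R2] = ...
    rectifyStereoImages(I1, I2, stereoParams);

% The projection matrices of the rectified cameras are 3-by-4.
assert(isequal(size(camMatrix1), [3 4]) && isequal(size(camMatrix2), [3 4]), ...
    'Expected 3-by-4 camera projection matrices.')

% A rotation matrix R satisfies R'*R = I and det(R) = 1.
tol = 1e-6;  % illustrative tolerance
rotations = {R1, R2};
for k = 1:numel(rotations)
    R = rotations{k};
    isOrthonormal = norm(R' * R - eye(3)) < tol;
    isProper = abs(det(R) - 1) < tol;
    assert(isOrthonormal && isProper, 'R%d is not a rotation matrix.', k)
end
end
```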

[J1,J2] = rectifyStereoImages(___,Name=Value) specifies options using one or more name-value arguments in addition to any combination of arguments from previous syntaxes. For example, OutputView="valid" crops the rectified images to the largest rectangle that contains valid pixels.

Examples
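To see what the OutputView name-value argument changes, the hypothetical helper compareOutputViews (not a toolbox function) rectifies the same pair with both settings, prints the output sizes, and displays the results side by side with stereoAnaglyph. With "full", the outputs are typically larger than with "valid", because they keep image regions that the cropped view discards.

```matlab
function [validSize, fullSize] = compareOutputViews(I1, I2, stereoParams)
% compareOutputViews  Hypothetical helper (not a toolbox function):
% rectify the same pair with both OutputView settings and compare them.

% "valid" (the default) crops to the largest rectangle of valid pixels.
[J1valid, J2valid] = rectifyStereoImages(I1, I2, stereoParams, OutputView="valid");

% "full" keeps every pixel of the original images, so regions with no
% source data appear as filled borders.
[J1full, J2full] = rectifyStereoImages(I1, I2, stereoParams, OutputView="full");

validSize = [size(J1valid, 1), size(J1valid, 2)];
fullSize  = [size(J1full, 1),  size(J1full, 2)];
fprintf("valid: %d-by-%d, full: %d-by-%d\n", validSize, fullSize);

% Display both results as red-cyan anaglyphs, side by side.
figure
tiledlayout(1, 2)
nexttile
imshow(stereoAnaglyph(J1valid, J2valid))
title('OutputView="valid"')
nexttile
imshow(stereoAnaglyph(J1full, J2full))
title('OutputView="full"')
end
```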

Input Arguments

Name-Value Arguments

Output Arguments

Tips

The Computer Vision Toolbox™ rectification algorithm requires that the epipole of each image lie outside that image. If an epipole lies inside its image, you can first transform the images into polar coordinates, as in the rectification method proposed by Marc Pollefeys, Reinhard Koch, and Luc Van Gool [2].
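The epipole condition in this tip can be checked before rectifying, using isEpipoleInImage together with the FundamentalMatrix property of the stereoParameters object. This is a sketch in a hypothetical helper, epipolesOutsideImages (not a toolbox function). It assumes that isEpipoleInImage(F,imageSize) tests the epipole of the first image and that passing the transposed matrix F' tests the second image, consistent with the fundamental matrix convention [p2 1]*F*[p1 1]' = 0.

```matlab
function ok = epipolesOutsideImages(I1, I2, stereoParams)
% epipolesOutsideImages  Hypothetical helper (not a toolbox function):
% return true if both epipoles lie outside their images.

F = stereoParams.FundamentalMatrix;
imageSize1 = [size(I1, 1), size(I1, 2)];
imageSize2 = [size(I2, 1), size(I2, 2)];

% The epipole of image 1 is the right null vector of F; transposing F
% swaps the roles of the two images (assumed convention, see above).
in1 = isEpipoleInImage(F, imageSize1);
in2 = isEpipoleInImage(F', imageSize2);

ok = ~in1 && ~in2;
if ~ok
    warning("An epipole lies inside an image; rectifyStereoImages may not produce usable results.")
end
end
```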

References

[1] G. Bradski and A. Kaehler, Learning OpenCV: Computer Vision with the OpenCV Library. Sebastopol, CA: O'Reilly, 2008.

Extended Capabilities

Version History

Introduced in R2014a

See Also

Apps

Functions

reconstructScene | disparityBM | disparitySGM | estimateCameraParameters | estimateStereoRectification