AI-Based Time Series Anomaly Detection for Cyber-Physical Systems at the University of Stuttgart

By Sheng Ding, Tagir Fabarisov, Adrian Wolf, and Andrey Morozov, University of Stuttgart

For a relatively simple control system, such as one that drives an actuator after processing input from a few sensors, it is often straightforward for the design engineer to account for anomalous sensor input. As system complexity increases, however, anomaly detection becomes considerably more difficult. The structural and behavioral complexity of modern cyber-physical systems, such as flexible production lines, autonomous vehicles, and medical exoskeletons, increases not only the likelihood of fault-related failures but also the potential cost of those failures.

Researchers and engineers have been improving anomaly detection techniques and methods for many years. Conventional techniques, including statistical analysis based on autoregressive integrated moving average (ARIMA) models, have been eclipsed by newer approaches based on machine learning and deep learning. While these AI-based approaches have been shown to be effective in a variety of anomaly detection scenarios, an internet search for papers on anomaly detection reveals a problematic trend. The typical paper details the application of a single technique for a single, specific type of system. After authoring several such papers ourselves, we recognized that the value of such efforts was limited by their specificity. Instead, a more generalized approach for identifying the most effective anomaly detection methods for virtually any type of cyber-physical system was needed.

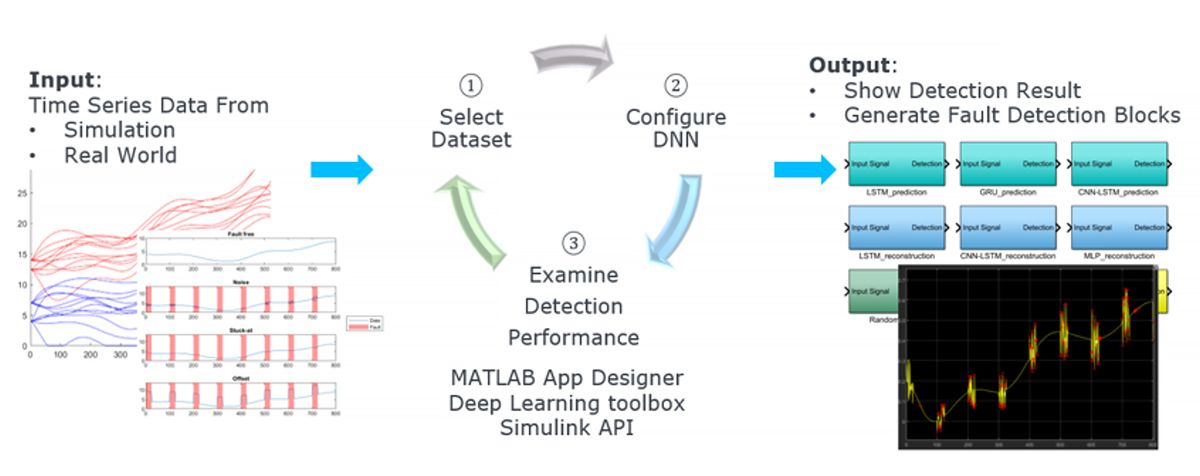

To help meet this need, our research team has developed a software platform for time series anomaly detection (TSAD). Developed in MATLAB® with Deep Learning Toolbox™ and Statistics and Machine Learning Toolbox™, this platform streamlines the process of importing and preprocessing data from real-world or simulated systems; training a variety of deep learning networks, classic machine learning models, and statistical models; and evaluating the best options based on performance and accuracy (Figure 1).

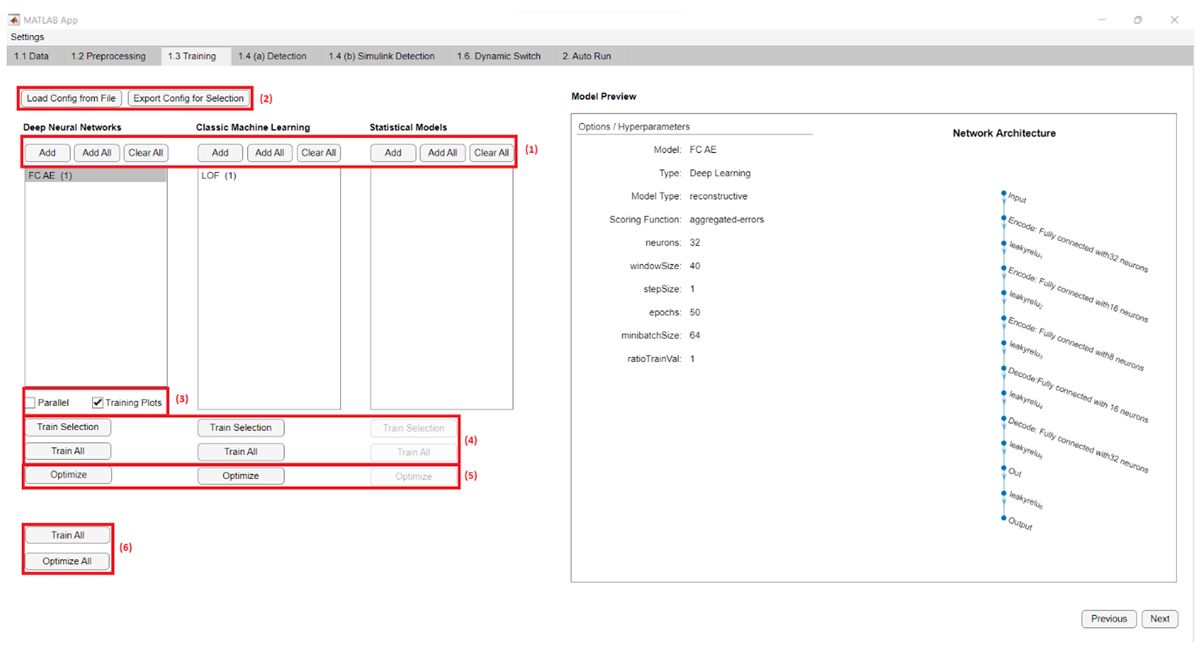

Figure 1. The overall workflow of the TSAD platform (first image) and the platform’s Training tab (second image), showing options for selecting deep learning networks, classic machine learning models, and statistical models to be trained and evaluated.

Building TSAD in MATLAB has several significant advantages, including seamless integration with Simulink® as well as an extensive framework for designing and implementing AI-based systems. Countless systems that would benefit from more effective anomaly detection have been (and continue to be) designed in Simulink, providing us with many opportunities to test the platform for different use cases and a wide audience of target users. Further, simulations of Simulink and Simscape™ models provide a valuable source of training data when real-world data is unavailable or insufficient. Lastly, Simulink simulations provide an excellent way to verify the most effective anomaly detection models identified with TSAD. Researchers can inject faults into an existing model using the Fault Injection Block (FIBlock) that our team has developed and then incorporate anomaly detection blocks generated by the TSAD software into their models for testing (Figure 2a).

Below we show a case study with a Franka Emika industrial robot (Figure 2b). We first run the robot along randomized trajectories and record its measurements. This time series data is then used as input to TSAD for training and testing. For this data set, TSAD recommends a fully connected autoencoder as the best detection model and generates an anomaly detection block accordingly. We then use MATLAB and Simulink to connect to the Robot Operating System (ROS). With a noise fault injected at the first joint, the control algorithm misbehaves: it skips the put-down step and drops the tube. In comparison, when the anomaly detection block monitors the signal, it can raise a flag and send a message that triggers an emergency shutdown through ROS.

AI-Based Anomaly Detection Basics

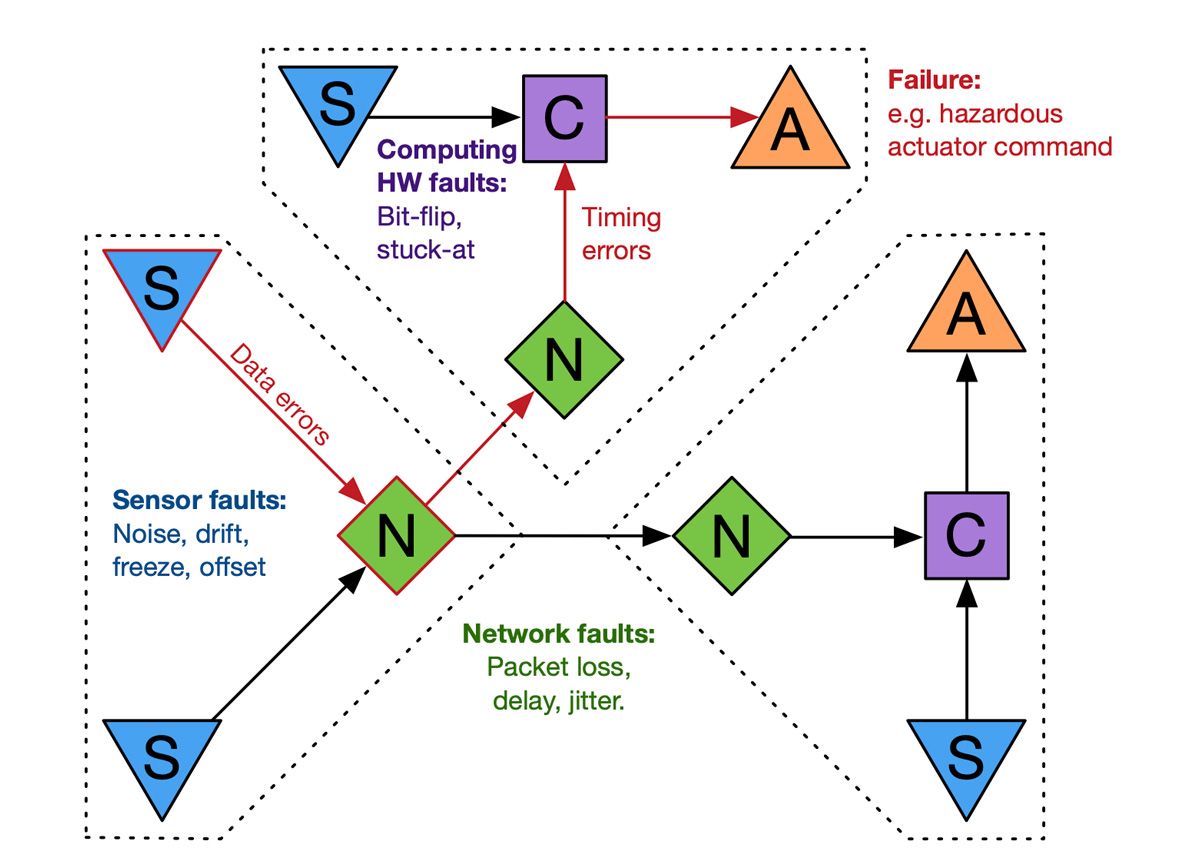

To get a clearer picture of how AI-based anomaly detection works, it helps to start with an understanding of the various types of faults that can occur in a cyber-physical system (Figure 3). Broadly speaking, internal faults include sensor problems such as noise, drift, bias, and freeze; computing hardware issues such as bit flips; and network issues such as packet loss, delay, and jitter. External faults include attacks from malicious users and environmental conditions. All these faults, individually or in combination, can lead to failures and safety issues if not properly identified and handled.
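The four sensor fault types named above can be illustrated with a small sketch. This is not the FIBlock implementation; the function name `inject_fault`, its parameters, and the simple fault formulas are assumptions chosen for illustration only.

```python
import random

def inject_fault(signal, kind, start, end, magnitude=1.0, seed=0):
    """Return a copy of `signal` with a sensor fault applied on [start, end).

    Hypothetical helper for illustration; the fault formulas are simple
    textbook-style models, not those of any specific tool.
    """
    rng = random.Random(seed)
    faulty = list(signal)
    for i in range(start, min(end, len(signal))):
        if kind == "noise":      # additive zero-mean Gaussian noise
            faulty[i] = signal[i] + rng.gauss(0.0, magnitude)
        elif kind == "bias":     # constant offset
            faulty[i] = signal[i] + magnitude
        elif kind == "drift":    # offset that grows linearly over time
            faulty[i] = signal[i] + magnitude * (i - start + 1)
        elif kind == "freeze":   # sensor stuck at the last healthy value
            faulty[i] = signal[start - 1] if start > 0 else signal[0]
        else:
            raise ValueError(f"unknown fault kind: {kind}")
    return faulty
```

Applying each fault kind to the same fault-free trace yields labeled examples of how the faults distort a signal, which is the kind of data an anomaly detector is later trained and tested on.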

Within the context of AI, faults are detected using either classic machine learning—with k-means clustering, isolation forest, and local outlier factor algorithms, for example—or deep learning networks.
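To give a feel for the classic machine learning side, here is a minimal sketch of a distance-based detector. It uses a k-nearest-neighbor distance score, which is a simpler relative of local outlier factor and not one of the algorithms listed above. The function names and the threshold rule (a multiple of the largest score seen on fault-free data) are assumptions made for this example.

```python
import math

def knn_score(point, reference, k=3):
    """Mean Euclidean distance from `point` to its k nearest reference points."""
    dists = sorted(math.dist(point, r) for r in reference)
    return sum(dists[:k]) / k

def fit_threshold(train, k=3, factor=3.0):
    """Threshold = factor times the largest leave-one-out score on fault-free data."""
    scores = []
    for i, p in enumerate(train):
        others = train[:i] + train[i + 1:]   # exclude the point itself
        scores.append(knn_score(p, others, k))
    return factor * max(scores)

def detect(points, train, threshold, k=3):
    """Flag each point whose k-NN score exceeds the threshold."""
    return [knn_score(p, train, k) > threshold for p in points]
```

A point that lies close to the fault-free training data receives a low score, while a point far from all training data receives a high score and is flagged.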

Finding a suitable anomaly detector for a given system is complicated by the wide variety of approaches and methods available. The task is further complicated by the need to evaluate multiple deep learning network architectures and hyperparameter values, as well as by the potential utility of combining multiple detectors into an ensemble or dynamically switching between individual detectors based on the system’s current mode of operation. In developing the TSAD platform, our goal was to provide an automated and self-adapting approach to addressing all these challenges.
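One simple way to combine several detectors into an ensemble is majority voting over their per-sample flags. The sketch below assumes each detector has already produced a list of boolean flags of equal length; the function name `majority_vote` is hypothetical, and other combination rules (weighted votes, score averaging) are equally plausible.

```python
def majority_vote(detector_flags):
    """Combine per-detector boolean flags into one flag per time step.

    detector_flags: list of equal-length lists, one list per detector.
    A time step is marked anomalous when more than half of the detectors flag it.
    """
    n_detectors = len(detector_flags)
    return [sum(step) * 2 > n_detectors for step in zip(*detector_flags)]
```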

Generating an Anomaly Detector with the TSAD Platform

The process of creating an anomaly detector begins with collecting data. This is true whether you have already chosen a particular statistical, machine learning, or deep learning method, or plan to use the TSAD platform to explore and assess a variety of methods. In some cases, it is possible to use real-world data gathered from a system in operation. By definition, however, anomalies are rare, and it can be difficult to capture them in sufficient numbers and variety to support effective model training. Model-based simulations, such as those conducted with MATLAB and Simulink, are a useful alternative, enabling you to generate large amounts of training data with and without anomalies. The FIBlock provides a convenient way to perform fault injection experiments with a variety of fault types, such as sensor, computing hardware, and network faults (Figure 4). The FIBlock also allows flexible tuning of fault parameters. Fault events and their exposure duration can be modeled with different parametric stochastic methods.
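As an illustration of stochastic fault timing, the sketch below draws fault onsets from a per-step Bernoulli trial and fault durations from an exponential distribution. Both distribution choices, along with the names `sample_fault_windows` and `labels_from_windows`, are assumptions for this example and are not taken from the FIBlock.

```python
import random

def sample_fault_windows(n_steps, p_fault=0.01, mean_duration=20.0, seed=0):
    """Sample non-overlapping fault windows as (start, end) pairs, end exclusive.

    At each fault-free step a fault starts with probability `p_fault`;
    its duration in steps is exponentially distributed with the given mean.
    """
    rng = random.Random(seed)
    windows = []
    t = 0
    while t < n_steps:
        if rng.random() < p_fault:
            duration = max(1, round(rng.expovariate(1.0 / mean_duration)))
            end = min(t + duration, n_steps)
            windows.append((t, end))
            t = end          # resume sampling after the fault ends
        else:
            t += 1
    return windows

def labels_from_windows(n_steps, windows):
    """Build a 0/1 ground-truth label per time step from the fault windows."""
    labels = [0] * n_steps
    for start, end in windows:
        for i in range(start, end):
            labels[i] = 1
    return labels
```

The resulting labels can serve as ground truth when evaluating a detector on simulated data, since the simulation knows exactly when each fault was active.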

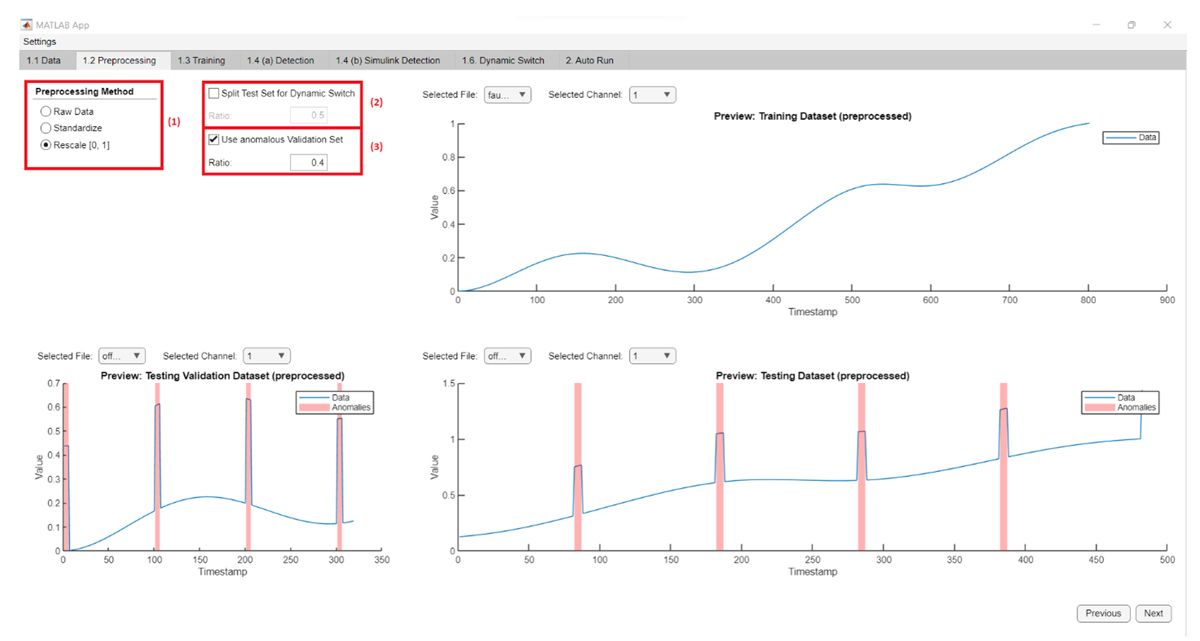

Once you have assembled a data set for training, you can begin using the TSAD platform. The platform’s user interface, created with App Designer in MATLAB, provides step-by-step guidance throughout the process via a series of panels. On the first panel (1.1 Data), you load data into TSAD from a folder containing one or more CSV files. On the second (1.2 Preprocessing), you preprocess the data to prepare it for use in training, for example, by standardizing it or rescaling it (Figure 5).
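The two preprocessing options mentioned above, standardizing and rescaling, can be sketched in a few lines. This example parses CSV text with a header row rather than reading a folder of files, and the function names are hypothetical; it illustrates the transformations, not the TSAD code.

```python
import csv
import io
import statistics

def load_csv_columns(text):
    """Parse CSV text with a header row into {column_name: [float, ...]}."""
    reader = csv.DictReader(io.StringIO(text))
    columns = {name: [] for name in reader.fieldnames}
    for row in reader:
        for name, value in row.items():
            columns[name].append(float(value))
    return columns

def standardize(values):
    """Shift and scale to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]   # constant channel: nothing to scale
    return [(v - mean) / std for v in values]

def rescale(values, lo=0.0, hi=1.0):
    """Map values linearly onto the interval [lo, hi] (min-max scaling)."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        return [lo for _ in values]
    return [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]
```

In practice, the mean, standard deviation, minimum, and maximum would typically be computed on the training data and then reused unchanged on test data, so that both are transformed consistently.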

The next step is training (1.3 Training). Here you can select the deep learning, machine learning, and statistical methods to use, and then train them individually or all at once. With Parallel Computing Toolbox™, you have the option to run the training in parallel across multiple computing cores. On this panel, you can also initiate hyperparameter optimization using Optimization Toolbox™ to improve model performance (Figure 6).

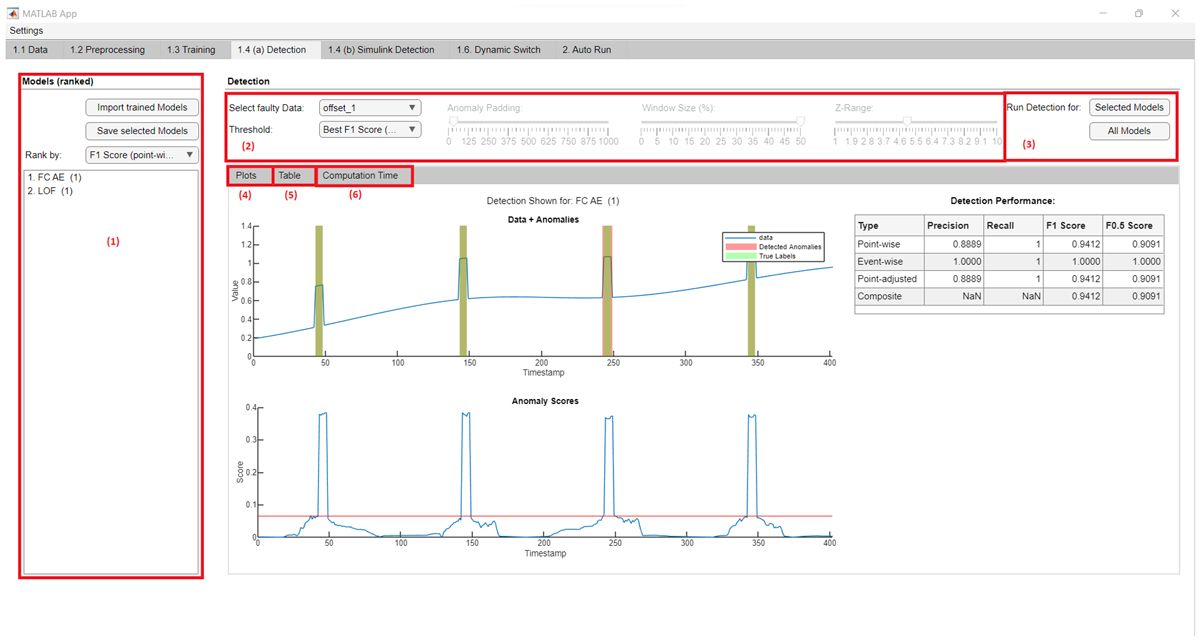

Once the models are trained, you assess their performance on the Detection panel (1.4 (a) Detection). Each trained model is used to detect anomalies in the provided data set. The models are then ranked by a performance metric that you select, such as precision, recall, or F1 score (Figure 7). The panel also includes plots for visualizing performance as well as computation time metrics for each model. In some use cases, a faster but slightly less accurate model may be preferable to a slower but more accurate one. This is the case when detection must be completed quickly to prevent a cyber-physical system from continuing with a dangerous or potentially damaging action based on a faulty input.
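For reference, precision, recall, and F1 score follow from the counts of true positives, false positives, and false negatives. The sketch below computes them per sample and ranks a set of models by a chosen metric; the function names and the dictionary-based interface are assumptions for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives, and false negatives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    return tp, fp, fn

def scores(y_true, y_pred):
    """Return precision, recall, and F1; each is 0.0 when undefined."""
    tp, fp, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

def rank_models(y_true, predictions, metric="f1"):
    """predictions: {model_name: y_pred}. Returns model names sorted best-first."""
    results = {name: scores(y_true, y_pred) for name, y_pred in predictions.items()}
    return sorted(results, key=lambda name: results[name][metric], reverse=True)
```

A model that flags everything achieves perfect recall but poor precision, while an overly cautious model does the opposite; F1 balances the two, which is why the choice of ranking metric matters.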

The remaining steps in the TSAD workflow can be used to run a chosen detector in a Simulink simulation (1.4 (b) Simulink Detection) and to configure the platform’s dynamic switch mechanism (1.6 Dynamic Switch). This mechanism is particularly useful for cyber-physical systems with distinct modes of operation that require diverse anomaly detection approaches. An electric autonomous vehicle, for example, may need to switch dynamically between anomaly detection models trained for highway driving and city driving or between active use and charging while parked.
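The idea behind dynamic switching can be sketched as a lookup from the current operating mode to the detector trained for that mode. The class below is a hypothetical illustration, not the TSAD mechanism: the mode names follow the vehicle example above, and the simple threshold detectors and their limits are placeholders for trained models.

```python
class DynamicSwitch:
    """Route each sample to the detector registered for the current mode."""

    def __init__(self, detectors, default_mode):
        # detectors: {mode_name: callable(sample) -> bool}
        if default_mode not in detectors:
            raise KeyError(default_mode)
        self.detectors = detectors
        self.default_mode = default_mode

    def detect(self, mode, sample):
        """Use the detector for `mode`, falling back to the default detector."""
        detector = self.detectors.get(mode, self.detectors[self.default_mode])
        return detector(sample)

def threshold_detector(limit):
    """Placeholder detector: flags samples whose magnitude exceeds `limit`."""
    return lambda sample: abs(sample) > limit

# Illustrative limits only; real detectors would be trained per mode.
switch = DynamicSwitch(
    {"highway": threshold_detector(5.0), "city": threshold_detector(2.0)},
    default_mode="city",
)
```

With this setup, the same sample value can be normal in one mode and anomalous in another, which is the motivation for keeping a separate detector per mode.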

Industry Collaboration and Future Enhancements

Our team is continuing to improve the TSAD platform and extend our work in the practical application of anomaly detection. One area we are evaluating is the deployment of anomaly detectors to real-time hardware. One system that currently uses our anomaly detectors is SafeLegs, a lower limb assistive exoskeleton developed by colleagues from the Karlsruhe Institute of Technology (Figure 8). At present, we run the anomaly detection in parallel on a standard laptop. Going forward, we are exploring the use of Simulink Real-Time™ and Speedgoat® target hardware to enable real-time execution of the anomaly detectors we generate with the TSAD platform.

Figure 8. Anomaly detectors working in real time on a lower limb assistive exoskeleton.

As part of our ongoing work, we are also collaborating with a number of industry organizations. For example, we are working with engineers who are using the TSAD platform to run experiments in automotive testing and with partners who are applying it to fault diagnosis in electric vehicle powertrains. We are also a research partner in the SofD, working on a project for anomaly detection in CAN bus communication that is part of a research program sponsored by the German Federal Ministry for Economic Affairs and Climate Action to pave the way for software-defined vehicles.

The University of Stuttgart is among the more than 2,000 universities worldwide that provide campus-wide access to MATLAB and Simulink. With a Campus-Wide License, researchers, faculty, and students have access to a common configuration of products, at the latest release level, for use anywhere: in the classroom, at home, in the lab, or in the field.

Published 2023