loss

Loss of k-nearest neighbor classifier

Description

L = loss(mdl,Tbl,ResponseVarName) returns a scalar representing how well mdl classifies the data in Tbl when Tbl.ResponseVarName contains the true classifications. If Tbl contains the response variable used to train mdl, then you do not need to specify ResponseVarName.

When computing the loss, the loss function normalizes the class probabilities in Tbl.ResponseVarName to the class probabilities used for training, which are stored in the Prior property of mdl.

The meaning of the classification loss (L) depends on the loss function and weighting scheme, but, in general, better classifiers yield smaller classification loss values. For more details, see Classification Loss.

L = loss(mdl,Tbl,Y) returns a scalar representing how well mdl classifies the data in Tbl when Y contains the true classifications.

When computing the loss, the loss function normalizes the class probabilities in Y to the class probabilities used for training, which are stored in the Prior property of mdl.

L = loss(mdl,X,Y) returns a scalar representing how well mdl classifies the data in X when Y contains the true classifications.

When computing the loss, the loss function normalizes the class probabilities in Y to the class probabilities used for training, which are stored in the Prior property of mdl.

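L = loss(___,Name,Value) returns the loss with additional options specified by one or more name-value arguments, using any of the input argument combinations in the previous syntaxes. For example, you can specify the loss function and the observation weights.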
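The following sketch shows how these syntaxes relate by calling loss with both table and matrix predictor data on the Fisher iris data. It assumes the Statistics and Machine Learning Toolbox is installed. The table variable names SL, SW, PL, PW, and Species are hypothetical choices; the data set does not define them.

```matlab
% Sketch: calling loss with table and matrix predictor data.
% Assumes the Statistics and Machine Learning Toolbox (fitcknn, loss).
load fisheriris                 % loads meas (150-by-4) and species (150-by-1)

% Hypothetical table layout: four predictors plus the response variable
Tbl = array2table(meas,'VariableNames',{'SL','SW','PL','PW'});
Tbl.Species = species;

mdlTbl = fitcknn(Tbl,'Species','NumNeighbors',5);    % trained on a table
mdlMat = fitcknn(meas,species,'NumNeighbors',5);     % trained on a matrix

L1 = loss(mdlTbl,Tbl,'Species');       % loss(mdl,Tbl,ResponseVarName)
L3 = loss(mdlTbl,Tbl(:,1:4),species);  % loss(mdl,Tbl,Y), predictors only
L4 = loss(mdlMat,meas,species);        % loss(mdl,X,Y)

% loss(___,Name,Value): choose a different built-in loss function
L5 = loss(mdlMat,meas,species,'LossFun','classiferror');
```

A model trained on a table must also be evaluated on a table, which is why mdlTbl and mdlMat are kept separate.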

Note

If the predictor data in X or Tbl contains any missing values and LossFun is not set to "classifcost", "classiferror", or "mincost", the loss function can return NaN. For more details, see the Version History entry "loss can return NaN for predictor data with missing values."

Examples

Create a k-nearest neighbor classifier for the Fisher iris data, where k = 5.

Load the Fisher iris data set.

load fisheriris

Create a classifier for five nearest neighbors.

mdl = fitcknn(meas,species,'NumNeighbors',5);

Examine the loss of the classifier for a mean observation classified as 'versicolor'.

X = mean(meas);

Y = {'versicolor'};

L = loss(mdl,X,Y)

L = 0

All five nearest neighbors of the mean observation belong to the class 'versicolor'.
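A single averaged observation says little about how well the classifier generalizes. A common follow-up is to evaluate loss on observations the model did not see during training. The sketch below assumes the Statistics and Machine Learning Toolbox and a stratified 30% hold-out split. The split fraction and random seed are arbitrary choices.

```matlab
% Sketch: estimate the loss on held-out observations instead of one point.
% Assumes the Statistics and Machine Learning Toolbox (cvpartition, fitcknn, loss).
load fisheriris
rng(0)                                        % make the random partition reproducible
c = cvpartition(species,'HoldOut',0.3);       % stratified 70/30 split

mdl = fitcknn(meas(training(c),:),species(training(c)),'NumNeighbors',5);
Ltest = loss(mdl,meas(test(c),:),species(test(c)));   % loss on unseen data
```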

Input Arguments

mdl: k-nearest neighbor classifier model, specified as a ClassificationKNN object.

Tbl: Sample data, specified as a table. Each row of Tbl corresponds to one observation, and each column corresponds to one predictor variable. Optionally, Tbl can contain additional columns for the response variable and observation weights. Multicolumn variables and cell arrays other than cell arrays of character vectors are not allowed.

If Tbl contains the response variable used to train mdl, then you do not need to specify ResponseVarName or Y.

If you train mdl using sample data contained in a table, then the input data for loss must also be in a table.

Data Types: table

ResponseVarName: Response variable name, specified as the name of a variable in Tbl. If Tbl contains the response variable used to train mdl, then you do not need to specify ResponseVarName.

You must specify ResponseVarName as a character vector or string scalar. For example, if the response variable is stored as Tbl.response, then specify it as 'response'. Otherwise, the software treats all columns of Tbl, including Tbl.response, as predictors.

The response variable must be a categorical, character, or string array, logical or numeric vector, or cell array of character vectors. If the response variable is a character array, then each element must correspond to one row of the array.

Data Types: char | string

X: Predictor data, specified as a numeric matrix. Each row of X represents one observation, and each column represents one variable.

Data Types: single | double

Y: Class labels, specified as a categorical, character, or string array, logical or numeric vector, or cell array of character vectors. Each row of Y represents the classification of the corresponding row of X or Tbl.

Data Types: categorical | char | string | logical | single | double | cell

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Before R2021a, use commas to separate each name and value, and enclose Name in quotes.

Example: loss(mdl,Tbl,'response','LossFun','exponential','Weights','w') returns the weighted exponential loss of mdl classifying the data in Tbl. Here, Tbl.response is the response variable, and Tbl.w is the weight variable.
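The example above can be reproduced with a small hypothetical table. This sketch assumes the Statistics and Machine Learning Toolbox, the table variable names x1 to x4, response, and w, and made-up weights. Because loss rescales the weights within each class to sum to that class's prior, the weights below vary within each class. Multiplying an entire class by a constant would leave the loss unchanged.

```matlab
% Sketch reproducing the Name-Value example with a hypothetical table.
load fisheriris
Tbl = array2table(meas,'VariableNames',{'x1','x2','x3','x4'});  % hypothetical names
Tbl.response = species;                       % response variable
Tbl.w = linspace(1,2,height(Tbl))';           % hypothetical weights, vary within classes

% Train on predictors and response only, so that w is not used as a predictor
mdl = fitcknn(Tbl(:,1:5),'response','NumNeighbors',5);

% Weighted exponential loss, comma-separated syntax (works in all releases)
L1 = loss(mdl,Tbl,'response','LossFun','exponential','Weights','w');
% The same call with Name=Value syntax (R2021a and later)
L2 = loss(mdl,Tbl,'response',LossFun="exponential",Weights="w");
```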

LossFun: Loss function, specified as a built-in loss function name or a function handle.

The following table lists the available loss functions.

| Value | Description |
|---|---|
| "binodeviance" | Binomial deviance |
| "classifcost" | Observed misclassification cost |
| "classiferror" | Misclassified rate in decimal |
| "exponential" | Exponential loss |
| "hinge" | Hinge loss |
| "logit" | Logistic loss |
| "mincost" | Minimal expected misclassification cost (for classification scores that are posterior probabilities) |
| "quadratic" | Quadratic loss |

"mincost" is appropriate for classification scores that are posterior probabilities. By default, k-nearest neighbor models return posterior probabilities as classification scores (see predict).

You can specify a function handle for a custom loss function using @ (for example, @lossfun). Let n be the number of observations in X or Tbl, and let K be the number of distinct classes (numel(mdl.ClassNames)). Your custom loss function must have this form:

function lossvalue = lossfun(C,S,W,Cost)

- C is an n-by-K logical matrix with rows indicating the class to which the corresponding observation belongs. The column order corresponds to the class order in mdl.ClassNames. Construct C by setting C(p,q) = 1 if observation p is in class q, for each row. Set all other elements of row p to 0.
- S is an n-by-K numeric matrix of classification scores, similar to the output of predict. The column order corresponds to the class order in mdl.ClassNames.
- W is an n-by-1 numeric vector of observation weights. If you pass W, the software normalizes the weights to sum to 1.
- Cost is a K-by-K numeric matrix of misclassification costs. For example, Cost = ones(K) - eye(K) specifies a cost of 0 for correct classification and 1 for misclassification.

The output argument lossvalue is a scalar.
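Here is a minimal sketch of the custom-loss interface. The function handle below is a hypothetical loss, not one of the built-in options. It recovers the true-class score of each observation as sum(C.*S,2) and returns the weighted average of 1 minus that score. The sketch assumes the Statistics and Machine Learning Toolbox.

```matlab
% Sketch of a custom loss with the required signature lossfun(C,S,W,Cost).
% Hypothetical loss: weighted average of (1 - true-class score).
load fisheriris
mdl = fitcknn(meas,species,'NumNeighbors',5);

% C: n-by-K logical, S: n-by-K scores, W: n-by-1 weights, Cost: K-by-K (unused here)
expectedError = @(C,S,W,Cost) sum(W .* (1 - sum(C.*S,2))) / sum(W);

L = loss(mdl,meas,species,'LossFun',expectedError);
```

Because k-nearest neighbor scores are posterior probabilities, each term 1 - sum(C(j,:).*S(j,:)) lies between 0 and 1, so this loss does too.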

For more details on loss functions, see Classification Loss.

Data Types: char | string | function_handle

Weights: Observation weights, specified as a numeric vector or the name of a variable in Tbl.

If you specify Weights as a numeric vector, then the length of Weights must equal the number of rows in X or Tbl.

If you specify Weights as the name of a variable in Tbl, the name must be a character vector or string scalar. For example, if the weights are stored as Tbl.w, then specify Weights as 'w'. Otherwise, the software treats all columns of Tbl, including Tbl.w, as predictors.

loss normalizes the weights so that observation weights in each class sum to the prior probability of that class. When you supply Weights, loss computes the weighted classification loss.

Example: 'Weights','w'

Data Types: single | double | char | string

Algorithms

Classification loss functions measure the predictive inaccuracy of classification models. When you compare the same type of loss among many models, a lower loss indicates a better predictive model.

Consider the following scenario.

L is the weighted average classification loss.

n is the sample size.

For binary classification:

$y_j$ is the observed class label. The software codes it as $-1$ or $1$, indicating the negative or positive class (or the first or second class in the ClassNames property), respectively.

$f(X_j)$ is the positive-class classification score for observation (row) j of the predictor data X.

$m_j = y_j f(X_j)$ is the classification score for classifying observation j into the class corresponding to $y_j$. Positive values of $m_j$ indicate correct classification and do not contribute much to the average loss. Negative values of $m_j$ indicate incorrect classification and contribute significantly to the average loss.

For algorithms that support multiclass classification (that is, K ≥ 3):

$y_j^*$ is a vector of K - 1 zeros, with 1 in the position corresponding to the true, observed class $y_j$. For example, if the true class of the second observation is the third class and K = 4, then $y_2^* = [0\ 0\ 1\ 0]'$. The order of the classes corresponds to the order in the ClassNames property of the input model.

$f(X_j)$ is the length K vector of class scores for observation j of the predictor data X. The order of the scores corresponds to the order of the classes in the ClassNames property of the input model.

$m_j = y_j^{*\prime} f(X_j)$. Therefore, $m_j$ is the scalar classification score that the model predicts for the true, observed class.

The weight for observation j is $w_j$. The software normalizes the observation weights so that they sum to the corresponding prior class probability stored in the Prior property. Because the prior probabilities sum to 1, it follows that

$$\sum_{j=1}^{n} w_j = 1.$$
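The multiclass definitions of $m_j$ and $w_j$ can be traced by hand. This base MATLAB sketch uses hypothetical scores, labels, priors, and raw weights. It builds each $y_j^*$ as a one-hot row and takes $m_j$ as the true-class score. It then rescales the raw weights so that each class sums to its prior, which makes the total equal 1.

```matlab
% Sketch (base MATLAB, hypothetical numbers): multiclass m_j and normalized w_j.
S = [0.8 0.2 0.0;     % row j is f(X_j)': scores for K = 3 classes
     0.1 0.3 0.6;
     0.2 0.4 0.4;
     0.0 0.2 0.8];
y = [1; 3; 2; 3];     % true class index of each observation
[n,K] = size(S);

Ystar = zeros(n,K);                      % row j is y_j*' (one-hot vector)
Ystar(sub2ind([n K],(1:n)',y)) = 1;
m = sum(Ystar .* S, 2);                  % m_j = y_j*' f(X_j), the true-class score

prior = [0.25 0.25 0.5];                 % hypothetical class priors (sum to 1)
wRaw  = [1; 2; 1; 3];                    % hypothetical raw observation weights
w = zeros(n,1);
for k = 1:K
    inK = (y == k);
    if any(inK)
        % weights of class k are rescaled to sum to prior(k)
        w(inK) = prior(k) * wRaw(inK) / sum(wRaw(inK));
    end
end
```

With these values, m is [0.8; 0.6; 0.4; 0.8] and w is [0.25; 0.2; 0.25; 0.3], which sums to 1.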

Given this scenario, the following table describes the supported loss functions that you can specify by using the LossFun name-value argument.

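| Loss Function | Value of LossFun | Equation |
|---|---|---|
| Binomial deviance | "binodeviance" | $L = \sum_{j=1}^{n} w_j \log\{1 + \exp[-2 m_j]\}$ |
| Observed misclassification cost | "classifcost" | $L = \sum_{j=1}^{n} w_j c_{y_j \hat{y}_j}$, where $\hat{y}_j$ is the class label corresponding to the class with the maximal score, and $c_{y_j \hat{y}_j}$ is the user-specified cost of classifying an observation into class $\hat{y}_j$ when its true class is $y_j$. |
| Misclassified rate in decimal | "classiferror" | $L = \sum_{j=1}^{n} w_j I\{\hat{y}_j \neq y_j\}$, where $I\{\cdot\}$ is the indicator function. |
| Cross-entropy loss | "crossentropy" | The weighted cross-entropy loss is $L = -\sum_{j=1}^{n} \frac{\tilde{w}_j \log(m_j)}{K n}$, where the weights $\tilde{w}_j$ are normalized to sum to n instead of 1. |
| Exponential loss | "exponential" | $L = \sum_{j=1}^{n} w_j \exp(-m_j)$ |
| Hinge loss | "hinge" | $L = \sum_{j=1}^{n} w_j \max\{0, 1 - m_j\}$ |
| Logistic loss | "logit" | $L = \sum_{j=1}^{n} w_j \log(1 + \exp(-m_j))$ |
| Minimal expected misclassification cost | "mincost" | The software computes the weighted minimal expected classification cost using this procedure for observations j = 1,...,n. (1) Estimate the expected misclassification cost of classifying observation $X_j$ into class k: $\gamma_{jk} = (f(X_j)' C)_k$, where $f(X_j)$ is the column vector of class posterior probabilities and C is the cost matrix stored in the Cost property of the model. (2) For observation j, predict the class label corresponding to the minimal expected misclassification cost: $\hat{y}_j = \arg\min_{k=1,\ldots,K} \gamma_{jk}$. (3) Using C, identify the cost incurred ($c_j$) for making the prediction. The weighted average of the minimal expected misclassification cost loss is $L = \sum_{j=1}^{n} w_j c_j$. |
| Quadratic loss | "quadratic" | $L = \sum_{j=1}^{n} w_j (1 - m_j)^2$ |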
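To make the table concrete, this base MATLAB sketch evaluates the formulas that depend only on $m_j$ and $w_j$. It uses hypothetical binary scores and equal normalized weights that sum to 1.

```matlab
% Sketch (base MATLAB): evaluate the m_j-based loss formulas for
% hypothetical scores m_j and normalized weights w_j that sum to 1.
m = [0.9; 0.2; -0.5; 1.5];        % m_3 < 0 represents a misclassification
w = [0.25; 0.25; 0.25; 0.25];

Lbinodeviance = sum(w .* log(1 + exp(-2*m)));
Lexponential  = sum(w .* exp(-m));
Lhinge        = sum(w .* max(0, 1 - m));
Llogit        = sum(w .* log(1 + exp(-m)));
Lquadratic    = sum(w .* (1 - m).^2);
```

With these values, Lhinge is 0.6 and Lquadratic is 0.7875. In every one of these losses, the negative score $m_3 = -0.5$ contributes the largest term, which matches the description of negative $m_j$ in the scenario.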

If you use the default cost matrix (whose element value is 0 for correct classification and 1 for incorrect classification), then the loss values for "classifcost", "classiferror", and "mincost" are identical. For a model with a nondefault cost matrix, the "classifcost" loss is equivalent to the "mincost" loss most of the time. These losses can be different if prediction into the class with maximal posterior probability is different from prediction into the class with minimal expected cost. Note that "mincost" is appropriate only if classification scores are posterior probabilities.
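The difference between "classifcost" and "mincost" shows up with two hypothetical observations and a nondefault cost matrix. In this base MATLAB sketch, the first observation has its largest posterior on class 1. However, predicting class 1 when the true class is 2 is expensive, so the minimal-expected-cost rule predicts class 2 instead. All values are made up for illustration.

```matlab
% Sketch (base MATLAB, hypothetical values): "classifcost" and "mincost"
% diverge when the maximal-posterior class is not the minimal-expected-cost class.
S    = [0.6 0.4;      % posterior probabilities f(X_j)' for K = 2 classes
        0.9 0.1];
y    = [2; 1];        % true class indices
w    = [0.5; 0.5];    % normalized observation weights
Cost = [0 1;          % Cost(i,j): cost of predicting class j when the truth is i
        5 0];         % hypothetical: predicting class 1 for a true class 2 costs 5

[~,yhatMax] = max(S,[],2);              % "classifcost": class with maximal score
gamma = S * Cost;                       % gamma(j,k) = (f(X_j)' C)_k, expected cost
[~,yhatMin] = min(gamma,[],2);          % "mincost": class with minimal expected cost

Lclassifcost = sum(w .* Cost(sub2ind(size(Cost),y,yhatMax)));
Lmincost     = sum(w .* Cost(sub2ind(size(Cost),y,yhatMin)));
```

Here Lclassifcost is 2.5 and Lmincost is 0.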

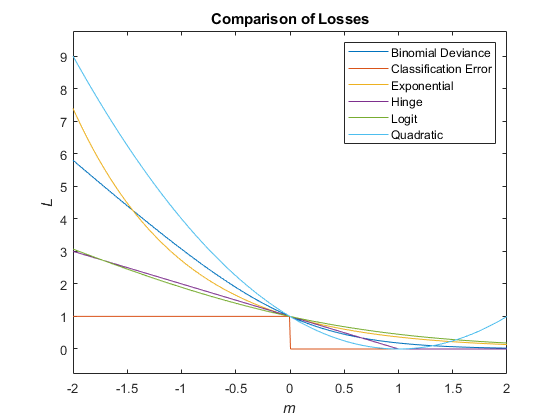

This figure compares the loss functions (except "classifcost", "crossentropy", and "mincost") over the score m for one observation. Some functions are normalized to pass through the point (0,1).

Two costs are associated with KNN classification: the true misclassification cost per class and the expected misclassification cost per observation.

You can set the true misclassification cost per class by using the 'Cost' name-value argument when you run fitcknn. The value Cost(i,j) is the cost of classifying an observation into class j if its true class is i. By default, Cost(i,j) = 1 if i ~= j, and Cost(i,j) = 0 if i = j. In other words, the cost is 0 for correct classification and 1 for incorrect classification.
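This sketch shows the Cost argument by training with a hypothetical nondefault cost matrix. It assumes the Statistics and Machine Learning Toolbox. It also assumes that the class order is the sorted order of the species labels (setosa, versicolor, virginica), which you can confirm from mdl.ClassNames.

```matlab
% Sketch: set a nondefault true misclassification cost when training.
% Assumes the Statistics and Machine Learning Toolbox; the cost value is hypothetical.
load fisheriris                       % classes sort as setosa, versicolor, virginica
K = 3;
Cost = ones(K) - eye(K);              % default: 0 if i == j, 1 otherwise
Cost(2,3) = 5;                        % true versicolor predicted as virginica costs 5

mdl = fitcknn(meas,species,'NumNeighbors',5,'Cost',Cost);
disp(mdl.ClassNames)                  % row and column order of Cost
Lobserved = loss(mdl,meas,species,'LossFun','classifcost');
Lexpected = loss(mdl,meas,species,'LossFun','mincost');
```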

The third output of predict is the expected misclassification cost per observation.

Suppose you have Nobs observations that you want to classify with a trained classifier mdl, and you have K classes. You place the observations into a matrix Xnew with one observation per row. The command

[label,score,cost] = predict(mdl,Xnew)

returns a matrix cost of size Nobs-by-K, among other outputs. Each row of the cost matrix contains the expected (average) cost of classifying the observation into each of the K classes. cost(n,j) is

$$\mathrm{cost}(n,j) = \sum_{i=1}^{K} \hat{P}(i \mid Xnew(n))\, C(j \mid i),$$

where

- K is the number of classes.
- $\hat{P}(i \mid Xnew(n))$ is the posterior probability of class i for observation Xnew(n).
- $C(j \mid i)$ is the true misclassification cost of classifying an observation as j when its true class is i.
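Summed over i, the formula for cost(n,j) amounts to a matrix product of the posterior scores with the cost matrix. This sketch compares the third output of predict with score * mdl.Cost. It assumes the Statistics and Machine Learning Toolbox and uses three arbitrarily chosen rows of meas as the new observations.

```matlab
% Sketch: check that the third output of predict matches
% cost(n,j) = sum_i P(i | Xnew(n)) * C(j | i), that is, score * mdl.Cost.
load fisheriris
mdl = fitcknn(meas,species,'NumNeighbors',5);
Xnew = meas([1 51 101],:);                   % hypothetical new observations
[label,score,cost] = predict(mdl,Xnew);

manualCost = score * mdl.Cost;               % (Nobs-by-K) * (K-by-K)
maxDiff = max(abs(cost(:) - manualCost(:)));
```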

Extended Capabilities

The loss function fully supports tall arrays. For more information, see Tall Arrays.

Usage notes and limitations:

loss does not support GPU arrays for ClassificationKNN models with the following specifications:

- The NSMethod property is specified as "kdtree".
- The Distance property is specified as "fasteuclidean", "fastseuclidean", or a function handle.
- The IncludeTies property is specified as true.

For more information, see Run MATLAB Functions on a GPU (Parallel Computing Toolbox).

Version History

Introduced in R2012a

The loss function no longer omits an observation with a NaN score when computing the weighted average classification loss. Therefore, loss can now return NaN when the predictor data X or the predictor variables in Tbl contain any missing values, and the name-value argument LossFun is not specified as "classifcost", "classiferror", or "mincost". In most cases, if the test set observations do not contain missing predictors, the loss function does not return NaN.

This change improves the automatic selection of a classification model when you use fitcauto. Before this change, the software might select a model (expected to best classify new data) with few non-NaN predictors.

If loss in your code returns NaN, you can update your code to avoid this result by doing one of the following:

- Remove or replace the missing values by using rmmissing or fillmissing, respectively.
- Specify the name-value argument LossFun as "classifcost", "classiferror", or "mincost".
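The two workarounds can be sketched on the Fisher iris data with one artificially missing value. This assumes the Statistics and Machine Learning Toolbox. The position of the injected NaN is arbitrary.

```matlab
% Sketch of both workarounds. Assumes the Statistics and Machine Learning
% Toolbox; the injected missing value is hypothetical.
load fisheriris
mdl = fitcknn(meas,species,'NumNeighbors',5);

Xtest = meas;
Xtest(1,1) = NaN;                                  % one missing predictor value

% Workaround 1: remove observations that contain missing values
[Xclean,removed] = rmmissing(Xtest);               % removed is a logical row mask
Lquad = loss(mdl,Xclean,species(~removed),'LossFun','quadratic');

% Workaround 2: use a loss function that does not return NaN in this case
Lerr = loss(mdl,Xtest,species,'LossFun','classiferror');
```

Removing rows also changes which observations the loss averages over. If that matters, fillmissing is the alternative the list mentions.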

The following table shows the classification models for which the loss object function might return NaN. For more details, see the Compatibility Considerations for each loss function.

| Model Type | Full or Compact Model Object | loss Object Function |
|---|---|---|
| Discriminant analysis classification model | ClassificationDiscriminant, CompactClassificationDiscriminant | loss |
| Ensemble of learners for classification | ClassificationEnsemble, CompactClassificationEnsemble | loss |
| Gaussian kernel classification model | ClassificationKernel | loss |
| k-nearest neighbor classification model | ClassificationKNN | loss |
| Linear classification model | ClassificationLinear | loss |
| Neural network classification model | ClassificationNeuralNetwork, CompactClassificationNeuralNetwork | loss |
| Support vector machine (SVM) classification model | | loss |
