fitensemble

Fit ensemble of learners for classification and regression

Syntax

Description

fitensemble can boost or bag decision tree learners or discriminant analysis classifiers. The function can also train random subspace ensembles of KNN or discriminant analysis classifiers.
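The following sketch trains a random subspace ensemble of KNN classifiers, one of the ensemble types described above. It assumes the Statistics and Machine Learning Toolbox and its bundled fisheriris data set. The number of learners (30) is an illustrative value, not a recommendation.

```matlab
load fisheriris                        % meas: 150x4 predictors, species: 150x1 cellstr labels
rng(1)                                 % predictor subsets are drawn at random; fix the seed
Mdl = fitensemble(meas,species,'Subspace',30,'KNN');   % random subspace ensemble of KNN learners
resubErr = resubLoss(Mdl);             % misclassification rate on the training data
```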

For simpler interfaces that fit classification and regression ensembles, use fitcensemble and fitrensemble instead, respectively. fitcensemble and fitrensemble also provide options for Bayesian optimization.
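As a rough comparison of the two interfaces, the sketch below fits a boosted ensemble with fitensemble and then with fitcensemble. It assumes the bundled ionosphere data set. The weak learner is passed explicitly as a stump template because the two functions can use different default tree depths for boosting; other defaults can also differ, so check the fitcensemble reference page before assuming identical results.

```matlab
load ionosphere                                % X: 351x34 predictors, Y: 351x1 cellstr ('b'/'g')
t = templateTree('MaxNumSplits',1);            % explicit stumps so both calls use the same weak learner

% Legacy interface: positional Method, NLearn, and Learners arguments
Mdl1 = fitensemble(X,Y,'AdaBoostM1',100,t);

% Classification-specific interface: the same settings as name-value arguments
Mdl2 = fitcensemble(X,Y,'Method','AdaBoostM1', ...
    'NumLearningCycles',100,'Learners',t);
```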

Mdl = fitensemble(Tbl,ResponseVarName,Method,NLearn,Learners) returns a trained ensemble model object, Mdl, that contains the results of fitting an ensemble of NLearn classification or regression learners (Learners) to all variables in the table Tbl. ResponseVarName is the name of the response variable in Tbl. Method is the ensemble-aggregation method.

Mdl = fitensemble(___,Name,Value) uses additional options specified by one or more Name,Value pair arguments and any of the previous syntaxes. For example, you can specify the class order, implement 10-fold cross-validation, or set the learning rate.
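The two syntaxes above can be sketched as follows, assuming the bundled fisheriris data set stored in a table. The variable names, class order, number of learners, and learning rate are illustrative choices, not recommended settings.

```matlab
% Table syntax: the response is a variable in Tbl, named by ResponseVarName
load fisheriris                                   % meas (150x4 double), species (150x1 cellstr)
Tbl = array2table(meas,'VariableNames', ...
    {'SepalLength','SepalWidth','PetalLength','PetalWidth'});
Tbl.Species = species;
Mdl = fitensemble(Tbl,'Species','AdaBoostM2',100,'Tree');   % AdaBoostM2: multiclass boosting

% Name-value pairs: class order, 10-fold cross-validation, and learning rate
rng(1)                                            % fold assignment is random
CVMdl = fitensemble(Tbl,'Species','AdaBoostM2',100,'Tree', ...
    'ClassNames',{'virginica','versicolor','setosa'}, ...
    'KFold',10, ...
    'LearnRate',0.1);
cvErr = kfoldLoss(CVMdl);                         % mean misclassification rate across folds
```

With 'KFold' specified, the returned object is a cross-validated (partitioned) ensemble rather than a single trained ensemble.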

Examples

Input Arguments

Name-Value Arguments

Output Arguments

Tips

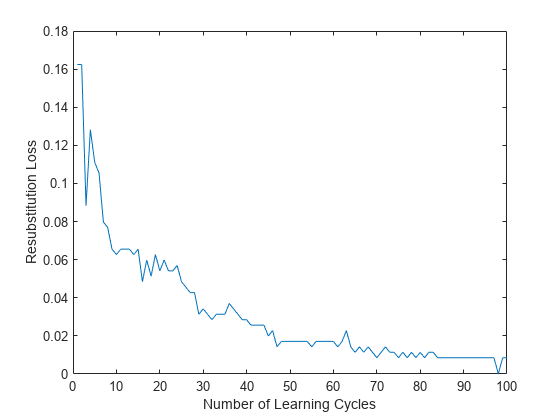

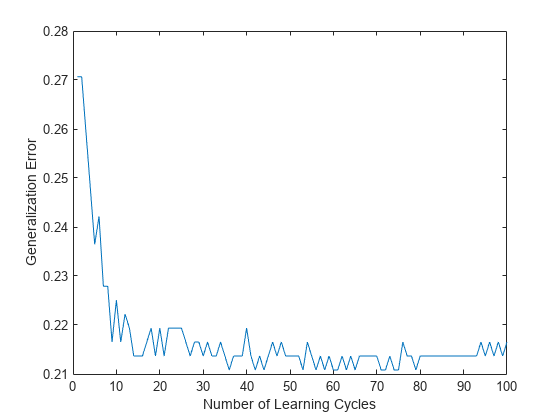

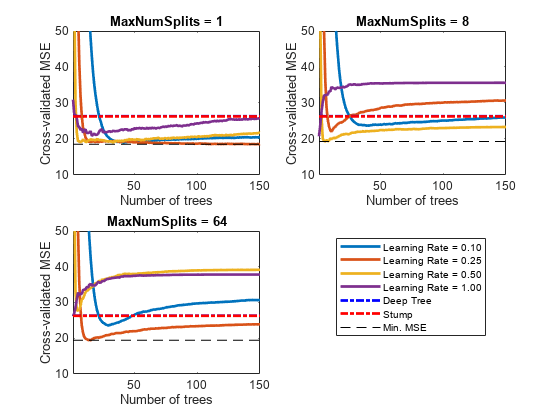

NLearn can vary from a few dozen to a few thousand. Usually, an ensemble with good predictive power requires from a few hundred to a few thousand weak learners. However, you do not have to train an ensemble for that many cycles at once. You can start by growing a few dozen learners, inspect the ensemble performance, and then, if necessary, train more weak learners using the resume method of the classification ensemble (for classification problems) or of the regression ensemble (for regression problems).

Ensemble performance depends on both the ensemble settings and the settings of the weak learners. That is, if you specify weak learners with default parameters, then the ensemble can perform poorly. Therefore, as with the ensemble settings, it is good practice to adjust the parameters of the weak learners using templates, and to choose values that minimize generalization error.
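The workflow described above (start small, inspect performance, then resume) can be sketched as follows. This assumes the bundled ionosphere data set, a stratified 70/30 holdout split, and a tree template with MaxNumSplits set to 5; the depth and learner counts are illustrative values, not tuned ones.

```matlab
load ionosphere                                % X: 351x34 predictors, Y: 351x1 cellstr labels
rng(1)                                         % reproducible partition
cv = cvpartition(Y,'HoldOut',0.3);             % stratified 70/30 holdout split
Xtrain = X(training(cv),:);  Ytrain = Y(training(cv));
Xtest  = X(test(cv),:);      Ytest  = Y(test(cv));

t = templateTree('MaxNumSplits',5);            % weak learner configured through a template
Mdl = fitensemble(Xtrain,Ytrain,'AdaBoostM1',50,t);    % start with a few dozen learners

% Test error of the ensemble after 1, 2, ..., NumTrained learners
errCurve = loss(Mdl,Xtest,Ytest,'Mode','cumulative');

% If the curve is still decreasing, add 50 more learners without retraining from scratch
Mdl = resume(Mdl,50);
errCurve = loss(Mdl,Xtest,Ytest,'Mode','cumulative');
```

Plotting errCurve against the number of learners is one way to judge whether more cycles are worth training.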

If you specify resampling using Resample, then it is good practice to resample the entire data set. That is, use the default setting of 1 for FResample.

In classification problems (that is, Type is 'classification'), when the ensemble-aggregation method (Method) is 'bag':

If the misclassification cost (Cost) is highly imbalanced, then, for in-bag samples, the software oversamples unique observations from the class that has a large penalty.

If the class prior probabilities (Prior) are highly skewed, then the software oversamples unique observations from the class that has a large prior probability.

For smaller sample sizes, these combinations can result in a low relative frequency of out-of-bag observations from the class that has a large penalty or prior probability. Consequently, the estimated out-of-bag error is highly variable and can be difficult to interpret. To avoid large estimated out-of-bag error variances, particularly for small sample sizes, set a more balanced misclassification cost matrix using Cost or a less skewed prior probability vector using Prior.

Because the order of some input and output arguments corresponds to the distinct classes in the training data, it is good practice to specify the class order using the ClassNames name-value pair argument.

To determine the class order quickly, remove all observations from the training data that are unclassified (that is, have a missing label), obtain and display an array of all the distinct classes, and then specify the array for ClassNames. For example, suppose the response variable (Y) is a cell array of labels. This code specifies the class order in the variable classNames.

    Ycat = categorical(Y);
    classNames = categories(Ycat)

categorical assigns <undefined> to unclassified observations, and categories excludes <undefined> from its output. Therefore, if you use this code for cell arrays of labels or similar code for categorical arrays, then you do not have to remove observations with missing labels to obtain a list of the distinct classes.

To specify the class order from the lowest-represented label to the most-represented, quickly determine the class order (as in the previous example), but arrange the classes in the list by frequency before passing the list to ClassNames. Following from the previous example, this code specifies the class order from lowest- to most-represented in classNamesLH.

    Ycat = categorical(Y);
    classNames = categories(Ycat);
    freq = countcats(Ycat);
    [~,idx] = sort(freq);
    classNamesLH = classNames(idx);
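The class-order tips and the out-of-bag caveat for bagging can be combined in one sketch. It assumes the bundled ionosphere data set, whose labels 'b' and 'g' are a cell array like the Y in the examples above. The ensemble keeps the default Prior and Cost rather than a highly skewed setting, and the learner count is illustrative.

```matlab
load ionosphere                     % Y: 351x1 cell array of labels 'b' and 'g'
Ycat = categorical(Y);
classNames = categories(Ycat);      % distinct classes; <undefined> is excluded
freq = countcats(Ycat);             % number of observations per class
[~,idx] = sort(freq);               % ascending frequency
classNamesLH = classNames(idx);     % lowest- to most-represented

rng(1)                              % bootstrap sampling is random
Mdl = fitensemble(X,Y,'Bag',100,'Tree','Type','classification', ...
    'ClassNames',classNamesLH);     % explicit class order; default Prior and Cost
oobErr = oobLoss(Mdl);              % out-of-bag misclassification rate
```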

Algorithms

For details of ensemble-aggregation algorithms, see Ensemble Algorithms.

If you specify Method to be a boosting algorithm and Learners to be decision trees, then the software grows stumps by default. A decision stump is one root node connected to two terminal (leaf) nodes. You can adjust tree depth by specifying the MaxNumSplits, MinLeafSize, and MinParentSize name-value pair arguments using templateTree.

fitensemble generates in-bag samples by oversampling classes with large misclassification costs and undersampling classes with small misclassification costs. Consequently, out-of-bag samples have fewer observations from classes with large misclassification costs and more observations from classes with small misclassification costs. If you train a classification ensemble using a small data set and a highly skewed cost matrix, then the number of out-of-bag observations per class can be low. Therefore, the estimated out-of-bag error can have a large variance and can be difficult to interpret. The same phenomenon can occur for classes with large prior probabilities.

For the RUSBoost ensemble-aggregation method (Method), the name-value pair argument RatioToSmallest specifies the sampling proportion for each class with respect to the lowest-represented class. For example, suppose that there are two classes in the training data: A and B. Class A has 100 observations and class B has 10 observations. Also, suppose that the lowest-represented class has $m$ observations in the training data (here, $m = 10$), and let $s$ denote the value of RatioToSmallest.

If you set 'RatioToSmallest',2, then $s \cdot m = 2 \cdot 10 = 20$. Consequently, fitensemble trains every learner using 20 observations from class A and 20 observations from class B. If you set 'RatioToSmallest',[2 2], then you obtain the same result.

If you set 'RatioToSmallest',[2,1], then $s_1 \cdot m = 2 \cdot 10 = 20$ and $s_2 \cdot m = 1 \cdot 10 = 10$. Consequently, fitensemble trains every learner using 20 observations from class A and 10 observations from class B.
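The RatioToSmallest arithmetic above can be reproduced directly, followed by a RUSBoost call on the same class sizes. The predictor values are synthetic, hypothetical data generated only so the call has something to fit, and the learner count is illustrative.

```matlab
% Worked arithmetic for the two-class example (class A: 100 observations, class B: 10)
n = [100 10];                           % observations per class, in ClassNames order {'A','B'}
m = min(n);                             % size of the lowest-represented class (10)
perClassScalar = 2*m .* ones(size(n));  % 'RatioToSmallest',2 applies to every class: [20 20]
perClassVector = [2 1]*m;               % 'RatioToSmallest',[2 1]: [20 10]

% RUSBoost on hypothetical data with the same class sizes
rng(1)
X = [randn(100,2); randn(10,2) + 2];              % synthetic predictors (hypothetical)
Y = [repmat({'A'},100,1); repmat({'B'},10,1)];    % 100 labels A, 10 labels B
Mdl = fitensemble(X,Y,'RUSBoost',50,'Tree', ...
    'ClassNames',{'A','B'},'RatioToSmallest',[2 1]);
```

The entries of a vector RatioToSmallest follow the order in ClassNames, which is one more reason to set ClassNames explicitly.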

For ensembles of decision trees, and for dual-core systems and above, fitensemble parallelizes training using Intel® Threading Building Blocks (TBB). For details on Intel TBB, see https://www.intel.com/content/www/us/en/developer/tools/oneapi/onetbb.html.


Extended Capabilities

Version History

Introduced in R2011a