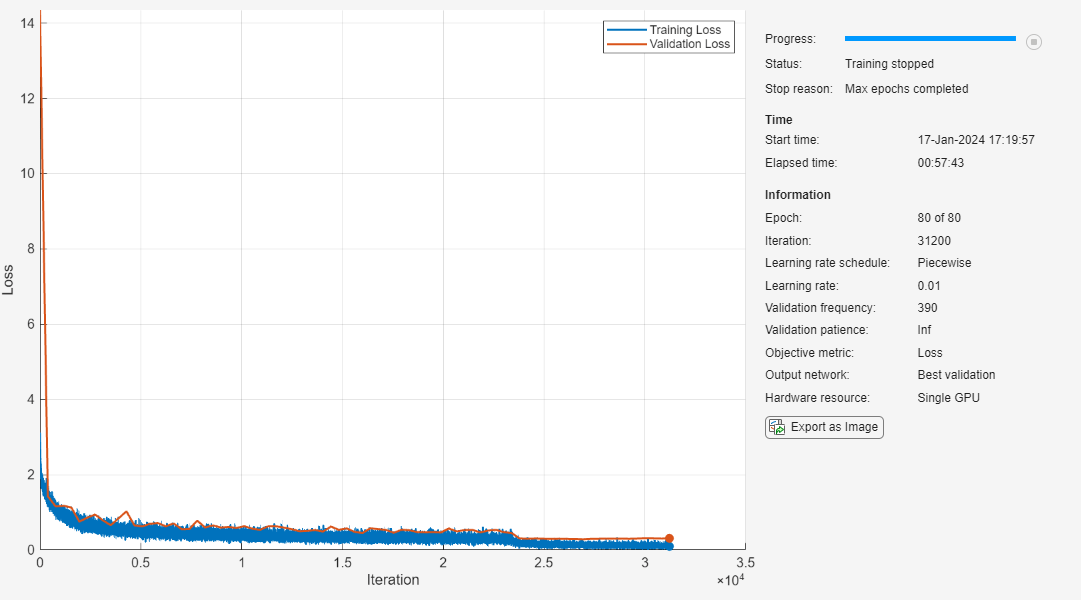

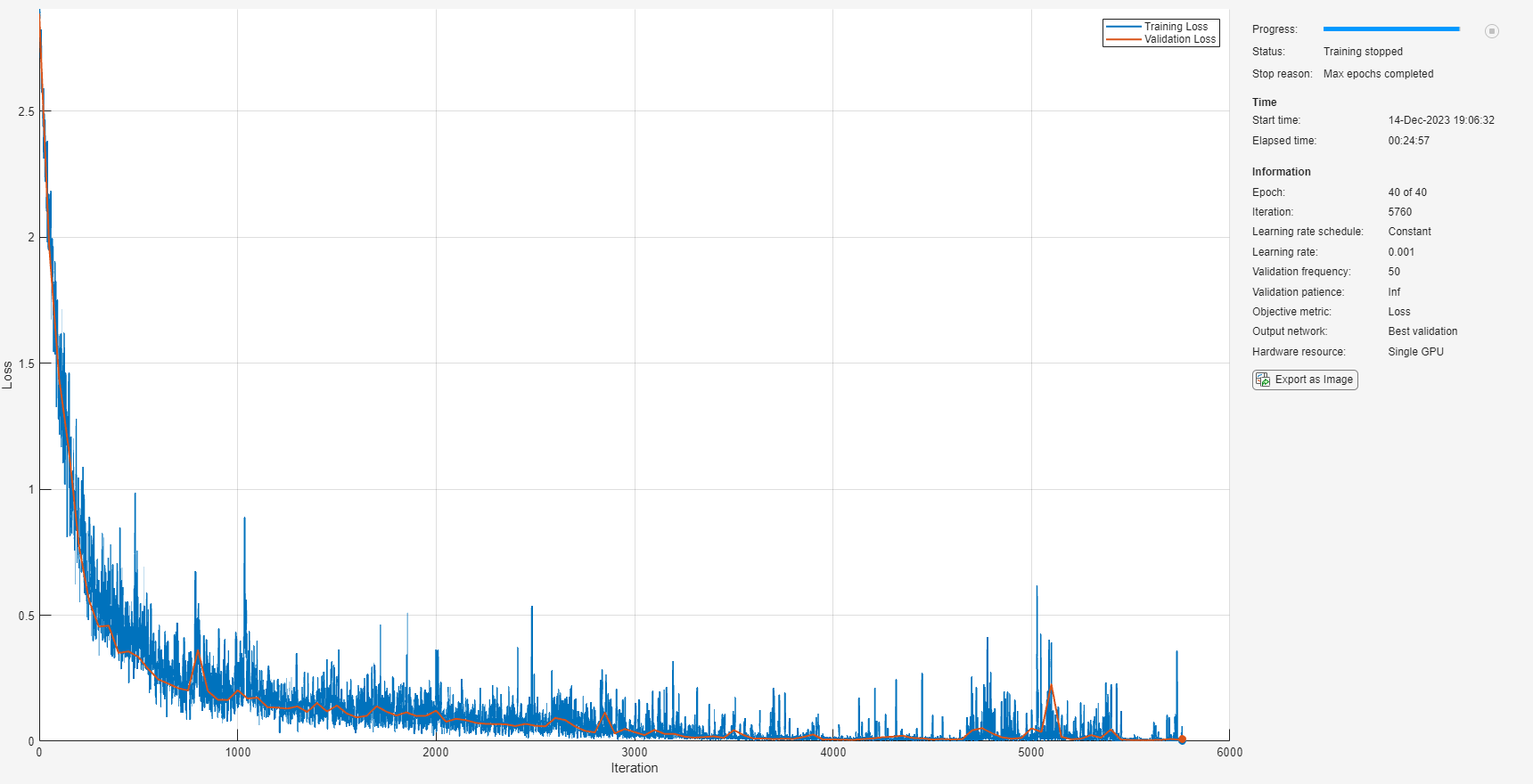

Built-In Training

Train deep learning networks using built-in training functions

After defining the network architecture, you can define training parameters using the trainingOptions function. You can then train the network using the trainnet function. Use the trained network to predict class labels or numeric responses.
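The workflow above can be sketched as follows. This is a minimal illustration, not an example taken from this page: the synthetic data, layer sizes, class names, and option values are all assumptions chosen for brevity. It assumes a release that provides trainnet (R2023b or later) and, for the prediction step, minibatchpredict and scores2label (R2024a or later).

```matlab
% Synthetic data (hypothetical): 200 observations, 4 features, 2 classes.
rng(0)
numObservations = 200;
XTrain = randn(numObservations,4);
TTrain = categorical(sum(XTrain,2) > 0,[false true],["neg" "pos"]);

% Define the network architecture (sizes are illustrative).
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(16)
    reluLayer
    fullyConnectedLayer(2)
    softmaxLayer];

% Define training parameters (values are illustrative, not recommendations).
options = trainingOptions("adam", ...
    MaxEpochs=20, ...
    MiniBatchSize=32, ...
    InitialLearnRate=0.01, ...
    Verbose=false);

% Train the network for classification using cross-entropy loss.
net = trainnet(XTrain,TTrain,layers,"crossentropy",options);

% Predict class scores, then convert the scores to class labels.
scores = minibatchpredict(net,XTrain);
YPred = scores2label(scores,categories(TTrain));

% Fraction of training observations classified correctly.
accuracy = mean(YPred == TTrain);
```

For a regression task, the same pattern should apply with numeric targets, no softmax layer, and a regression loss such as "mse" in place of "crossentropy".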


Topics

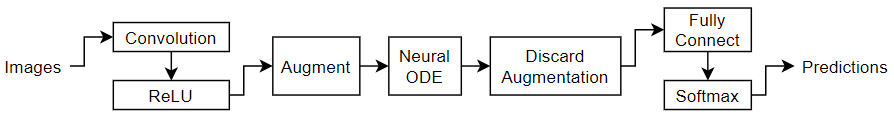

Training Fundamentals

- Create Simple Deep Learning Neural Network for Classification

This example shows how to create and train a simple convolutional neural network for deep learning classification.

- Train Convolutional Neural Network for Regression

This example shows how to train a convolutional neural network to predict the angles of rotation of handwritten digits.

- Create Custom Deep Learning Training Plot

This example shows how to create a custom training plot that updates at each iteration during training of deep learning neural networks using trainnet. (Since R2023b)

- Custom Stopping Criteria for Deep Learning Training

This example shows how to stop training of deep learning neural networks based on custom stopping criteria using trainnet. (Since R2023b)

- Speed Up Deep Neural Network Training

Learn how to accelerate deep neural network training.

- Define Custom Learning Rate Schedule

This example shows how to define a time-based decay learning rate schedule and use it to train a neural network.

- Train Network with Multiple Outputs

This example shows how to train a deep learning network with multiple outputs that predict both labels and angles of rotations of handwritten digits.

- Train Network with Complex-Valued Data

This example shows how to predict the frequency of a complex-valued waveform using a 1-D convolutional neural network.

- Data Sets for Deep Learning

Discover data sets for various deep learning tasks.

- Deep Learning Metrics

Comparison of metrics for deep learning tasks.

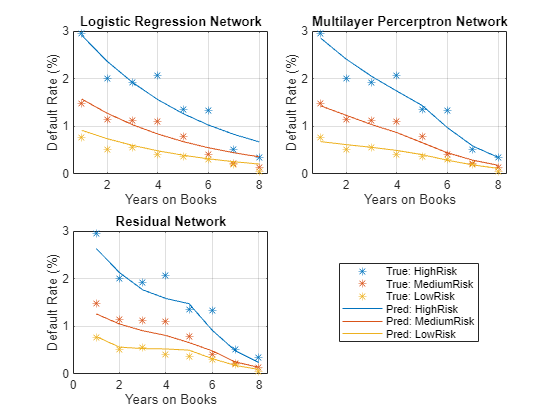

Tabular Data Workflows

- Training Neural Networks with Tabular Data

Learn about training neural networks with tabular data.

- Train Neural Network with Tabular Data

This example shows how to train a neural network with tabular data. (Since R2023b)

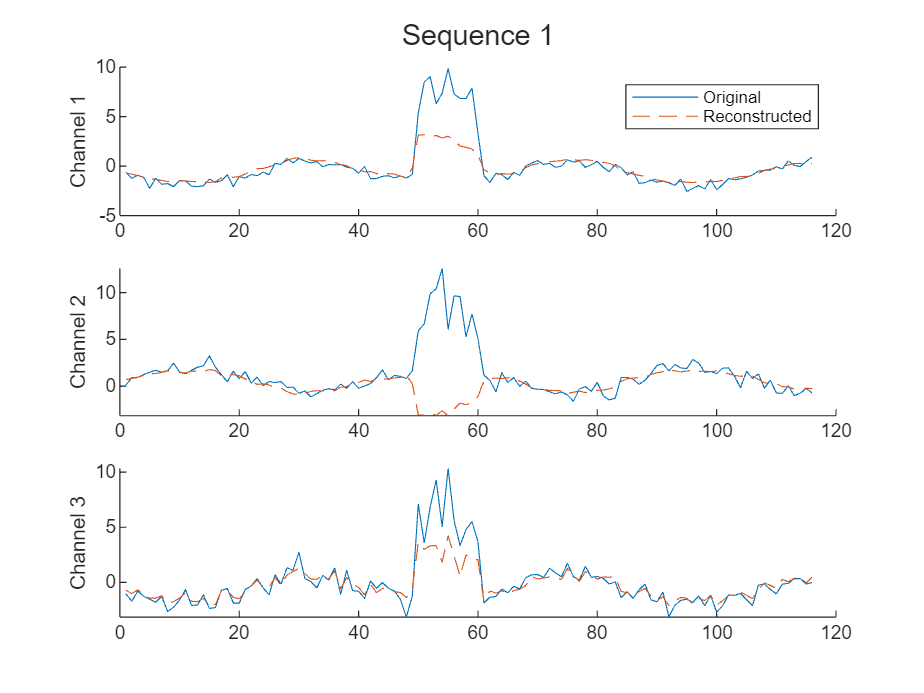

Sequence Data Workflows

- Time Series Forecasting Using Deep Learning

This example shows how to forecast time series data using a long short-term memory (LSTM) network.

- Sequence Classification Using Deep Learning

This example shows how to classify sequence data using a long short-term memory (LSTM) network.

- Sequence-to-Sequence Classification Using Deep Learning

This example shows how to classify each time step of sequence data using a long short-term memory (LSTM) network.

- Sequence-to-Sequence Regression Using Deep Learning

This example shows how to predict the remaining useful life (RUL) of engines by using deep learning.

- Sequence-to-One Regression Using Deep Learning

This example shows how to predict the frequency of a waveform using a long short-term memory (LSTM) neural network.

- Sequence Classification Using 1-D Convolutions

This example shows how to classify sequence data using a 1-D convolutional neural network.

- Train Network with LSTM Projected Layer

Train a deep learning network with an LSTM projected layer for sequence-to-label classification.

- Train Sequence Classification Network Using Data with Imbalanced Classes

This example shows how to classify sequences with a 1-D convolutional neural network, using class weights to adjust the training for imbalanced classes.

- Sequence-to-Sequence Classification Using 1-D Convolutions

This example shows how to classify each time step of sequence data using a generic temporal convolutional network (TCN).

- Sequence Classification Using CNN-LSTM Network

This example shows how to create a 2-D CNN-LSTM network for speech classification tasks by combining a 2-D convolutional neural network (CNN) with a long short-term memory (LSTM) layer.

- Train Network Using Custom Mini-Batch Datastore for Sequence Data

This example shows how to train a deep learning network on out-of-memory sequence data using a custom mini-batch datastore.

- Train Speech Command Recognition Model Using Deep Learning

This example shows how to train a deep learning model that detects the presence of speech commands in audio.

- Chemical Process Fault Detection Using Deep Learning

Use simulation data to train a neural network that can detect faults in a chemical process.

- Battery State of Charge Estimation Using Deep Learning

Define requirements, prepare data, train deep learning networks, verify robustness, integrate networks into Simulink, and deploy models. (Since R2024b)

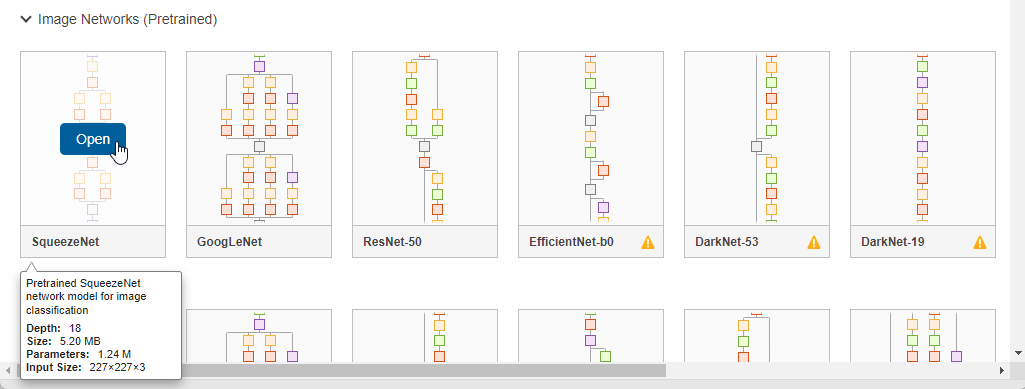

Image Data Workflows

- Train Network on Image and Feature Data

This example shows how to train a network that classifies handwritten digits using both image and feature input data.

- Multilabel Image Classification Using Deep Learning

This example shows how to use transfer learning to train a deep learning model for multilabel image classification.

- Build Image-to-Image Regression Network Using Deep Network Designer

This example shows how to use Deep Network Designer to construct an image-to-image regression network for super resolution.