dlnetwork

Deep learning neural network

Description

A dlnetwork object specifies a deep learning neural network architecture.

Tip

For most deep learning tasks, you can use a pretrained neural network and adapt it to your own data. For an example showing how to use transfer learning to retrain a convolutional neural network to classify a new set of images, see Retrain Neural Network to Classify New Images. Alternatively, you can create and train neural networks from scratch using the trainnet and trainingOptions functions.
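
As a rough illustration of training from scratch with trainnet and trainingOptions, the following sketch trains a small classifier on synthetic feature data. The data, layer sizes, and option values are assumptions chosen only to keep the example short.

```matlab
% Synthetic data (illustrative only): 200 observations, 4 features, 3 classes.
rng(0)
XTrain = rand(200,4);
TTrain = categorical(randi(3,200,1));

% Small feature-classification network defined as a layer array.
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(16)
    reluLayer
    fullyConnectedLayer(3)
    softmaxLayer];

% Training options; these values are arbitrary placeholders.
options = trainingOptions("adam", ...
    MaxEpochs=5, ...
    Verbose=false);

% trainnet returns the trained network as a dlnetwork object.
net = trainnet(XTrain,TTrain,layers,"crossentropy",options);
```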

If the trainingOptions function does not provide the training options that you need for your task, then you can create a custom training loop using automatic differentiation. To learn more, see Train Network Using Custom Training Loop.
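
A custom training loop can be sketched roughly as follows, using dlfeval and dlgradient for automatic differentiation. The helper name modelLoss, the synthetic data, the plain gradient descent update, and the learning rate are all assumptions made for illustration; real loops usually add mini-batching and a more capable solver.

```matlab
% Small regression network (illustrative sizes).
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];
net = dlnetwork(layers);

% Synthetic data in "CB" (channel-by-batch) format.
rng(0)
X = dlarray(rand(4,32),"CB");
T = dlarray(rand(1,32),"CB");

learnRate = 0.01;  % arbitrary placeholder value
for iteration = 1:20
    % Evaluate the loss and gradients with automatic differentiation.
    [loss,gradients] = dlfeval(@modelLoss,net,X,T);

    % Plain gradient descent step applied to every learnable parameter.
    net = dlupdate(@(w,g) w - learnRate*g,net,gradients);
end

% Hypothetical helper: returns the loss and its gradients with respect
% to the learnable parameters of the network.
function [loss,gradients] = modelLoss(net,X,T)
    Y = forward(net,X);
    loss = mse(Y,T);
    gradients = dlgradient(loss,net.Learnables);
end
```

In releases that require local functions at the end of a script, keep modelLoss as the last block in the file.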

If the trainnet function does not provide the loss function that you need for your task, then you can specify a custom loss function to the trainnet function as a function handle.
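
For instance, a custom loss can be passed to trainnet as a function handle that takes the predictions and targets. The sketch below combines the mse and l1loss functions with an arbitrary weight; the handle name customLoss, the weight, and the synthetic regression data are assumptions for illustration.

```matlab
% Hypothetical custom loss: half mean squared error plus a weighted L1 term.
customLoss = @(Y,T) mse(Y,T) + 0.1*l1loss(Y,T);

% Synthetic regression data (illustrative only).
rng(0)
XTrain = rand(100,3);
TTrain = sum(XTrain,2);

layers = [
    featureInputLayer(3)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];

options = trainingOptions("sgdm",MaxEpochs=3,Verbose=false);

% Pass the function handle in place of a built-in loss name.
net = trainnet(XTrain,TTrain,layers,customLoss,options);
```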

For loss functions that require more inputs than the predictions and targets (for example, loss functions that require access to the neural network or additional inputs), train the model using a custom training loop. To learn more, see Train Network Using Custom Training Loop.

If Deep Learning Toolbox™ does not provide the layers you need for your task, then you can create a custom layer. To learn more, see Define Custom Deep Learning Layers. For models that cannot be specified as networks of layers, you can define the model as a function. To learn more, see Train Network Using Model Function.

For more information about which training method to use for which task, see Train Deep Learning Model in MATLAB.

Creation

Syntax

Description

Empty Network

net = dlnetwork creates a dlnetwork object with no layers. Use this syntax to create a neural network from scratch. (since R2024a)
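
A minimal sketch of building a network from an empty dlnetwork object might look like the following. The layer choices and sizes are assumptions; the input layer sets Normalization to "none" so that no normalization statistics are needed.

```matlab
% Start from an empty network.
net = dlnetwork;

% Layers to add (illustrative architecture).
layers = [
    imageInputLayer([28 28 1],Normalization="none")
    convolution2dLayer(3,8,Padding="same")
    reluLayer
    fullyConnectedLayer(10)
    softmaxLayer];

% Layers in an array are connected in series when added.
net = addLayers(net,layers);

% Initialize the learnable and state parameters.
net = initialize(net);

% Run a forward pass on random data in "SSCB" format.
X = dlarray(rand(28,28,1,2),"SSCB");
Y = predict(net,X);
```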

Network with Input Layers

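net = dlnetwork(layers) creates a neural network from the specified layers and initializes its learnable and state parameters. This syntax uses the input layer in layers to determine the size and format of the learnable and state parameters of the neural network.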

Use this syntax when layers defines a complete single-input neural network, has layers arranged in series, and has an input layer.
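
As a small example of this syntax, the sketch below creates an initialized network from a layer array with an input layer and runs a prediction. The layer sizes and random input are assumptions.

```matlab
% Series network with an input layer (illustrative sizes).
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(2)
    softmaxLayer];

% The input layer determines the parameter sizes, so the network is
% initialized on creation.
net = dlnetwork(layers);

% Predict on 5 random observations in "CB" format.
X = dlarray(rand(4,5),"CB");
Y = predict(net,X);
```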

net = dlnetwork(layers,OutputNames=names) also sets the OutputNames property. The OutputNames property specifies the layers or layer outputs that correspond to network outputs.

Use this syntax when layers defines a complete single-input multi-output neural network, has layers arranged in series, and has an input layer.
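
One way to picture a multi-output series network is to expose an intermediate activation alongside the final output. In the sketch below, the layer names and the choice of outputs are assumptions for illustration.

```matlab
% Named layers so that outputs can be referenced by name.
layers = [
    featureInputLayer(4,Name="in")
    fullyConnectedLayer(8,Name="fc1")
    reluLayer(Name="relu")
    fullyConnectedLayer(2,Name="fc2")
    softmaxLayer(Name="softmax")];

% Return both the hidden activations and the final probabilities.
net = dlnetwork(layers,OutputNames=["relu" "softmax"]);

X = dlarray(rand(4,5),"CB");
[hidden,probs] = predict(net,X);
```

The outputs of predict follow the order given in OutputNames.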

net = dlnetwork(layers,Initialize=tf) specifies whether to initialize the learnable and state parameters of the neural network. When tf is 1 (true), this syntax is equivalent to net = dlnetwork(layers). When tf is 0 (false), this syntax is equivalent to creating an empty network and then adding layers using the addLayers function.
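
The effect of the Initialize option can be checked through the Initialized property, as in this short sketch. The layers are arbitrary, and the variable wasInitialized is introduced only for illustration.

```matlab
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer];

% Create the network without initializing its parameters.
net = dlnetwork(layers,Initialize=false);
wasInitialized = net.Initialized;

% Initialize later, for example after further edits to the network.
net = initialize(net);
isInitializedNow = net.Initialized;
```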

Network With Unconnected Inputs

net = dlnetwork(layers,X1,...,XN) creates a neural network from the specified layers and uses X1,...,XN to determine the size and format of the learnable parameters and state values of the neural network, where N is the number of network inputs.

Use this syntax when layers defines a complete neural network, has layers arranged in series, and has inputs that are not connected to input layers.
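
For a network without an input layer, a formatted dlarray can serve as the example input, as in the following sketch. The layer sizes and the "CB" data format are assumptions.

```matlab
% Series network with no input layer.
layers = [
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(2)];

% Example input: 4 channels, 5 observations, in "CB" format.
X1 = dlarray(rand(4,5),"CB");

% The example input determines the sizes of the learnable parameters.
net = dlnetwork(layers,X1);

Y = predict(net,X1);
```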

net = dlnetwork(layers,X1,...,XN,OutputNames=names) also sets the OutputNames property.

Use this syntax when layers defines a complete neural network, has multiple outputs, has layers arranged in series, and has inputs that are not connected to input layers.

Conversion

net = dlnetwork(prunableNet) converts a TaylorPrunableNetwork to a dlnetwork object by removing filters selected for pruning from the convolution layers of prunableNet and returns a compressed dlnetwork object that has fewer learnable parameters and is smaller in size.

net = dlnetwork(mdl) converts mdl to a dlnetwork object.

Input Arguments

Properties

Object Functions

addInputLayer | Add input layer to network |

addLayers | Add layers to neural network |

removeLayers | Remove layers from neural network |

connectLayers | Connect layers in neural network |

disconnectLayers | Disconnect layers in neural network |

replaceLayer | Replace layer in neural network |

getLayer | Look up a layer by name or path |

expandLayers | Expand network layers |

groupLayers | Group layers into network layers |

summary | Print network summary |

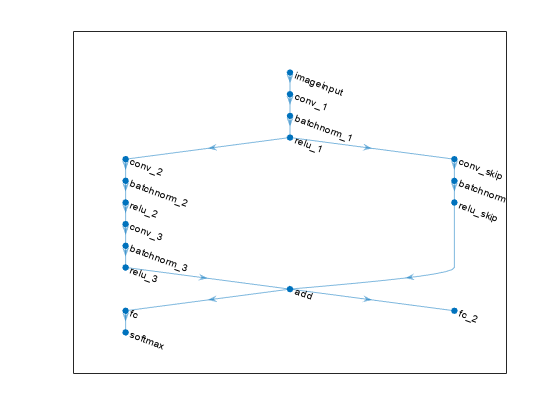

plot | Plot neural network architecture |

initialize | Initialize learnable and state parameters of neural network |

predict | Compute deep learning network output for inference |

forward | Compute deep learning network output for training |

resetState | Reset state parameters of neural network |

setL2Factor | Set L2 regularization factor of layer learnable parameter |

setLearnRateFactor | Set learn rate factor of layer learnable parameter |

getLearnRateFactor | Get learn rate factor of layer learnable parameter |

getL2Factor | Get L2 regularization factor of layer learnable parameter |
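
Several of the object functions listed above can be combined to assemble a network with a skip connection. The sketch below is one possible arrangement; the layer names and sizes are assumptions chosen for illustration.

```matlab
% Start from an empty network and add a series of named layers.
net = dlnetwork;
mainBranch = [
    featureInputLayer(4,Name="in")
    fullyConnectedLayer(4,Name="fc1")
    reluLayer(Name="relu")
    additionLayer(2,Name="add")
    fullyConnectedLayer(2,Name="fc2")];
net = addLayers(net,mainBranch);

% Skip connection: route the input to the second input of the addition layer.
net = connectLayers(net,"in","add/in2");

% Initialize the parameters and print a summary of the network.
net = initialize(net);
summary(net)

% Inference on 3 random observations in "CB" format.
X = dlarray(rand(4,3),"CB");
Y = predict(net,X);
```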

Examples

Extended Capabilities

Version History

Introduced in R2019b

See Also

trainnet | trainingOptions | dlarray | dlgradient | dlfeval | forward | predict | initialize | TaylorPrunableNetwork